Dynatrace is proud to be an Advanced Technology Partner

Fully automated, AI-powered observability across AWS hybrid-cloud environments

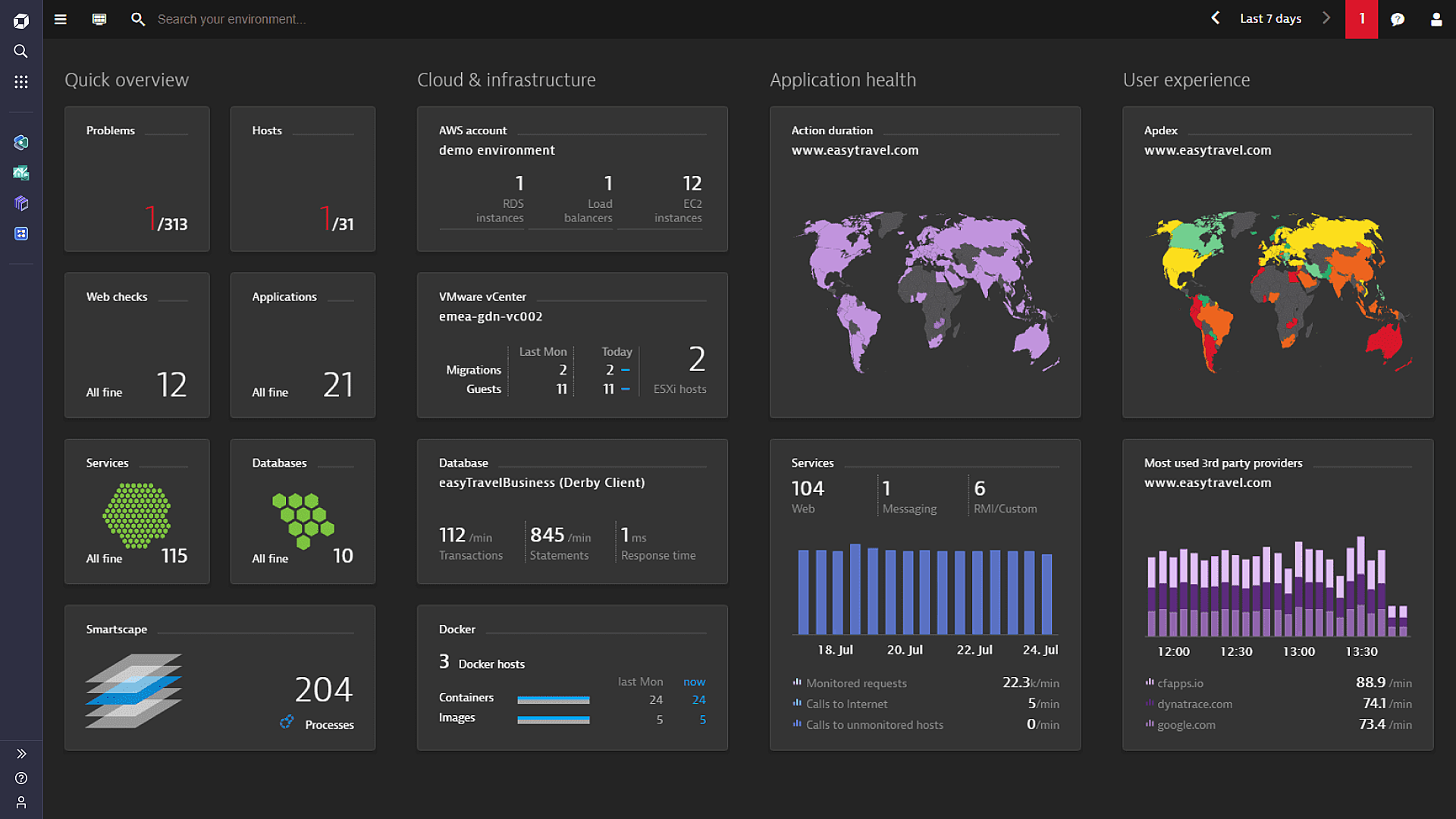

Dynatrace has pioneered and expanded the collection of observability data in highly dynamic cloud environments. In addition to metrics, logs, and traces, Dynatrace collects user experience data for full, end-to-end visibility, helping you deliver answers, not just more data.

Simple installation

Install Dynatrace OneAgent and that’s it! The rest is completely automated, with zero manual configuration.

Hands-free monitoring

Let AI analyze problems in milliseconds and pinpoint their underlying root cause.

Less noise, more problem-solving

Let Dynatrace consolidate all related performance issues into a single actionable notification.

Out-of-the-box support for cloud-native architectures

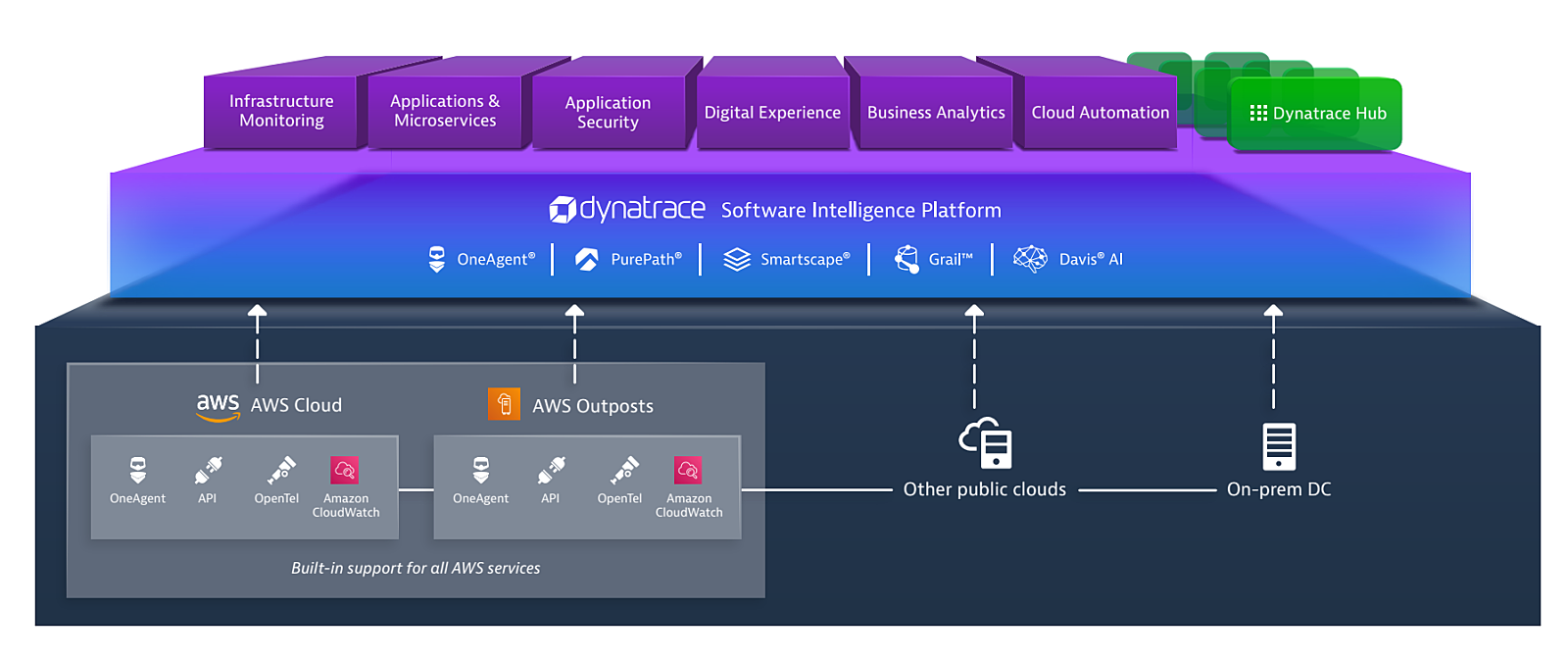

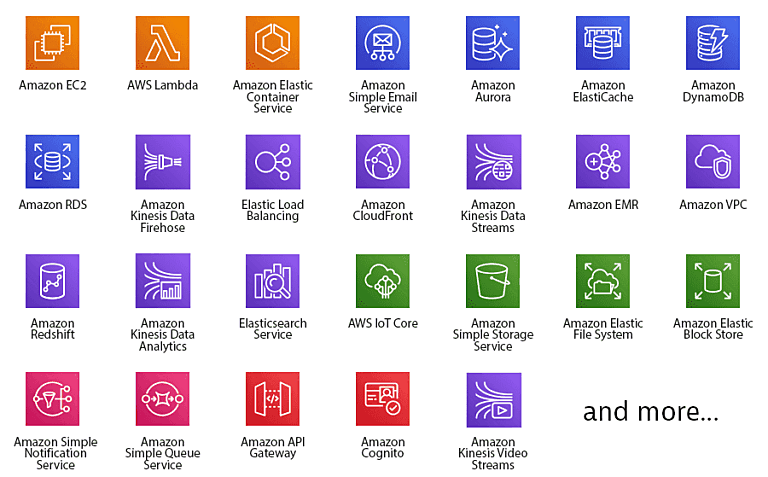

Integrate Dynatrace with AWS for intelligent monitoring of services running in the AWS Cloud. The AWS integration helps you stay on top of the dynamics of your enterprise and scale your AWS hybrid cloud environment. In addition to automatic full-stack monitoring, Dynatrace provides comprehensive support for a wide range of AWS services.

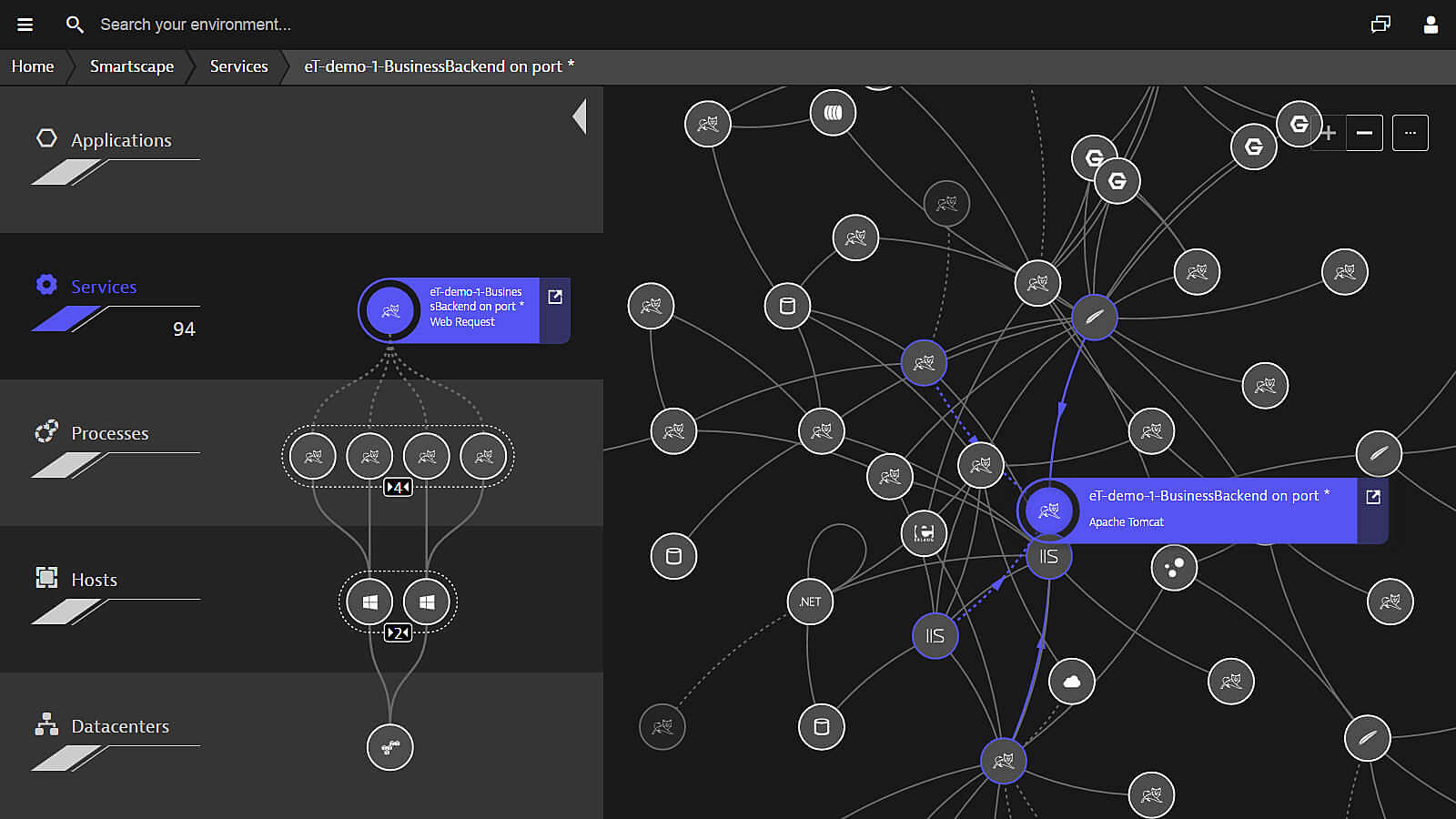

Real-time observability into dynamic environments

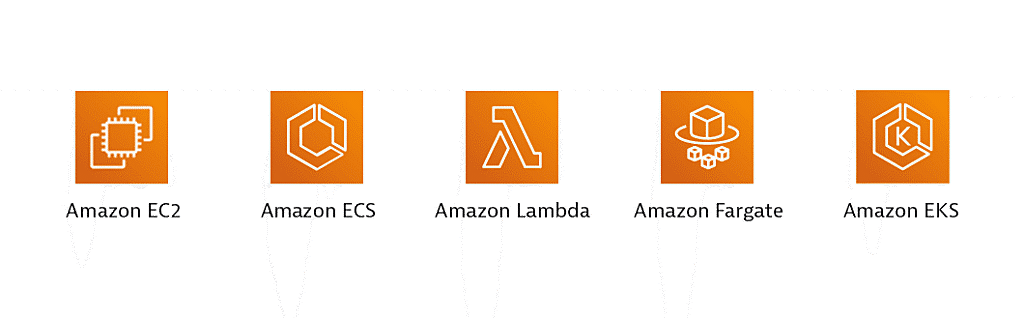

With automatic instrumentation for dynamic microservices and unique hybrid support, Dynatrace monitors your entire cloud, including all dependencies, in real time with no blind spots. Dynatrace OneAgent supports byte-code instrumentation for Amazon EC2, Amazon Elastic Container Service, AWS Lambda, AWS Fargate, and Amazon Elastic Kubernetes Service.

Ever-growing support for AWS services

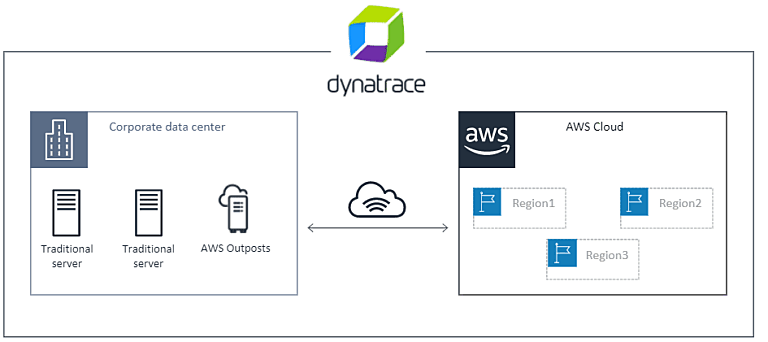

Automatic and intelligent hybrid-cloud observability for AWS environments

Purpose-built for the cloud, Dynatrace automatically discovers, baselines, and intelligently monitors dynamic hybrid environments.

- Single view across the ecosystem — AWS, hybrid (including AWS Outposts) or multi-cloud

- One agent, fully automated, with scale, out of the box

- Auto deployment, configuration and intelligence; integrated with Amazon CloudWatch metrics and metadata

- AI continuously baselines performance and serves precise root causation and contextual data for rapid MTTR
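To make the idea of baselining concrete, here is a minimal sketch of a statistical baseline over recent response times. It is an illustration only and does not represent how Dynatrace computes its baselines; the function names, the sample data, and the "mean plus or minus k standard deviations" rule are all assumptions chosen for simplicity.

```python
from statistics import mean, stdev


def baseline_band(history, k=3.0):
    """Return (lower, upper) as mean +/- k standard deviations of history.

    Hypothetical helper: a simple stand-in for a learned baseline.
    """
    if len(history) < 2:
        raise ValueError("need at least two samples to build a baseline")
    center = mean(history)
    spread = stdev(history)
    return center - k * spread, center + k * spread


def is_anomalous(history, value, k=3.0):
    """Flag a new measurement that falls outside the baseline band."""
    lower, upper = baseline_band(history, k)
    return value < lower or value > upper


# Illustrative response times in milliseconds (made-up sample data).
response_times_ms = [118.0, 122.0, 120.0, 119.0, 121.0, 120.0]

print(is_anomalous(response_times_ms, 121.5))  # within the band
print(is_anomalous(response_times_ms, 180.0))  # far outside the band
```

A production baseline would typically also account for seasonality and traffic volume; this sketch only shows the basic "learn normal, flag deviations" pattern.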

Migration and modernization of applications

Whether you are re-hosting, re-architecting, or re-platforming, Dynatrace provides you with actionable insights every step of the way:

1. Assess: Understand your technology stack. Discover all your hosts, processes, services and technologies.

2. Plan: Analyze your findings. Make architectural decisions and plan your migration.

3. Migrate: Gain visibility into your hybrid cloud. Stay on top of things while you're running two versions of your infrastructure at the same time.

4. Operate: Validate and operate. Ensure architectural integrity and enable autonomous cloud operations.

Learn about cloud migration best practices using AWS and Dynatrace to help avoid the pitfalls that many organizations face through their cloud journey.

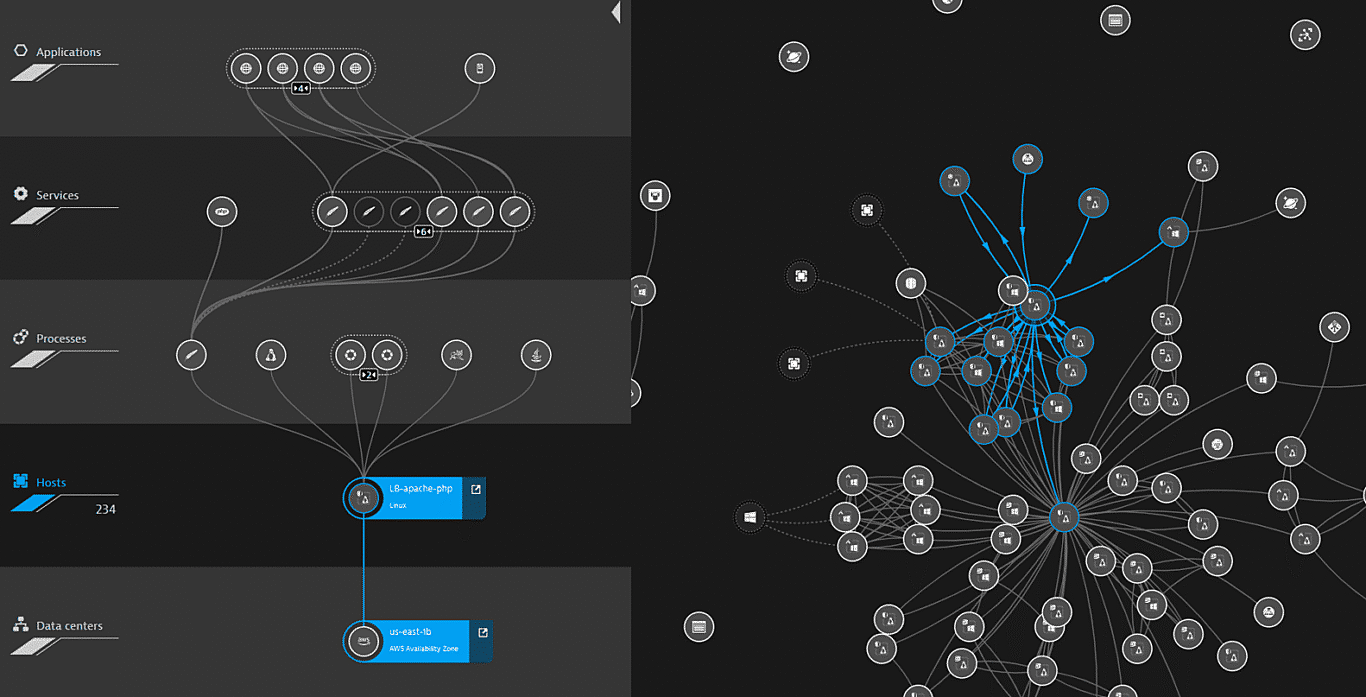

Microservices and containers: real-time observability into dynamic environments

Dynatrace was purpose-built for highly dynamic architectures like those deployed on AWS.

- Continuous auto-discovery of microservices and containers in under 5 minutes, with no code or image changes

- Monitors transparently and automatically – zero manual instrumentation required

- Dynatrace OneAgent supports byte-code instrumentation for Amazon EC2, Amazon Elastic Container Service, AWS Lambda, AWS Fargate, and Amazon Elastic Kubernetes Service

- Automatic dependency mapping in real time across the entire environment

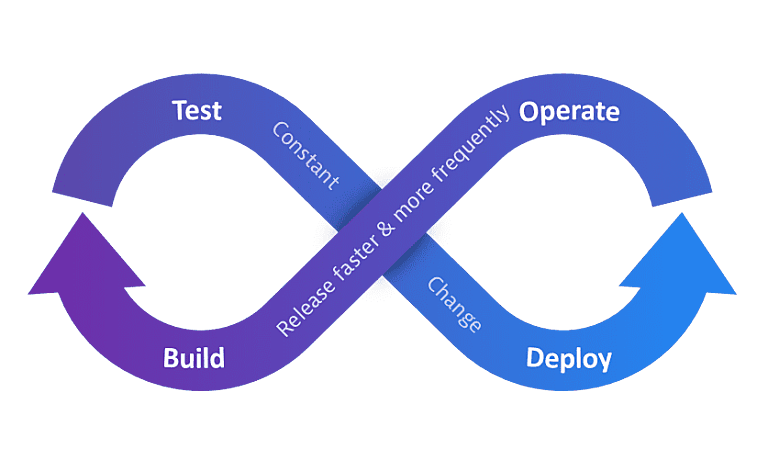

Empower DevOps collaboration and release better software faster

Innovate faster, automate operations, and improve business outcomes

Dynatrace empowers DevOps collaboration by leveraging AI and automation to release better software faster.

- Advanced observability throughout CI/CD software pipelines

- Automate Service Level Objective based quality gates to stop bad code changes before they reach production

- Automate deployment to release higher quality applications more frequently

- Automate operations to auto-mitigate and self-remediate bad deployments in production
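As a rough illustration of what a Service Level Objective based quality gate does, the sketch below compares measurements from a candidate build against a set of objectives and blocks promotion if any objective is violated. It is not the Dynatrace or Keptn API; the `SloObjective` class, the `evaluate_gate` function, the metric names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SloObjective:
    """One objective of a hypothetical quality gate."""
    name: str
    threshold: float
    higher_is_better: bool


def evaluate_gate(objectives, measurements):
    """Return (passed, failures) for one candidate build.

    A missing measurement counts as a failure so that a build is never
    promoted on incomplete data.
    """
    failures = []
    for obj in objectives:
        value = measurements.get(obj.name)
        if value is None:
            failures.append(f"{obj.name}: no measurement")
            continue
        if obj.higher_is_better:
            ok = value >= obj.threshold
        else:
            ok = value <= obj.threshold
        if not ok:
            failures.append(f"{obj.name}: {value} violates threshold {obj.threshold}")
    return (not failures, failures)


# Illustrative objectives and thresholds (assumed values, not recommendations).
OBJECTIVES = [
    SloObjective("availability_pct", 99.5, higher_is_better=True),
    SloObjective("p95_response_ms", 500.0, higher_is_better=False),
    SloObjective("error_rate_pct", 1.0, higher_is_better=False),
]

good_build = {"availability_pct": 99.9, "p95_response_ms": 320.0, "error_rate_pct": 0.2}
bad_build = {"availability_pct": 99.9, "p95_response_ms": 740.0, "error_rate_pct": 0.2}

print(evaluate_gate(OBJECTIVES, good_build))
print(evaluate_gate(OBJECTIVES, bad_build))
```

In a pipeline, a step like this would run after the test stage and fail the job when the gate does not pass, which is what keeps a bad change from reaching production.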

Transform your DevOps ecosystem with Dynatrace, Keptn and AWS

Implement event-driven continuous delivery and automated operations with Dynatrace, Keptn and AWS.

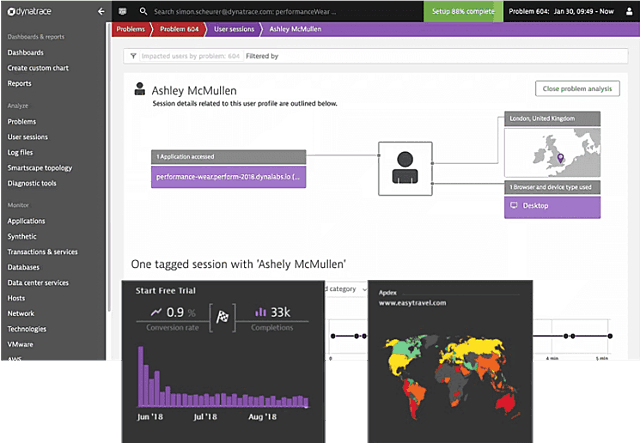

Digital experience and business analytics for best business outcomes

Dynatrace gives you the business impact so you can confidently optimize and deliver exceptional user experiences.

- Understand performance of every single user session or device; prioritize based on business impact

- Single view across AWS ecosystem – from users and edge devices, to apps and cloud platforms

- Democratization of data – break down silos for collaboration across the business, IT and marketing

To learn more about AWS, Dynatrace, and our digital experience capabilities, watch Create Seamless Digital Customer Experiences with AI-Centered Monitoring.

Time to get to the root cause was significant in the old world. Now we can escalate to the right channels pretty much straight away. Gone are the days when our incident management team spent valuable time trying to find the right support group to escalate issues to.

Dynatrace is proud to be an Advanced Technology Partner

AWS recommends Dynatrace, an AWS Service Ready Partner, to AWS customers based on technical validation by AWS Partner Solution Architects, who reviewed product availability and architecture for the following services:

- AWS Lambda for end-to-end distributed tracing across hybrid and multi-cloud environments

- AWS PrivateLink for increased security

- Amazon RDS for visibility and understanding performance

- Amazon Linux 2 for visibility and understanding performance

- AWS Outposts for visibility at scale across on-prem and cloud as a single solution

Read more about Dynatrace’s recent recognition by AWS for experience and expertise in Applied AI.

Read more about Dynatrace as an AWS Well-Architected Framework M&G Lens Partner, providing prescriptive guidance on key concepts and best practices for optimizing management and governance across AWS environments.

Simplify and accelerate procurement with AWS Marketplace

✓ Pricing and terms that fit your technical and business needs.

✓ Tap into already-committed budget and get started right away!

✓ Use committed AWS spend to pay down units.

✓ Procure with standardized terms from all sellers.

✓ Consolidated billing from AWS.

✓ It’s all good!

Sign up for AWS monitoring today!

You’ll be up and running in under 5 minutes:

Sign up, deploy our agent, and get unmatched insights out of the box.