What is Apache Spark?

Apache Spark is an open-source cluster-computing framework. Originally developed at the University of California, Berkeley's AMPLab, the Spark codebase was later donated to the Apache Software Foundation, which has maintained it since. Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. As a fast, in-memory data processing engine, Apache Spark lets data workers efficiently run workloads that require fast iterative access to datasets, such as streaming, machine learning, and SQL.

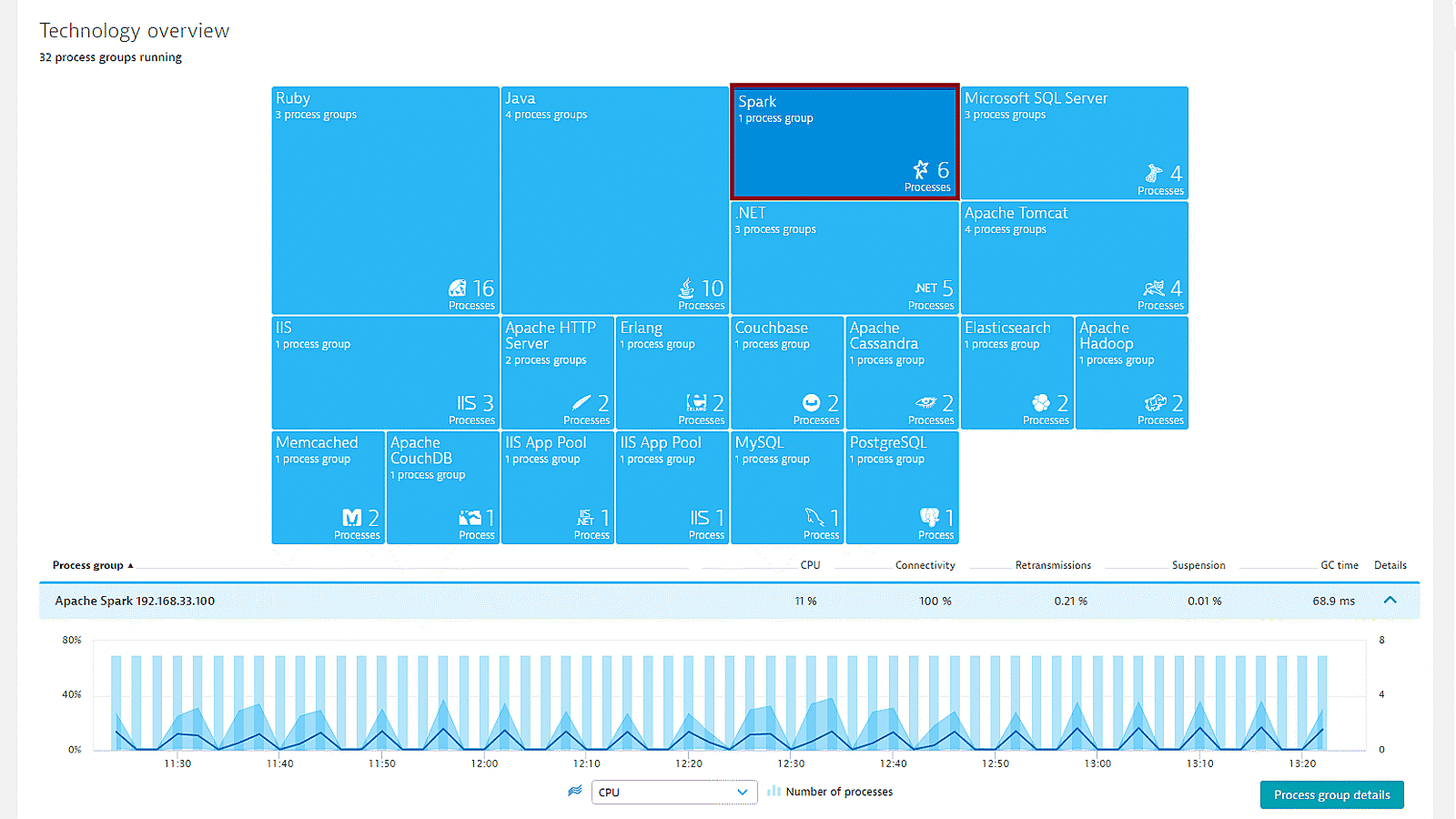

Dynatrace monitors and analyzes the activity of your Apache Spark processes, providing Spark-specific metrics alongside all infrastructure measurements. With Apache Spark monitoring enabled globally, Dynatrace automatically collects Spark metrics whenever a new host running Spark is detected in your environment. This gives you insight into the resource usage, job status, and performance of Spark Standalone clusters.

Start monitoring your Spark components in under five minutes!

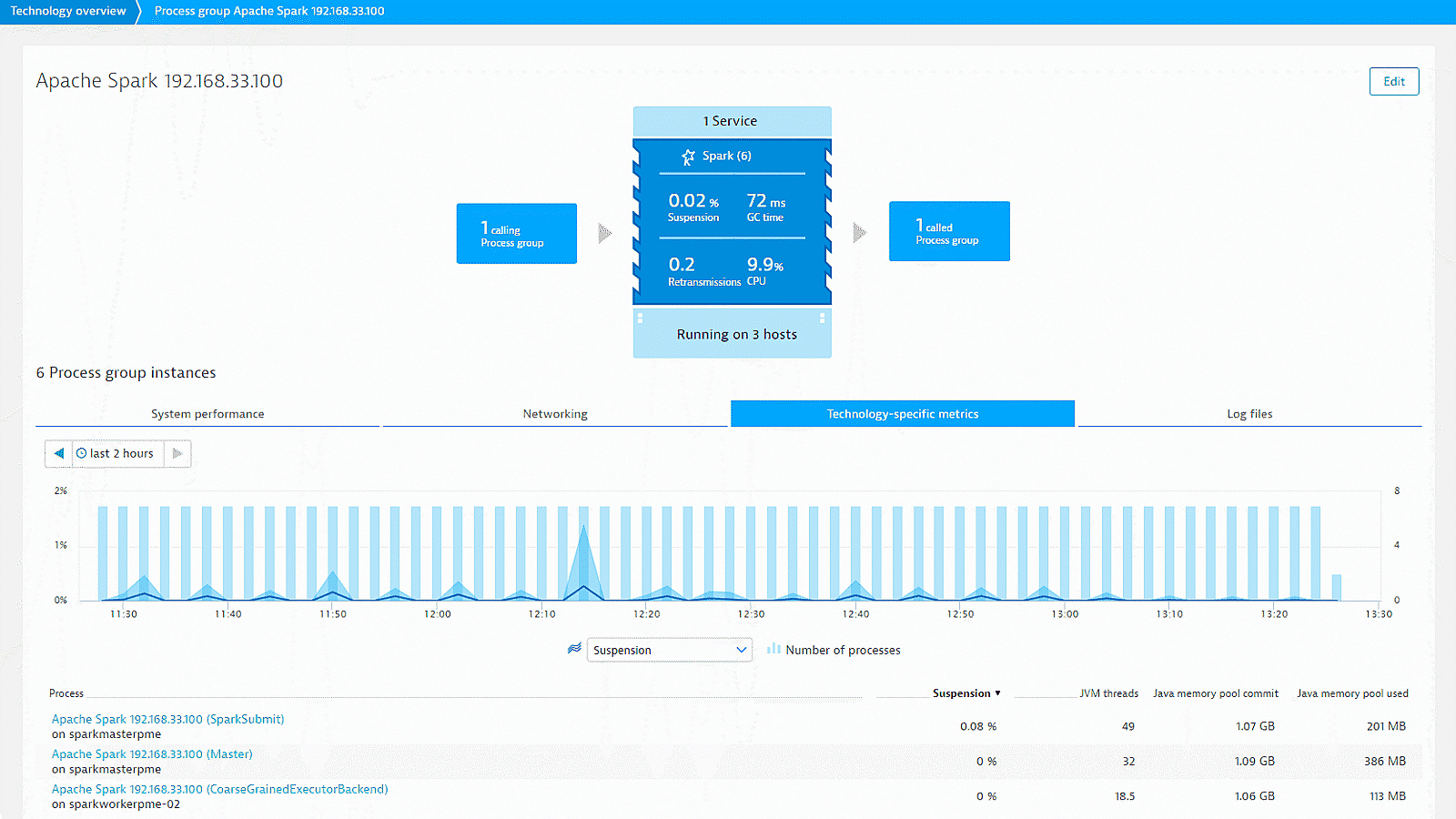

In under five minutes, Dynatrace detects your Apache Spark processes and shows metrics like CPU, connectivity, retransmissions, suspension rate and garbage collection time.

- Manual configuration of your monitoring setup is no longer necessary.

- Auto-detection starts monitoring new hosts running Spark.

- All data and metrics are retrieved immediately.

Monitor your Spark components

Dynatrace shows performance metrics for the three main Spark components:

- Cluster manager

- Driver program

- Worker nodes

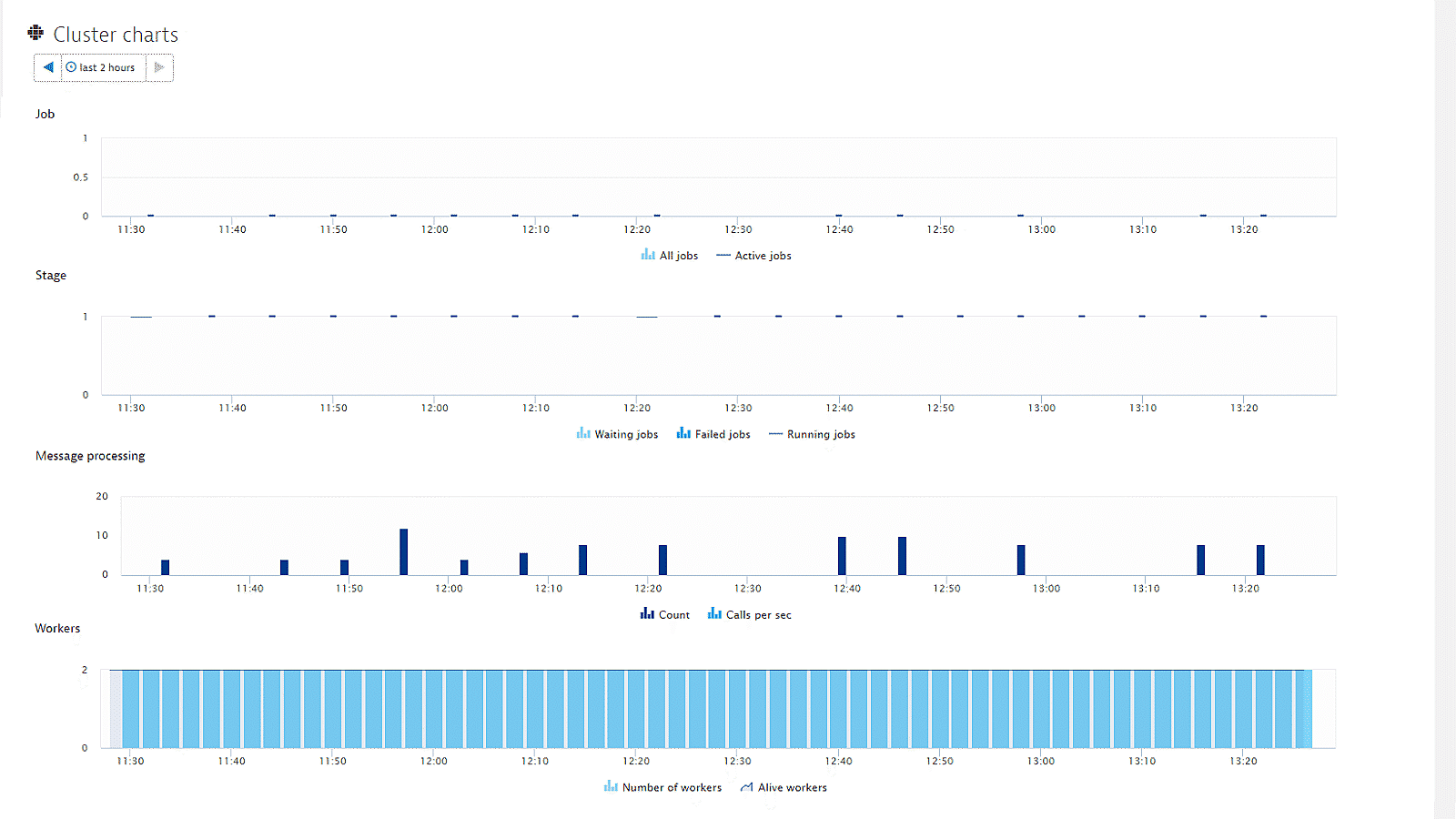

Apache Spark monitoring provides insight into the resource usage, job status, and performance of Spark Standalone clusters. The Cluster charts section provides all the information you need regarding Jobs, Stages, Messages, Workers, and Message processing.

For the full list of cluster and worker metrics, please visit our detailed blog post about Apache Spark monitoring.

Access valuable Spark worker metrics

Apache Spark metrics are presented alongside other infrastructure measurements, enabling in-depth cluster performance analysis of both current and historical data.

Spark node and worker monitoring provides metrics including:

- Number of free cores

- Number of worker-free cores

- Number of cores used

- Number of worker cores used

- Number of executors

- Number of worker executors

- and many more

A full list of all provided worker metrics is available in our detailed blog post about Apache Spark monitoring.
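To make the relationship between the core and executor counts above more concrete, here is a minimal Python sketch of how per-worker readings could roll up into cluster-level numbers. It is an illustration only: the `WorkerSnapshot` class, its field names, the `summarize` helper, and the sample values are all hypothetical and are not part of any Dynatrace or Spark API.

```python
from dataclasses import dataclass


@dataclass
class WorkerSnapshot:
    """Hypothetical per-worker reading; field names are illustrative only."""
    host: str
    cores_total: int
    cores_used: int
    executors: int

    @property
    def cores_free(self) -> int:
        # Free cores are the cores the worker has not assigned to executors.
        return self.cores_total - self.cores_used


def summarize(workers):
    """Aggregate per-worker readings into cluster-level totals."""
    total = sum(w.cores_total for w in workers)
    used = sum(w.cores_used for w in workers)
    return {
        "cores_used": used,
        "cores_free": total - used,
        "executors": sum(w.executors for w in workers),
        # Guard against an empty cluster to avoid division by zero.
        "core_utilization": used / total if total else 0.0,
    }


# Made-up sample values, for illustration only.
workers = [
    WorkerSnapshot("worker-1", cores_total=8, cores_used=6, executors=2),
    WorkerSnapshot("worker-2", cores_total=8, cores_used=2, executors=1),
]
summary = summarize(workers)
print(summary)
```

In this sketch, a worker with few free cores next to a lightly loaded one (as with `worker-1` and `worker-2`) is the kind of imbalance that the per-worker metrics are meant to surface.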

Apache Spark advantages

A big advantage of Spark is that developers can build applications that exploit its power, derive insights, and enrich their data science workloads within a single, shared dataset. Spark also lets applications in Hadoop clusters run much faster in memory, and faster even when running on disk.

Because Dynatrace gathers all relevant metrics and data as soon as new hosts start running Spark in your environment, you can easily see the big picture of your entire IT system.