Observability in enterprise applications requires more than infrastructure monitoring, synthetic testing, or a log analytics tool. Agent-based technology is the most effective solution when combined with a full-stack approach that includes all of the above. Combined with Software Intelligence (AI), the Dynatrace OneAgent is the only full-stack agent you need to accelerate your enterprise application modernization strategy.

Agent-based Monitoring

In this series, we have been reviewing the various approaches to enterprise application monitoring and their cost-benefit analysis. If you haven’t read the previous posts on logs, infrastructure, and the customer perspective and synthetic monitoring, please do so or risk being confused by seemingly irrelevant physics references and analogies. However, if you have read the first three entries in this series and are willing to read on, I promise you will learn why agent-based monitoring is necessary in your overall enterprise strategy.

Ultimately, when we think of the observer effect and resource costs with respect to application monitoring, we are referring to instrumenting the application code. Code-level monitoring can be accomplished by emitting metric data (e.g. JMX) or through some sort of profiling agent. I will admit that at the component level you may see increased CPU, memory, or execution time due to the monitoring overhead of an agent technology. However, even if the increase were as significant as the 2-3% of resources that many APM solutions consume, I have seen customers using Dynatrace’s OneAgent technology improve the performance of their applications by upwards of 90% in some cases. That modest resource utilization is easily recouped through performance gains and the downtime avoided by discovering small problems early, and that is a significant ROI.

The first step towards utilizing agent-based monitoring is bytecode instrumentation. In Java and .NET, there are native interfaces for interacting with the JVM or CLR to accomplish this, but this is not always the case for other programming languages. Many agent technologies use these interfaces to add sensors and counters to the applications, a technique called profiling. One could manually configure each sensor and counter throughout the bytecode, but think of that airplane with a billion lines of code spread across thousands of applications. Manual instrumentation requires access to the source code, expertise in a specific language, and an intimate knowledge of the application. Monitoring all of the applications manually could consume an entire career, and the plane would be end-of-life by the time the work was completed. The Dynatrace OneAgent automates the bytecode instrumentation so that you can make sure all of your applications are monitored without the need for specialized personnel and skill sets.
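To make the cost of manual instrumentation concrete, here is a minimal sketch of a hand-placed sensor. It is a Python analogy, not bytecode instrumentation and not how any agent works internally; the `timed` decorator, the `CALL_DURATIONS` store, and the `lookup_booking` function are all hypothetical names invented for illustration.

```python
import functools
import time
from collections import defaultdict

# Hypothetical in-memory metric store: function name -> list of durations in seconds.
CALL_DURATIONS = defaultdict(list)


def timed(func):
    """Hand-placed 'sensor': records how long each call to func takes."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Record the duration even when the wrapped call raises.
            CALL_DURATIONS[func.__name__].append(time.perf_counter() - start)
    return wrapper


@timed  # Someone has to remember to add this line to every function worth measuring.
def lookup_booking(booking_id):
    """Hypothetical business method used only for this illustration."""
    return {"id": booking_id, "status": "confirmed"}


lookup_booking(42)
print({name: len(samples) for name, samples in CALL_DURATIONS.items()})
```

Every function that matters needs its own `@timed` line, added by someone with source access and knowledge of the code. Multiply that by thousands of applications and the case for automating the step becomes clear.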

Some agent technologies must also reside inside the JVM and share its heap space. In such scenarios, garbage collection (GC) can become a gap in your data, but how would you know? When garbage collection happens, your applications are paused while the JVM frees memory, and that pause includes any embedded agent technology. This is another big differentiator between the Dynatrace OneAgent and other technologies: it runs natively as its own process on the host rather than inside the JVM, so it can capture GC events when they happen. This way you aren’t missing valuable insight into performance issues. If you have gaps in your data, it will not be very good for your analysis or your career longevity.
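One simple way to answer "how would you know?" is to look for holes in the timestamps your monitoring produced. The sketch below assumes samples are expected at a fixed interval; the function name `find_gaps`, the `tolerance` parameter, and the sample data are hypothetical and chosen only for illustration.

```python
def find_gaps(timestamps, expected_interval, tolerance=1.5):
    """Return (start, end) pairs where consecutive samples are further apart
    than tolerance * expected_interval."""
    gaps = []
    for previous, current in zip(timestamps, timestamps[1:]):
        if current - previous > expected_interval * tolerance:
            gaps.append((previous, current))
    return gaps


# Hypothetical sample times in seconds: one sample per second, with a pause
# between t=3.0 and t=7.5 during which nothing was recorded.
samples = [0.0, 1.0, 2.0, 3.0, 7.5, 8.5, 9.5]
print(find_gaps(samples, expected_interval=1.0))
```

A check like this only tells you that data is missing. It cannot tell you what happened during the pause, which is why the collector needs to keep running while the application is stopped.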

Gaps caused by garbage collection are only one example of where traditional profiling agents fail. Many heavily sample application and transaction metrics to reduce resource consumption. If you are sampling your environment, how do you determine the right sample rate? What if your radar suddenly identifies another aircraft in your airspace? How many samples do you need to identify where it is? How imminent is the problem? At 500+ miles per hour, things happen very quickly. If you can’t get all of the information needed to identify and prioritize a problem, how can you know how to prepare, or will you only have time to react? Is there even an impact you should be concerned about? You can try to look back to find out how you got here, but what if you have no data to show the speed and trajectory of the aircraft because it wasn’t instrumented? In many cases like this, poor instrumentation or gaps in your data could lead to a CEE (Career Ending Event).
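The sample-rate question can be put into rough numbers. The sketch below assumes each transaction is kept independently with the same probability, which is a simplification and not a description of how any particular agent samples; `probability_all_missed` is a hypothetical helper name.

```python
def probability_all_missed(sample_rate, affected_transactions):
    """Chance that none of the affected transactions is captured, assuming each
    transaction is sampled independently with probability sample_rate."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    if affected_transactions < 0:
        raise ValueError("affected_transactions must not be negative")
    return (1.0 - sample_rate) ** affected_transactions


# With 1% sampling and 50 failing transactions, the odds of capturing none of them:
print(round(probability_all_missed(0.01, 50), 3))
```

Under those assumptions, a problem that touches 50 transactions has roughly a 60% chance of leaving no trace at all in a 1% sample.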

Microservices

When considering modern cloud-native technologies like Kubernetes or Cloud Foundry in the enterprise, manual instrumentation of agent technologies is no longer an option. The environments are so dynamic and complex that traditional monitoring methods are unable to adapt because they were not designed for such rapid change. In addition, the container technologies that enable this paradigm shift are designed to be immutable, which means that your monitoring strategy needs to be embedded inside your applications before they are deployed. Many customers I have spoken with had even decided not to monitor, as they felt they could just destroy the misbehaving microservice and respawn a replacement. This has not worked out well for most, because the use of microservices doesn’t negate the need to understand what is causing performance and stability headaches. Throwing more hardware at a problem is the old-school IT way to fix things and shouldn’t be revived in a modern stack.

This is one more way that the Dynatrace OneAgent excels. Rather than relying on purpose-built agents for each application technology, it was built with all of these technology stacks and platforms in mind. It automates the instrumentation of our supported technologies whether they are native microservice processes running on a host in your data center, in a virtual machine in the cloud, in a PaaS (e.g. Azure, OpenShift, or Cloud Foundry), or already distributed as a container.

Software Intelligence

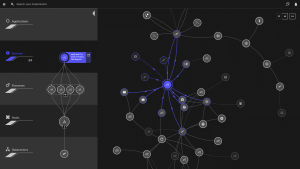

Metrics without context are just data points with no definition. In most solutions, data is only captured as key-value pairs. This is useful in a 2-dimensional world, but Dynatrace works in the real 3-dimensional world and differentiates itself by applying context to the collection of key-value pairs. That context includes dependency data between the keys, and this is how the Software Intelligence of Dynatrace is able to make sense of the millions of metrics that are collected. A transaction has a direct relationship with a user, the services and APIs consumed, the processes on which those services and APIs are hosted, the hosts on which those processes run, and the cloud technology or virtualization platform on which those hosts reside. Dynatrace visualizes this as the ‘SmartScape’, but as you can now imagine, this is more than a pretty picture. The real multi-dimensional data that is represented in this view is only a taste of what’s inside.
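To illustrate why dependency data matters, here is a minimal sketch of a topology stored as a graph and a query that walks it. This is not the Dynatrace data model; the entity names, the `DEPENDS_ON` mapping, and the `impacted_by` function are hypothetical and exist only to show the idea.

```python
from collections import deque

# Hypothetical topology: each entity maps to the entities it directly depends on.
DEPENDS_ON = {
    "user:checkout-click": ["service:booking-api"],
    "service:booking-api": ["process:booking-jvm", "service:payment-api"],
    "service:payment-api": ["process:payment-jvm"],
    "process:booking-jvm": ["host:web-01"],
    "process:payment-jvm": ["host:pay-01"],
    "host:web-01": ["cloud:region-a"],
    "host:pay-01": ["cloud:region-a"],
}


def impacted_by(entity, depends_on):
    """Return every entity that directly or transitively depends on `entity`."""
    # Invert the edges so we can walk from a dependency up to its dependents.
    dependents = {}
    for source, targets in depends_on.items():
        for target in targets:
            dependents.setdefault(target, []).append(source)

    seen = set()
    queue = deque([entity])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(impacted_by("host:pay-01", DEPENDS_ON)))
```

A CPU metric on `host:pay-01` is just a key-value pair. With the relationships attached, the same metric can be traced up to the payment service, the booking service that calls it, and the user action that ultimately suffers.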

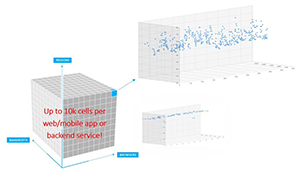

These relationships are the context that can only be obtained when using a full-stack monitoring approach unique to the Dynatrace OneAgent. As you can see above, in a 3-dimensional world, the relationship of the 3rd axis to the data makes it actionable. The Dynatrace Software Intelligence analyzes and baselines the metric data in the same way across multiple dimensions and as many as 10,000 different cells of context. For more details on this I encourage you to read my colleague Tad Helke’s post on AI.

Assuming you have read all of the posts in this series, we have discussed why it is valuable to collect metrics and context from multiple sources across your infrastructure, users, and application code in an automated way. As I have just highlighted, high-fidelity data collected in the most efficient manner is only as valuable as your ability to make that data actionable via context. With a complex application stack, the data sets can be incredibly large, and parsing that data requires a solution beyond a cockpit of charts and dials. Think of how complex an airplane is and how the airline industry has moved from humans actively flying aircraft to fly-by-wire. Pilots now chart courses and automation handles many of the actions based on billions of inputs from sensors. In complex application environments, you need Software Intelligence and machine learning to identify changes and anomalies in the dynamic data set. The data then becomes actionable through auto-discovery, automatically generated baselines, root-cause analysis, and remediation (auto-pilot).
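As a toy illustration of an automatically generated baseline, the sketch below learns "normal" from a short rolling window and flags values that stray too far from it. This is a deliberately simple stand-in (rolling mean and standard deviation), not the Dynatrace baselining algorithm; `find_anomalies`, its parameters, and the sample response times are hypothetical.

```python
import statistics


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes whose value deviates from the mean of the preceding
    `window` values by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        spread = statistics.pstdev(history)
        if spread == 0:
            # A perfectly flat history: treat any change at all as anomalous.
            if values[i] != mean:
                anomalies.append(i)
            continue
        if abs(values[i] - mean) / spread > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical response times in milliseconds with one obvious spike.
response_times_ms = [100, 102, 98, 101, 99, 100, 103, 250, 101, 99]
print(find_anomalies(response_times_ms))
```

Nobody had to configure a static threshold of "alert above 200 ms" here; the baseline came from the data itself. Doing this across millions of metrics and their dependencies is where automation stops being a convenience and becomes a requirement.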

TL;DR

Enterprise applications require more than infrastructure monitoring, synthetic testing, or a log analytics tool. The Dynatrace OneAgent technology is a full-stack agent which includes all of the above. The cost-benefit analysis when seeking monitoring tools is often too narrow in scope and confined to an individual team in the organization. In reality, the scope should be bigger, as the problems in enterprise applications on hybrid-cloud infrastructures span the entire enterprise, adding complex dependencies that cross silos. The Dynatrace OneAgent introduces software intelligence across the enterprise landscape. Imagine you are that big airline and your ground crew isn’t communicating with the pilots about needed repairs, or the gate attendants aren’t able to close the manifest and release the plane for travel. Siloed data and problems leave passengers stranded, repairs unaddressed, cargo lost, and airplanes stuck on the tarmac. Observing a plane in the air (uptime) is much more valuable than observing one on the ground. In quantum parlance, observation of application performance does have an effect, but it is one of customer satisfaction, application performance, and job security.

*No planes or robots were harmed in the making of this blog. Some side-effects of reading may include nausea, groaning and disbelief at the poor analogies, impossible bluster and high-school level physics. The only cure for this is to try Dynatrace for yourself and call us in the morning!
