In the fledgling stage of the Internet of Things (IoT), the focus was on connecting a handful of high-end appliances to the Internet. Then along came the cloud, which accelerated the development of IoT. Suddenly, there was an "always-on" place for storing information and running code at low cost. At that time, it was fine to send all the data to IoT platforms running in the cloud for processing. But not anymore.

Why? IoT devices are now deployed in remote places where connectivity is anything but reliable. The number of connected devices and the amount of data that must be transferred have exploded, so it's no longer efficient, or even possible, to send everything to the cloud. Furthermore, use cases that require latency-sensitive processing are becoming more common.

To handle these new challenges, a solution was needed that could store and process data without a constant connection to the cloud and without latency issues. This is where edge computing comes into play. Edge computing allows data to be processed at the edge, near where it is created, instead of being sent to the cloud. Much like the shift in software architecture from monolithic applications to microservices, we now see a trend where entire datacenters or cloud infrastructures are split into "micro-datacenters": edge devices with compute power distributed everywhere.
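As a minimal, hypothetical illustration of what "processing at the edge" can mean in practice (not taken from any of the platforms discussed in this article), the Python sketch below reduces fixed-size windows of raw sensor readings to compact summaries on the device and counts threshold breaches locally, so only the summaries would need to travel to the cloud. The function names `summarize_window` and `process_stream`, the window size, and the alert threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Summary:
    """Compact record an edge device could forward instead of raw readings."""
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: int


def summarize_window(readings: List[float], alert_threshold: float) -> Summary:
    """Reduce one window of raw readings to a single summary (hypothetical helper)."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    # Threshold breaches are counted on the device, so reacting to them
    # does not depend on a round trip to the cloud.
    alerts = sum(1 for r in readings if r > alert_threshold)
    return Summary(len(readings), min(readings), max(readings), mean(readings), alerts)


def process_stream(readings: List[float], window_size: int,
                   alert_threshold: float) -> List[Summary]:
    """Split a stream into fixed-size windows; the last window may be shorter."""
    return [summarize_window(readings[i:i + window_size], alert_threshold)
            for i in range(0, len(readings), window_size)]


if __name__ == "__main__":
    raw = [20.1, 20.3, 35.0, 20.2, 20.0, 19.8]
    for summary in process_stream(raw, window_size=3, alert_threshold=30.0):
        print(summary)
```

With a window size of 3, six raw readings become two summary records, which is the kind of data reduction that makes unreliable or expensive uplinks workable.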

Processing data at the edge will play a decisive role in the future. One might think the big cloud providers would oppose this, but they have recognized the importance of edge computing for IoT and now offer solutions for it. These include cloud providers such as AWS, Microsoft Azure, Google, IBM and SAP, along with IoT platform providers such as Bosch, GE Predix, PTC ThingWorx and C3 IoT. Their solutions provide services for local compute, analytics, messaging, data caching, sync and machine learning.

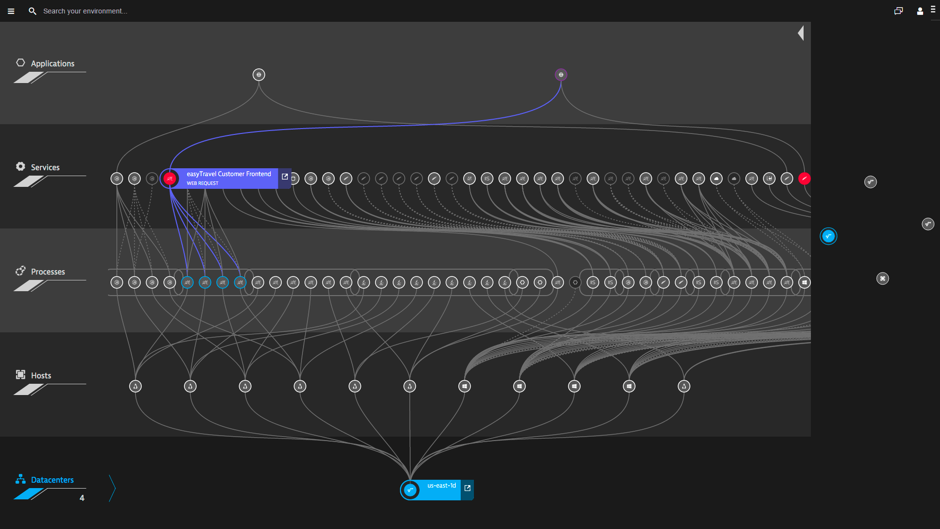

No matter what technology is used to bring processing power into the field, the complexity of the application increases enormously. To keep track of sensor hubs, edge compute nodes, gateways, cloud backends with services, data stores and business applications, smart automated monitoring systems are essential. The dynamic nature of these connected structures no longer allows manual configuration. Dependencies must be detected and analyzed automatically. End-to-end visibility into transactions and a full-stack view of the connected services are required to gain insight into the IoT environment. The Dynatrace monitoring platform feeds the collected data into its AI layer, which applies machine learning models to automatically find the root cause of a detected problem. This ensures that anomalies and outages are detected in real time, keeping the system up and running.
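To show why an automatically detected dependency graph matters for root-cause analysis, here is a deliberately simple, hypothetical sketch in Python. It is not Dynatrace's algorithm; it only illustrates the general idea that, among the unhealthy components, those whose own dependencies are all healthy are the most likely origin of the failure, while unhealthy components upstream of them are probably just symptoms. The function name `root_cause_candidates` and the component names are assumptions.

```python
from typing import Dict, List, Set


def root_cause_candidates(depends_on: Dict[str, List[str]],
                          unhealthy: Set[str]) -> Set[str]:
    """Return unhealthy components none of whose direct dependencies are unhealthy.

    Hypothetical illustration only: a failure deep in the chain (for example an
    edge node) tends to surface as errors in everything that depends on it, so
    the deepest unhealthy components are reported as candidates.
    """
    candidates: Set[str] = set()
    for component in unhealthy:
        deps = depends_on.get(component, [])
        if not any(dep in unhealthy for dep in deps):
            candidates.add(component)
    return candidates


if __name__ == "__main__":
    # Hypothetical dependency graph: each key depends on the listed components.
    graph = {
        "business_app": ["cloud_backend"],
        "cloud_backend": ["gateway", "data_store"],
        "gateway": ["edge_node"],
        "edge_node": ["sensor_hub"],
    }
    failing = {"business_app", "cloud_backend", "gateway", "edge_node"}
    print(root_cause_candidates(graph, failing))
```

Here four components report problems, but only `edge_node` is returned as a candidate, because every other failing component depends on something that is also failing. Without the dependency graph, each of the four alerts would have to be investigated separately.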

You may think this monitoring would best be done by the cloud or IoT platform providers, but that would be the wrong conclusion. While these providers may offer detailed insights within their own environment, their capabilities are limited to that scope. Once a transaction crosses from one provider's environment into another's, tracking errors and identifying dependencies to find the root cause becomes a time-consuming search for a needle in a haystack.

A better solution? Use Dynatrace to monitor all your IoT processes. From real user monitoring of users engaging with things, to processes running on edge devices, to web-scale back-end applications and highly dynamic environments: Dynatrace sees it all. All the relationships, all the dependencies, in context, end-to-end. I invite you to try it now!
