We’re proud to announce the immediate beta availability of Dynatrace OneAgent for Go, the open-source programming language. This is the industry’s first auto-instrumenting monitoring solution for Go-based applications. No code changes to your Go applications are required to use Dynatrace. This means OneAgent for Go can monitor not only your own Go applications but also the third-party Go-based applications that your applications rely on.

The unique OneAgent for Go monitoring technology enables you to capture data well beyond the reach of traditional monitoring solutions. Dynatrace can extract internal Go runtime information that is inaccessible through the public Go runtime APIs.

Activate Go support

Monitoring of Go applications is disabled by default during the beta release period.

Note: This feature requires Dynatrace OneAgent version 125 or higher and Dynatrace Cluster version 127 or higher.

To activate Go support

Monitor Go with Dynatrace

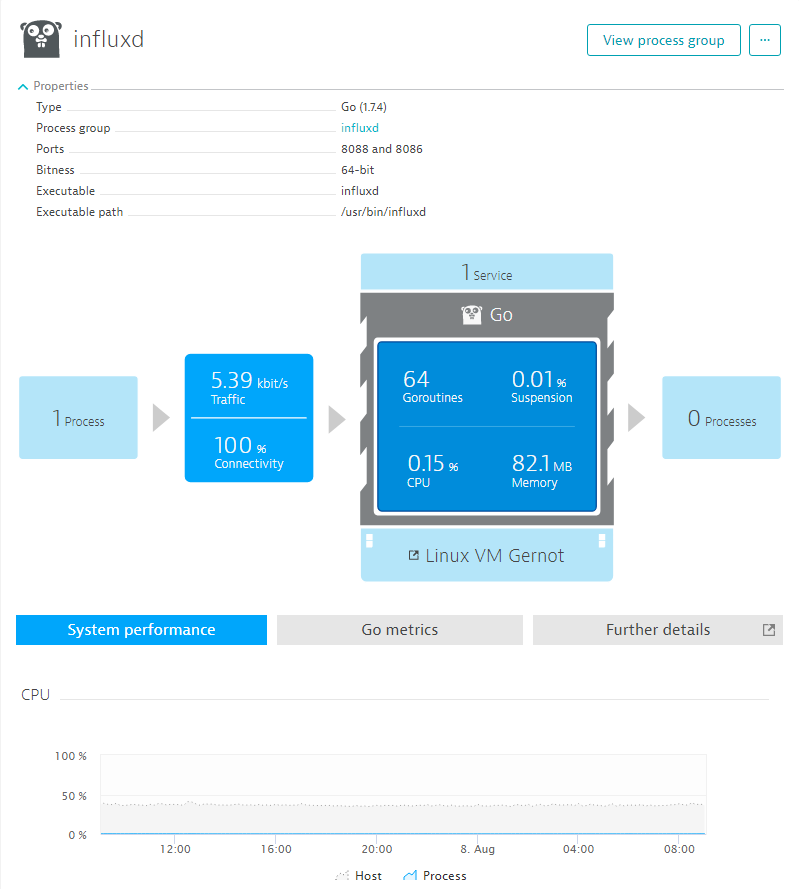

To illustrate how Go monitoring works and which metrics Dynatrace provides for Go applications, we’ll use an example InfluxDB database that receives requests from a load-test script. This example uses the out-of-the-box InfluxDB Ubuntu package binary. To begin monitoring, install Dynatrace OneAgent, activate Go support (see above), and then restart the InfluxDB service by running the command `systemctl restart influxdb`.


After the influxd process restarts, its process view automatically displays Go-specific metrics (see example below). Note that the Go version the executable was built with (1.7.4) is listed under Properties.

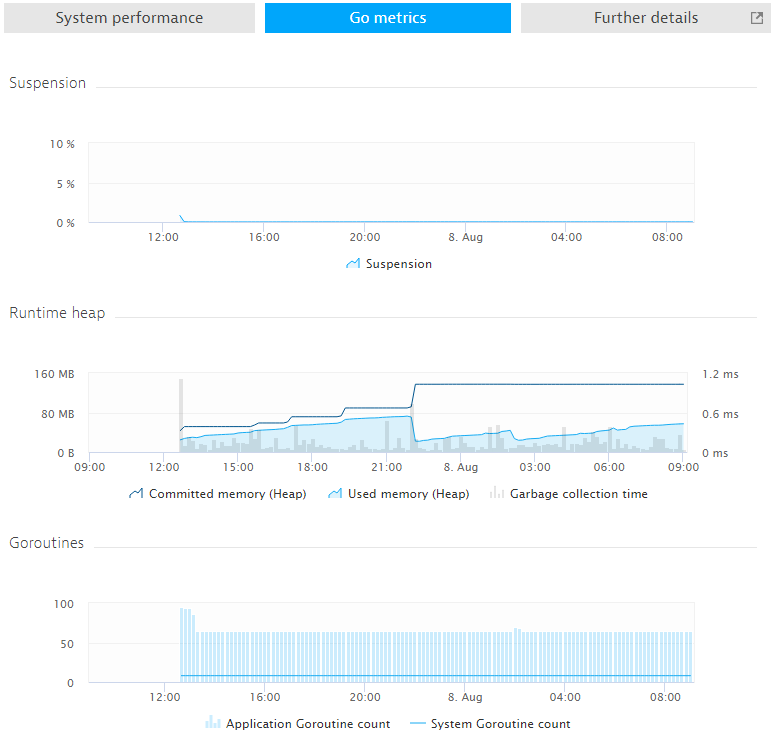

Key metrics

Dynatrace OneAgent immediately begins tracking the following important Go metrics.

| Metric | Description |
|---|---|
| Suspension | Share of overall application CPU time consumed by the Go garbage collector, as a percentage. |
| Runtime heap | Bytes used/committed to the Go heap, plus Go garbage collector execution times. |
| Goroutine count | Number of Goroutines instantiated by the application and the Go runtime infrastructure. |
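Some of these values have rough counterparts in Go's public runtime API, which can help you sanity-check what the process view shows. The sketch below reads them with `runtime.ReadMemStats` and `runtime.NumGoroutine`. The mapping to the Dynatrace metric names is our own illustrative assumption, not how OneAgent collects its data. `GCCPUFraction` covers CPU time since program start rather than a recent interval, and `PauseTotalNs` counts only stop-the-world pauses, not total collector execution time.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// keyMetrics holds rough public-API counterparts of the three key metrics.
// The mapping to Dynatrace metric names is an illustrative assumption.
type keyMetrics struct {
	GCCPUFraction float64       // "Suspension": share of available CPU time used by GC since start
	HeapInuse     uint64        // "Runtime heap" used: bytes in in-use heap spans
	HeapSys       uint64        // "Runtime heap" committed: heap memory obtained from the OS
	GCPauseTotal  time.Duration // cumulative stop-the-world GC pause time
	Goroutines    int           // "Goroutine count": goroutines that currently exist
}

func readKeyMetrics() keyMetrics {
	var m runtime.MemStats
	runtime.ReadMemStats(&m) // briefly stops the world, so avoid calling it in hot paths
	return keyMetrics{
		GCCPUFraction: m.GCCPUFraction,
		HeapInuse:     m.HeapInuse,
		HeapSys:       m.HeapSys,
		GCPauseTotal:  time.Duration(m.PauseTotalNs),
		Goroutines:    runtime.NumGoroutine(),
	}
}

func main() {
	k := readKeyMetrics()
	fmt.Printf("suspension (GC CPU share): %.3f%%\n", k.GCCPUFraction*100)
	fmt.Printf("heap used/committed: %d/%d bytes, total GC pause: %v\n", k.HeapInuse, k.HeapSys, k.GCPauseTotal)
	fmt.Printf("goroutines: %d\n", k.Goroutines)
}
```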

Click the Further details tab to drill down to the details of the running process.

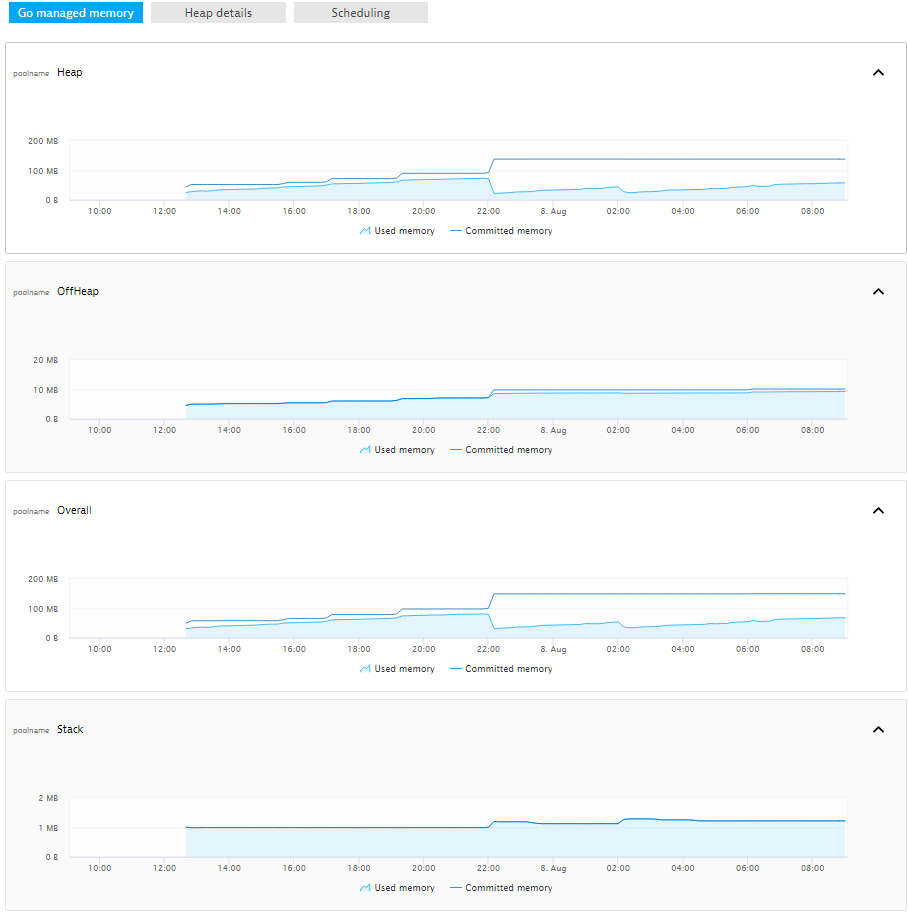

Go managed memory

The Go managed memory tab breaks down memory metrics into various categories:

| Category | Description |
|---|---|
| Heap | Bytes of memory used/committed to the Go runtime heap. |
| Stack | Bytes of memory used/committed to dynamic Go stacks. Go stacks are used to execute Goroutines and grow dynamically. |
| OffHeap | Bytes of memory used/committed for Go runtime internal structures that are not allocated from heap memory. The data structures used in the Go heap implementation are an example of OffHeap memory. |
| Overall | The sum of Heap, OffHeap, and Stack memory. |
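To make these categories concrete, here is a minimal sketch that groups the public `runtime.MemStats` fields into Heap, Stack, OffHeap, and Overall. The grouping is a hypothetical approximation, not Dynatrace's definition. In particular, only the span and cache structures report an "in use" figure, so the OffHeap "used" value below understates the real figure. The Go documentation describes `MemStats.Sys` as the sum of the `...Sys` fields, which is why the Overall committed value should track it.

```go
package main

import (
	"fmt"
	"runtime"
)

// memCategory pairs "used" and "committed" byte counts.
type memCategory struct {
	Used, Committed uint64
}

// managedMemory is a hypothetical grouping of runtime.MemStats fields into
// the Heap / Stack / OffHeap / Overall categories.
type managedMemory struct {
	Heap, Stack, OffHeap, Overall memCategory
}

func readManagedMemory() managedMemory {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)

	heap := memCategory{Used: m.HeapInuse, Committed: m.HeapSys}
	stack := memCategory{Used: m.StackInuse, Committed: m.StackSys}
	// Runtime-internal structures outside the Go heap: span and cache metadata,
	// profiling buckets, GC metadata, and miscellaneous allocations.
	// Only MSpan and MCache expose an "in use" figure, so Used is a lower bound.
	offHeap := memCategory{
		Used:      m.MSpanInuse + m.MCacheInuse,
		Committed: m.MSpanSys + m.MCacheSys + m.BuckHashSys + m.GCSys + m.OtherSys,
	}
	overall := memCategory{
		Used:      heap.Used + stack.Used + offHeap.Used,
		Committed: heap.Committed + stack.Committed + offHeap.Committed, // should match m.Sys
	}
	return managedMemory{Heap: heap, Stack: stack, OffHeap: offHeap, Overall: overall}
}

func main() {
	mm := readManagedMemory()
	for _, c := range []struct {
		name string
		cat  memCategory
	}{{"Heap", mm.Heap}, {"Stack", mm.Stack}, {"OffHeap", mm.OffHeap}, {"Overall", mm.Overall}} {
		fmt.Printf("%-8s used %10d / committed %10d bytes\n", c.name, c.cat.Used, c.cat.Committed)
	}
}
```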

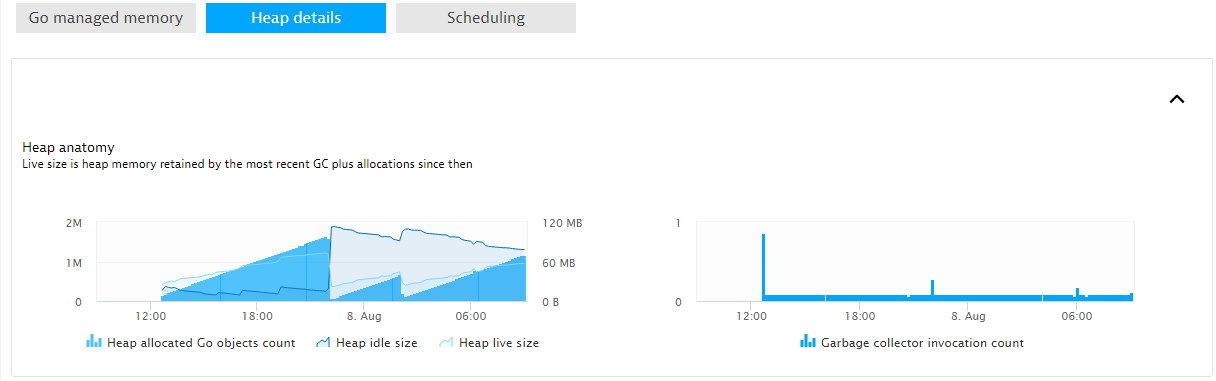

Heap details

The Heap details tab digs deeper into the anatomy of Go heaps.

| Metric | Description |
|---|---|
| Heap allocated Go objects count | Number of Go objects allocated on the heap. |
| Heap idle size | Number of bytes currently not assigned to the Go heap or stack. Idle memory may be returned to the OS or retained by the Go runtime for later reassignment to the heap or stack category. |
| Heap live size | Number of bytes the Go garbage collector considers live: the memory retained by the most recent garbage collector run plus the memory allocated since then. |
| Garbage collector invocation count | Number of Go garbage collector runs. |
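You can watch similar heap figures move in a small experiment. The sketch below allocates short-lived buffers, drops them, forces a collection with `runtime.GC`, and compares `MemStats` snapshots. The public API has no field named "live size". Treating `HeapAlloc` read immediately after a forced GC as an approximation of it is our assumption. The `sink` variable and helper names are hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
)

// heapDetails mirrors the Heap details metrics using public MemStats fields.
type heapDetails struct {
	Objects  uint64 // HeapObjects: number of allocated heap objects
	Idle     uint64 // HeapIdle: bytes in idle (unused) spans
	Released uint64 // HeapReleased: portion of Idle already returned to the OS
	Alloc    uint64 // HeapAlloc: allocated heap bytes; approximates live size right after a GC
	NumGC    uint32 // number of completed GC cycles
}

func readHeapDetails() heapDetails {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return heapDetails{
		Objects:  m.HeapObjects,
		Idle:     m.HeapIdle,
		Released: m.HeapReleased,
		Alloc:    m.HeapAlloc,
		NumGC:    m.NumGC,
	}
}

var sink [][]byte // package-level so the buffers are heap-allocated

// churnAndCollect allocates garbage, makes it unreachable, and forces a GC.
func churnAndCollect() (before, after heapDetails) {
	before = readHeapDetails()
	for i := 0; i < 10000; i++ {
		sink = append(sink, make([]byte, 1024))
	}
	sink = nil   // drop every reference to the buffers
	runtime.GC() // blocks until a full collection has completed
	after = readHeapDetails()
	return before, after
}

func main() {
	b, a := churnAndCollect()
	fmt.Printf("GC runs: %d -> %d\n", b.NumGC, a.NumGC)
	fmt.Printf("heap objects: %d, alloc (approx. live): %d bytes\n", a.Objects, a.Alloc)
	fmt.Printf("idle: %d bytes (%d released to the OS)\n", a.Idle, a.Released)
}
```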

Understand Goroutine scheduling

Go does a fantastic job of hiding Goroutine scheduling details from application developers. Still, a basic understanding of the internal scheduling mechanics will help you read scheduling metrics and detect potential anomalies. Because documentation of Go internals is sparse, here is a short primer to get you started.

The implementation of Goroutine scheduling deals with three central object types: M (Machine), P (Processor), and G (Goroutine). You’ll find many references to these object types in the Go runtime source code. For clarity, we use the following more descriptive terms for M, P, and G:

| Go runtime nomenclature | Expressive name |
|---|---|
| M | Worker thread |
| P | Scheduling context |
| G | Goroutine |

Go executes Goroutines in context of worker threads acquired from a pool of native operating system threads. A Goroutine may be assigned to a different worker thread at any point in time (unless runtime.LockOSThread is used to enforce worker thread affinity).
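The worker-thread affinity mentioned above can be observed directly. This sketch pins a Goroutine with `runtime.LockOSThread`, yields repeatedly with `runtime.Gosched`, and checks that the OS thread ID never changes. It assumes Linux, because `syscall.Gettid` is Linux-only. The helper name is hypothetical. Without the lock, the runtime is free to resume the Goroutine on a different worker thread, so no such guarantee holds.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
)

// sameThreadAcrossYields runs a goroutine pinned with runtime.LockOSThread and
// reports whether it sees the same OS thread ID after every yield.
// Linux-only: syscall.Gettid is not available on other platforms.
func sameThreadAcrossYields(yields int) bool {
	result := make(chan bool)
	go func() {
		runtime.LockOSThread()         // pin this goroutine to its current worker thread
		defer runtime.UnlockOSThread() // release the pin when the goroutine finishes
		tid := syscall.Gettid()
		same := true
		for i := 0; i < yields; i++ {
			runtime.Gosched() // yield; a pinned goroutine resumes on the same thread
			if syscall.Gettid() != tid {
				same = false
			}
		}
		result <- same
	}()
	return <-result
}

func main() {
	fmt.Println("pinned goroutine stayed on one thread:", sameThreadAcrossYields(100))
}
```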

Multiple Goroutines are typically assigned to a single worker thread, and a scheduling context is responsible for the cooperative scheduling of these Goroutines. The Go compiler adds code to each Go function prologue that checks whether the currently executing Goroutine has consumed its 10-millisecond execution time slice (the actual mechanism cleverly uses stack guards to enforce rescheduling). If the time slice is exceeded, the scheduling context sets up the next Goroutine to execute. This is why scheduling is cooperative: if a Goroutine doesn’t invoke Go functions, it won’t be rescheduled.
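The sketch below illustrates a cooperative yield point. With a single scheduling context, a busy Goroutine spins until another Goroutine sets a flag. Because the loop calls `runtime.Gosched` explicitly, the flag-setting Goroutine is guaranteed to get scheduled. Keep in mind that the description above matches the Go versions of this beta (for example, 1.7). Since Go 1.14, the runtime can also preempt Goroutines asynchronously on most platforms, so a loop without function calls no longer stalls the scheduler there. The helper name is hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
)

// spinUntilSignalled runs a busy goroutine on one scheduling context. The busy
// loop yields explicitly, so the goroutine that sets the flag still gets to run.
func spinUntilSignalled() int {
	prev := runtime.GOMAXPROCS(1) // exactly one scheduling context (P)
	defer runtime.GOMAXPROCS(prev)

	var ready int32
	done := make(chan int)
	go func() {
		spins := 0
		for atomic.LoadInt32(&ready) == 0 {
			spins++
			runtime.Gosched() // explicit yield point: let other goroutines run
		}
		done <- spins
	}()
	go func() { atomic.StoreInt32(&ready, 1) }() // needs the single P to run
	return <-done
}

func main() {
	fmt.Println("busy goroutine yielded", spinUntilSignalled(), "time(s) before being signalled")
}
```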

Each set of Goroutines is executed by a worker thread, and execution is controlled by a scheduling context. But what happens if a Goroutine writes a large chunk of data to disk or blocks waiting for an incoming connection? The Goroutine blocks in a system call, and no other Goroutine can be scheduled (remember, rescheduling happens only when Go functions are invoked). Thus all Goroutines assigned to the same worker thread would be blocked as well!

But no worries, Go deals elegantly with this situation. If a Goroutine executes a blocking system call, the scheduling context with the other Goroutines is assigned on the fly to a different worker thread (either a parked or a newly instantiated thread). This explains why the total number of worker threads is typically larger than the number of scheduling context objects. Once the blocking call returns, the Goroutine is again assigned to a scheduling context or, if that assignment fails, placed in the global Goroutine run queue. By the way, the very same principle applies to cgo (Go to C language) calls.

It’s notable that the number of scheduling contexts is the only user configurable setting in the Go scheduling algorithm (see the GOMAXPROCS environment variable and runtime.GOMAXPROCS function). You can’t control the number of worker threads.

Thanks to this hand-off, writing a large chunk of data to disk or waiting for incoming connections won’t block the other Goroutines that were initially assigned to the same worker thread. These Goroutines continue execution on their newly assigned worker thread.
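The hand-off can be demonstrated with one scheduling context. In the sketch below, one Goroutine blocks in a raw `read(2)` on a pipe while the main Goroutine keeps working. If the runtime did not move the single scheduling context to another worker thread, the main loop could never run and the program would hang. This is a Unix-only sketch (`syscall.Pipe`, `syscall.Read`). The raw `syscall.Read` is deliberate: reads through the `os` package may be parked on Go's network poller instead of tying up a thread. The 50 ms sleep is an arbitrary assumption meant to let the reader enter the system call first.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
	"time"
)

// blockingReadDoesNotStall blocks one goroutine in read(2) on a pipe while only
// one scheduling context exists, and checks that another goroutine still
// makes progress. Unix-only.
func blockingReadDoesNotStall() (int, string, error) {
	prev := runtime.GOMAXPROCS(1) // a single scheduling context (P)
	defer runtime.GOMAXPROCS(prev)

	fds := make([]int, 2)
	if perr := syscall.Pipe(fds); perr != nil {
		return 0, "", perr
	}
	defer syscall.Close(fds[0])
	defer syscall.Close(fds[1])

	result := make(chan string, 1) // buffered so the reader never blocks on send
	go func() {
		buf := make([]byte, 16)
		// A raw read bypasses the network poller: the worker thread really
		// blocks in the kernel until data arrives.
		n, _ := syscall.Read(fds[0], buf)
		if n < 0 {
			n = 0
		}
		result <- string(buf[:n])
	}()

	time.Sleep(50 * time.Millisecond) // give the reader time to enter the system call

	// This loop can only run if the runtime handed the single P to another
	// worker thread while the reader's thread sits in the system call.
	progress := 0
	for i := 0; i < 1000; i++ {
		progress++
		runtime.Gosched()
	}

	if _, werr := syscall.Write(fds[1], []byte("ping")); werr != nil {
		return progress, "", werr
	}
	return progress, <-result, nil
}

func main() {
	progress, got, err := blockingReadDoesNotStall()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("made %d steps of progress; blocked reader then received %q\n", progress, got)
}
```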

With these fundamentals in mind, you’re now ready to dive into scheduling metrics.

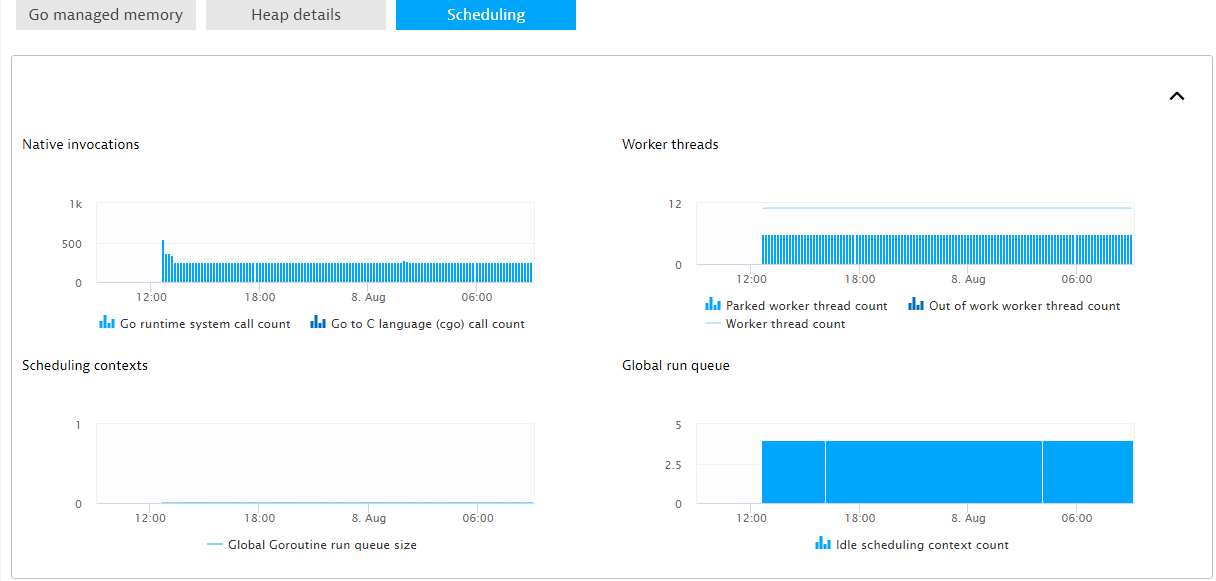

Scheduling

The Scheduling tab provides unique insights into Goroutine scheduling.

| Metric | Description |
|---|---|
| System call count | Number of system calls executed by the Go runtime. This number does not include system calls performed in the context of cgo. |
| cgo call count | Number of cgo calls. |
| Parked worker threads count | Number of worker threads parked by the Go runtime. A parked worker thread doesn’t consume CPU cycles until the Go runtime unparks it. |
| “Out of work” worker threads count | A worker thread is considered out of work when its associated scheduling context has no more Goroutines to execute. The worker thread then attempts to steal Goroutines from other scheduling contexts and from the global run queue. If stealing fails, the worker thread eventually parks itself. The same mechanism applies under high workload: if an idle scheduling context exists, the Go runtime unparks a parked worker thread and associates it with the idle scheduling context. The unparked worker thread is then in the “out of work” state and starts stealing Goroutines. |
| Worker thread count | Number of operating system threads instantiated to execute Goroutines. Go does not terminate worker threads; it keeps them parked for future reuse. |
| Global Goroutine run queue size | Number of Goroutines in the global run queue. Goroutines are placed in the global run queue when the worker thread that executed a blocking system call can’t acquire a scheduling context. Scheduling contexts periodically acquire Goroutines from the global run queue. |
| Idle scheduling context count | A scheduling context is considered idle if it has no more Goroutines to execute and its attempts to acquire Goroutines from the global run queue or from other scheduling contexts have failed. |
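Only a few of these scheduling values are exposed through Go's public API. The sketch below reads the number of OS threads the process has created (via the `threadcreate` profile in `runtime/pprof`), the cgo call count (`runtime.NumCgoCall`), and the current number of scheduling contexts. For a rough view of the rest, running a program with `GODEBUG=schedtrace=1000` makes the runtime print a periodic scheduler summary. That summary includes idle scheduling contexts (`idleprocs`), threads, spinning ("out of work") threads, idle threads, and the global run queue length. The correspondence to the Dynatrace metrics above is our assumption. The struct and helper names are hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
	"runtime/pprof"
)

// schedSnapshot collects scheduling-related values the public runtime API exposes.
type schedSnapshot struct {
	ThreadsCreated int   // entries in the "threadcreate" profile (OS threads created)
	CgoCalls       int64 // cgo calls made by this process so far
	Procs          int   // current number of scheduling contexts (GOMAXPROCS)
	Goroutines     int   // goroutines that currently exist
}

func readSchedSnapshot() schedSnapshot {
	return schedSnapshot{
		ThreadsCreated: pprof.Lookup("threadcreate").Count(),
		CgoCalls:       runtime.NumCgoCall(),
		Procs:          runtime.GOMAXPROCS(0), // argument < 1 only queries
		Goroutines:     runtime.NumGoroutine(),
	}
}

func main() {
	s := readSchedSnapshot()
	fmt.Printf("threads created: %d, cgo calls: %d, scheduling contexts: %d, goroutines: %d\n",
		s.ThreadsCreated, s.CgoCalls, s.Procs, s.Goroutines)
}
```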

Future outlook

This initial beta release of Dynatrace Go monitoring provides some great metrics and Go version detection, but there’s much more to come! The next version of Dynatrace Go monitoring will feature full web-request tracing capabilities—similar to those currently offered for all other supported technologies. Our goal is to make Dynatrace the first and only full-stack monitoring solution in the world that supports end-to-end transaction monitoring of Go processes, with no required changes to your code. As Go adoption grows, this promises to be a game changer.

Credits

Many Dynatrace folks have made important contributions and valuable input to Dynatrace Go technology support. But one individual deserves special acknowledgment: Michael “Mr. Runtime” Obermueller. Our Go support would not have been possible without Michael’s valuable input and vast knowledge of Go internal mechanisms.
