Last week at AWS re:Invent we heard Werner Vogels, CTO of Amazon, talk about how to build the applications of 2020! Listening to Werner’s talk, it became apparent to me that this also means we have to “re:Think” how we go about Performance Engineering. Because of what I heard at re:Invent I almost titled this blog “Performance Engineering is Dead!”, but that wouldn’t reflect what I think is currently happening in our discipline!

Performance Engineering IS NOT DEAD, but it is being disrupted, just as we have seen Dev and Ops being disrupted. Want proof? Besides re:Invent, just look at the agendas of performance engineering conferences. They’re all about Containers, Cloud, PaaS, AI or Serverless. Every talk with DevOps or NoOps in the title is typically jam-packed!

Two recent conferences I was invited to speak at were CMG imPACT and Neotys PAC. These two events were dedicated to Performance and Capacity Management as well as Performance Engineering. I had several lively discussions about how capacity management is supposed to work in virtual and cloud environments, and what it really means to Shift-Left and Shift-Right. At Neotys PAC, Wilson Mar gave a great talk about Performance in DevOps and shared his thoughts on what I would label “The Trades of a Performance Engineer in 2020!”

- Automation: Not only test execution but also automated root cause analysis and auto-mitigation

- Shift-Left: Provide automated performance feedback earlier in the pipeline into Dev and CI tools

- Shift-Right: Influence and leverage production monitoring

- Self-Service: Don’t become the bottleneck! Provide your expertise as DevOps self-service

- APM: You must master APM (Application Performance Management) just as you mastered load testing tools!

- End User Monitoring: That’s the bottom line! Happy Users make Happy Business!

- Cloud Native: Learn and embrace Cloud, Containers, IaaS, PaaS

- Cloud Scale: Static monitoring and planning is not how IT works today!

- Artificial Intelligence: Leverage machine learning and big data for better and faster performance advice!

- NoOps: Combine Cloud, Monitoring and AI to help your organization move from DevOps to NoOps!

Change is hard: I speak from my own experience!

When talking with conference attendees or delivering my Performance, DevOps and NoOps workshops, I very often sense “fear” of the inevitable change that is coming. I sense this fear especially amongst “traditional” performance engineers (experts in LoadRunner, SilkPerformer …) who seem to understand that the “good old” “Performance Center of Excellence” days will soon be over. Some engineers already find themselves seen as bottlenecks rather than as valuable quality gates in a faster-moving DevOps pipeline.

I keep getting emails and LinkedIn messages from friends I met over the years asking me for advice on what their next best career move should be, which technologies they should learn and whether they should pick up some more development skills.

Below you will find a list of things I tell them. It’s based on my own personal transformation from being a SilkPerformer expert (10 years ago) to working in an organization with a 1h code-to-production deployment cycle. It also includes things I see and hear when I’m out in the field, observing how others successfully master performance engineering today (and how they think it will evolve in the years to come).

#1 – Learn a new scripting language

If you haven’t learned a new language in the last two years, it is about time. My personal project was to learn Python. The best way for me to learn was to write the Dynatrace CLI (Command Line Interface). This was a great learning experience, and it also helped me automate many of the tasks I do on a day-to-day basis with Dynatrace, such as creating reports by calling the Dynatrace REST API and attaching them to Jira tickets.
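To give a flavor of that kind of automation, here is a minimal Python sketch using only the standard library. It is not the Dynatrace CLI: it only builds the HTTP requests without sending them. The base URLs, endpoint path, issue key and credentials are hypothetical placeholders. The `Api-Token` authorization scheme for Dynatrace and the Jira attachments endpoint with the `X-Atlassian-Token: no-check` header follow the respective public REST API docs, but check them against the versions you run.

```python
import urllib.request


def build_dynatrace_request(base_url: str, path: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request against a Dynatrace REST endpoint.

    Dynatrace API tokens go into the Authorization header using the 'Api-Token' scheme.
    The concrete endpoint `path` is up to you (hypothetical here).
    """
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{path.lstrip('/')}",
        headers={"Authorization": f"Api-Token {token}", "Accept": "application/json"},
        method="GET",
    )


def summarize(metrics: dict) -> str:
    """Turn a {metric_name: value} dict into a small plain-text report, sorted by name."""
    return "".join(f"{name}: {value}\n" for name, value in sorted(metrics.items()))


def build_jira_attachment_request(base_url: str, issue_key: str, filename: str,
                                  content: bytes, auth_header: str) -> urllib.request.Request:
    """Build (but do not send) a multipart POST that attaches `content` to a Jira issue."""
    boundary = "perf-report-boundary"
    body = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: text/plain\r\n\r\n"
    ).encode() + content + f"\r\n--{boundary}--\r\n".encode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/rest/api/2/issue/{issue_key}/attachments",
        data=body,
        headers={
            "Authorization": auth_header,
            # Jira blocks attachment uploads (XSRF check) unless this header is set
            "X-Atlassian-Token": "no-check",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
        method="POST",
    )
```

Actually sending a request is then a matter of `urllib.request.urlopen(req)`; keeping request construction separate from sending makes the logic easy to test without a live tenant.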

This project also forced me to understand Git and GitHub, which I hadn’t touched much before either. Whether it is Python, Ruby, Go or any other language, I am sure you have some tasks you still do manually. Pick a language you don’t already know and figure out how to automate your most manual, boring and time-consuming tasks. It’s a win-win situation!

#2 – Understand the power of APM

I understand that I am biased as I work for Dynatrace, an APM vendor. BUT – almost every session at the recent conferences I have been to referenced APM. The consensus was that: “If you don’t performance engineer with APM tools then you may as well not do any performance engineering!”

Why? Because our world is centered around Services, APIs, Applications and End Users. And modern APM solutions are delivering full end-to-end visibility into these components.

You may think that APM is a beast that is hard to install and configure and requires you to understand how to instrument your applications. That may have been true not too long ago, but this problem has been solved. Dynatrace solved it through our OneAgent technology, which automates installation and configuration regardless of your technology stack (Infrastructure, OS, Application Runtime, …). All you need to do is download a single file and run the install script. That’s it!

If you want to learn more about APM, I suggest you watch my 30-minute “What is Dynatrace and How to get Started” YouTube video. Just follow along by signing up for our SaaS-based trial, or ask for an On-Premise trial if you prefer.

#3 – Shift-Left: Automate Performance into CI/CD

Shift-Left means a lot of different things depending on who you ask. The best explanation I have heard is that you want to provide performance feedback to your developers as early and as often as possible, so that this feedback impacts the next line of code they write. Only if we deliver feedback that is frequent and actionable can we consider our work to be real performance engineering; otherwise we are just testing at the end of a development cycle.

There are two great examples of automating performance into CI/CD that I keep talking about:

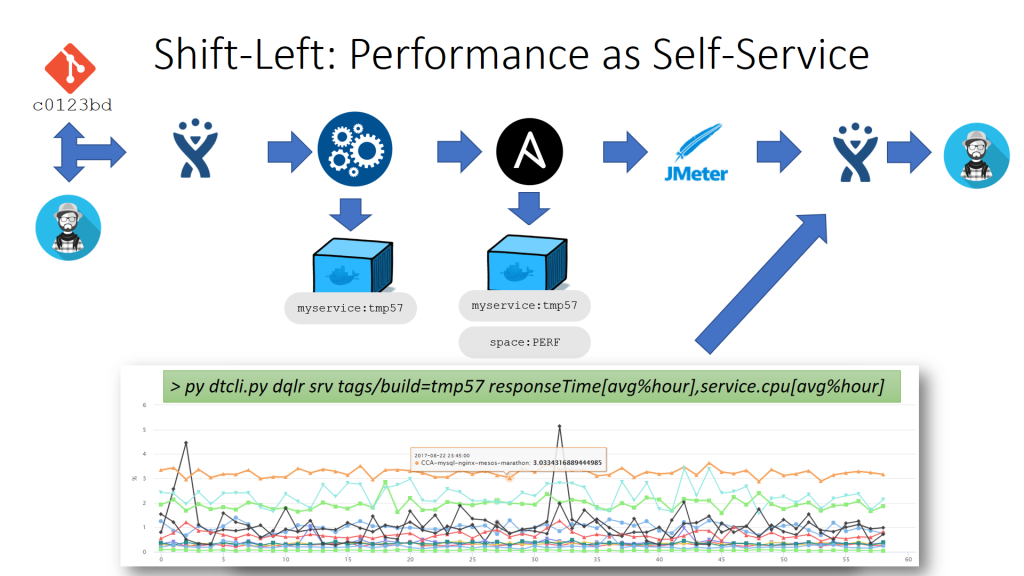

#1: Performance as Self-Service at PayPal

My friend Mark Tomlinson, currently working at PayPal, has fully automated the performance feedback loop and provides it as a self-service to developers. A developer simply creates a Jira ticket that references the new code he/she wants performance feedback on. Mark built an automated process that watches for these Jira tickets, deploys the referenced code change into a performance environment that is constantly under load, and then automatically pulls performance data from the load testing tool, as well as from Dynatrace, back into the Jira ticket. This is true Performance as a Self-Service.
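Mark’s implementation isn’t described in detail here, so the following is only a hypothetical Python sketch of the control flow: pick up new tickets that ask for performance feedback, deploy, collect results and post them back. The `perf-feedback` label and the `deploy`, `collect_results` and `post_comment` callables are assumptions; in a real setup they would wrap your Jira, deployment and monitoring APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Hypothetical label that marks a ticket as a self-service performance request
PERF_LABEL = "perf-feedback"


@dataclass
class Ticket:
    key: str
    code_ref: str  # branch, commit or build referenced in the ticket
    labels: List[str] = field(default_factory=list)


def process_requests(
    tickets: List[Ticket],
    done: Set[str],
    deploy: Callable[[str], str],
    collect_results: Callable[[str], Dict[str, float]],
    post_comment: Callable[[str, str], None],
) -> List[str]:
    """Handle every new perf-feedback ticket exactly once; return the keys processed."""
    processed = []
    for ticket in tickets:
        if PERF_LABEL not in ticket.labels or ticket.key in done:
            continue
        build_id = deploy(ticket.code_ref)     # deploy into the always-under-load environment
        results = collect_results(build_id)    # load test and APM metrics for that build
        body = "\n".join(f"{name}: {value}" for name, value in sorted(results.items()))
        post_comment(ticket.key, f"Performance feedback for {ticket.code_ref}:\n{body}")
        done.add(ticket.key)
        processed.append(ticket.key)
    return processed
```

Injecting the three integrations as callables keeps the loop testable with fakes and lets a scheduler call `process_requests` periodically with the latest search results.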

If you want to learn more, check out my webinar called “DevOps Around the World”, where I explain in more detail how Mark has implemented Performance as a Self-Service. Or join him at PERFORM, our Dynatrace User Conference, where he will be presenting this same story!

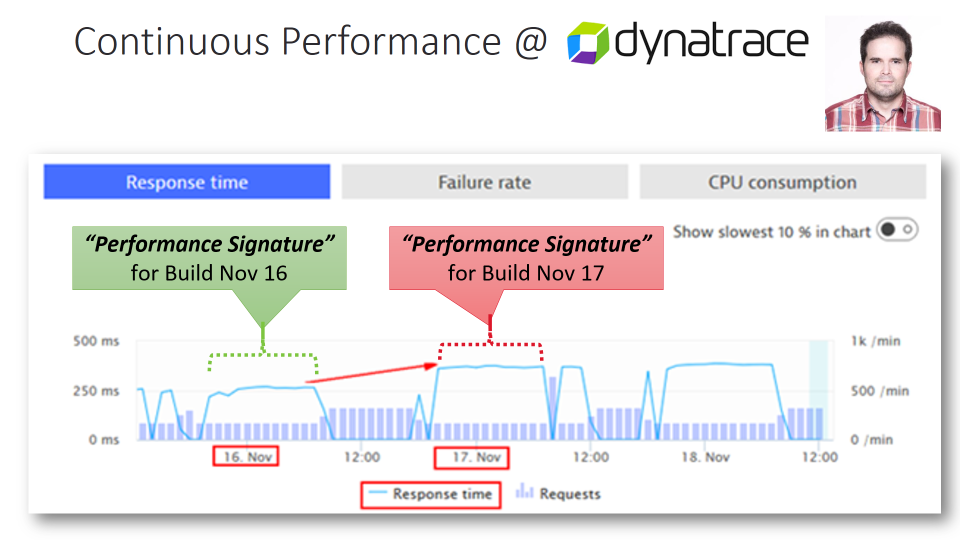

#2: Continuous Performance at Dynatrace

Thomas Steinmaurer, Performance Architect at Dynatrace, also hosts a performance environment that is constantly under load. He simulates different types of workloads throughout a 24-hour period. Every day he automatically deploys the latest nightly build into this environment, using the same deployment scripts that are used to deploy to prod. After the deployment he observes key performance metrics of the different components and services under load. He calls these metrics the “Performance Signature”. Because he does this every day, for every build, he can automatically compare these metrics across builds and automatically inform the development teams when the “Performance Signature” of the tested components shows a negative trend, e.g.: lower throughput, higher response time, higher failure rate or higher resource consumption.
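The comparison step can be sketched in a few lines of Python. This is not Thomas’s implementation; the metric names, the +1/-1 direction convention and the 10% tolerance are illustrative assumptions. The key idea is that every metric in a signature needs a direction, because higher throughput is good while higher response time, failure rate or CPU is bad.

```python
from typing import Dict, List

# Hypothetical signature metrics: +1 means "higher is better", -1 means "lower is better"
DIRECTION = {
    "throughput_rps": +1,
    "response_time_p95_ms": -1,
    "failure_rate_pct": -1,
    "cpu_pct": -1,
}


def regressions(previous: Dict[str, float], current: Dict[str, float],
                tolerance: float = 0.10) -> List[str]:
    """Return signature metrics that got worse by more than `tolerance` (relative change)."""
    worse = []
    for metric, direction in DIRECTION.items():
        if metric not in previous or metric not in current:
            continue  # metric not captured for one of the builds
        before, after = previous[metric], current[metric]
        if before == 0:
            # No baseline for a relative change: flag any movement in the bad direction
            if direction * (after - before) < 0:
                worse.append(metric)
            continue
        change = (after - before) / abs(before)
        if direction * change < -tolerance:
            worse.append(metric)
    return worse
```

Comparing against the previous build only catches step changes; to detect a slow drift you could compare against, say, the median of the last few builds instead.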

If you want to learn more, check out the following blog I wrote with Thomas earlier this year: Dynatrace on Dynatrace: Continuous Performance

#4 – Shift-Right: Influence Production Monitoring

I hear more and more people using the term Shift-Right, but its meaning does not yet seem as clear as that of Shift-Left. For me it means these things:

#1: Define and Test Production Monitoring and Alerting

Production monitoring, dashboards, and alerting must be a non-functional requirement for every feature that is being developed. Performance engineers provide their expertise in defining what should be monitored, which dashboards should be available, and how alerting works in production. Data collection, dashboards, and alerts must also be validated and tested, and that validation must be part of the “Definition of Done”!
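One way to make this enforceable is to keep a small monitoring definition next to each feature and check it in the pipeline. The sketch below is hypothetical: `MonitoringSpec` and its fields are illustrative and not part of any tool, and the check only verifies that the definition is complete, not that the dashboards or alerts actually work.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MonitoringSpec:
    """Hypothetical per-feature monitoring definition, versioned with the code."""
    feature: str
    metrics: List[str] = field(default_factory=list)
    dashboards: List[str] = field(default_factory=list)
    alerts: List[str] = field(default_factory=list)
    alerts_tested: bool = False  # set once alerts were triggered and verified in a test


def definition_of_done_gaps(spec: MonitoringSpec) -> List[str]:
    """List what is still missing before the feature counts as done."""
    gaps = []
    if not spec.metrics:
        gaps.append("no metrics defined")
    if not spec.dashboards:
        gaps.append("no dashboard defined")
    if not spec.alerts:
        gaps.append("no alerts defined")
    elif not spec.alerts_tested:
        gaps.append("alerts not validated")
    return gaps
```

A CI step could fail the build whenever `definition_of_done_gaps` returns a non-empty list.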

#2: Leverage Production Data for Pre-Prod Testing

At Neotys PAC we had several sessions about extracting real workload from production and applying it to the tests executed in pre-production. Live production monitoring data gives us everything we need to create more realistic, “production-like” test scenarios, e.g.:

- What are the top transactions executed?

- What is the load distribution during a business day, week, or month?

- What are the most common navigation paths through the app?

- What type of browsers, devices and bandwidths do real users have?

- What percentage of the traffic comes from bots?

Mark Tomlinson extracts workload information once a day from his Dynatrace production environment through the Dynatrace API. With this data he then automatically updates the workload configuration of his JMeter load testing scripts. This allows him to automatically calibrate the generated workload in pre-prod to match what is really happening in production.
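Mark’s scripts aren’t shown here, so this is a hypothetical Python sketch of the calibration idea: scale the per-transaction request counts observed in production into target rates for a pre-prod test, and write them as CSV. The input format and the `target_rps` parameter are assumptions; how your JMeter test consumes the file (for example through a CSV Data Set Config) depends on how the script is built.

```python
import csv
import io
from typing import Dict


def workload_mix(prod_counts: Dict[str, int], target_rps: float) -> Dict[str, float]:
    """Scale production transaction counts into per-transaction target rates.

    prod_counts: transaction name -> number of requests observed in production
    target_rps:  total requests per second the pre-prod test should generate
    """
    total = sum(prod_counts.values())
    if total == 0:
        raise ValueError("no production traffic observed")
    return {name: target_rps * count / total for name, count in prod_counts.items()}


def to_csv(mix: Dict[str, float]) -> str:
    """Render the mix as CSV, busiest transaction first."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["transaction", "target_rps"])
    for name, rps in sorted(mix.items(), key=lambda kv: -kv[1]):
        writer.writerow([name, f"{rps:.2f}"])
    return buf.getvalue()
```

Running this daily against fresh production counts keeps the test’s transaction mix in step with real usage, while `target_rps` stays a separate knob for how hard you want to push.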

#3: Performance Engineering in Production

I strongly believe that the amount of load testing executed in pre-production environments is going to decrease over the next couple of years, and that performance engineering will move into production in different flavors:

#3.1: Running tests on production hardware

This aspect is not that new. Instead of building a separate test environment, we simply use production hardware to run our load tests: carving out servers during off-peak hours, running tests against the DR environment, or temporarily launching a duplicate prod environment using the same infrastructure-as-code automation you use for production.

Depending on the application, you might be able to duplicate production traffic into your “production test environment” or generate load “the traditional way”!

#3.2: Performance Experiments with Real Traffic

More and more application architectures allow us to deploy canary releases or leverage feature flags to expose new functionality to only part of the real user population. Where that is possible, performance engineers can optimize deployment and configuration, and analyze performance issues, once the new code is deployed directly into production. Tested by real users!
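A common way to expose a feature to a fixed share of users is deterministic bucketing: hash a stable user ID together with the feature name and compare the result to the rollout percentage, so a user always lands in the same group. The Python sketch below is a generic illustration with hypothetical names, not the API of any particular feature-flag product.

```python
import hashlib


def in_canary(user_id: str, feature: str, percent: float) -> bool:
    """Deterministically decide whether a user sees the canary version of a feature.

    Hashing "feature:user" gives every user a stable bucket in [0, 10000), so the
    same user always gets the same answer, and different features split users
    independently of each other.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100
```

Because the assignment is stable, raising `percent` from 5 to 20 only adds users to the canary group; nobody who already saw the new code is switched back.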

The key requirement is real-time monitoring: both Real User Monitoring and Full-Stack APM. Performance Engineers work hand in hand with the Application Architects and DevOps Teams. In case of performance problems, optimization steps must be executed live in production. The worst-case scenario is rolling back a deployment; however, thanks to feature flags and canary releases, the time this takes and the risk involved are minimized. Many of these Performance Engineering optimization steps can also be fully automated. Here we start talking about Auto-Mitigation, Auto-Optimization or Self-Healing Systems. To learn more, check out my recent blog post on Auto-Mitigation vs Self-Healing.
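As a minimal illustration of auto-mitigation, the hypothetical Python sketch below compares the canary’s health with the baseline and switches the feature flag off when the canary is clearly worse. The thresholds (1 percentage point more errors, 20% slower p95) and the in-memory `flags` dict are assumptions; a real system would read the metrics from monitoring and call a feature-flag service.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Health:
    error_rate: float  # fraction of failed requests, 0.0 to 1.0
    p95_ms: float      # 95th percentile response time in milliseconds


def should_mitigate(baseline: Health, canary: Health,
                    max_error_delta: float = 0.01, max_latency_ratio: float = 1.2) -> bool:
    """True if the canary has clearly more errors or a clearly slower p95 than the baseline."""
    too_many_errors = canary.error_rate - baseline.error_rate > max_error_delta
    too_slow = canary.p95_ms > baseline.p95_ms * max_latency_ratio
    return too_many_errors or too_slow


def auto_mitigate(flags: Dict[str, bool], feature: str,
                  baseline: Health, canary: Health) -> bool:
    """Switch the feature flag off when the canary looks unhealthy; return True if it acted."""
    if flags.get(feature) and should_mitigate(baseline, canary):
        flags[feature] = False
        return True
    return False
```

Turning off a flag is much cheaper than a full rollback, which is exactly why feature flags reduce the time and risk involved.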

#5 – Get familiar with the latest Cloud & Container Tech

If you haven’t launched a server in the cloud or deployed a Docker container yet, put it on your to-do list for this week. I personally had a hard time getting started with launching EC2 instances on AWS. My biggest fear was working with Linux, as I have worked mainly with Windows for most of my professional life. I started by following the AWS tutorials, where I learned how easy it is to sign up for a free AWS account, launch your first EC2 instances and connect remotely to those Linux machines.

I picked AWS because I volunteered to prepare an internal training course that walks you through the basics of AWS and how to monitor AWS with Dynatrace. I ended up putting that course on GitHub so that others can benefit from it. If you want to get your feet wet with Cloud, check out my AWS Monitoring Tutorial. I also recently extended it with more labs. Instead of just launching Linux machines, I also walk you through deploying applications, services, and containers.

#6 – Use AI to your advantage

AI (Artificial Intelligence) is one of the most hyped words this year. Many tool vendors are claiming that they leverage AI, and many AI vendors claim that their solution is the only true AI.

Whether we want to call it AI, Machine Learning, or just “analyzing a lot of data faster, in a meaningful way, fully automated and accessible via REST” doesn’t matter. What matters is that Performance Engineering can leverage the recent advances in that space. Analyzing performance data has always consumed a lot of our time as performance engineers. Today, and even more so in the coming years, we will have even more data to analyze. The only way to sift through the noise and find anomalies and patterns is by leveraging the advances the industry has made around AI.
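To make “find anomalies” concrete, here is a deliberately simple Python baseline: flag a data point when it deviates by more than three standard deviations from the preceding window of values. This is a textbook rolling z-score, not how Dynatrace AI or any other vendor’s engine works; the window size and threshold are assumptions. It also shows the limits of plain statistics: this check knows nothing about the dependencies between data points.

```python
import statistics
from typing import List, Sequence


def anomalies(values: Sequence[float], window: int = 10, threshold: float = 3.0) -> List[int]:
    """Return indexes whose value deviates more than `threshold` standard deviations
    from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: any change at all is unusual
            if values[i] != mean:
                flagged.append(i)
            continue
        if abs(values[i] - mean) / stdev > threshold:
            flagged.append(i)
    return flagged
```

For example, in a response time series of roughly 100 ms with a single 250 ms spike, only the spike’s index is flagged.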

Ask your tool vendors what they are doing around AI. Make sure they provide ways not only to analyze more data but also to understand the dependencies and relationships between individual data points. Check out their automation APIs, as you will need to automate feeding data from your different environments into the AI engine and pulling the results back into your pipeline to feed your quality gates.

At Neotys PAC, I did a session called Dynatrace AI Demystified. I hope it serves you well as a benchmark for what you should expect from your future toolset when it comes to AI.

#7 – NoOps: No longer a buzzword

The first time I used the word NoOps, I got aggressive pushback from my discussion partner. He was in Operations, and I guess he feared I was talking about optimizing his job away.

The more I talk about NoOps, sharing our own Dynatrace NoOps story, the more feedback I get that this really is the next step after mastering Continuous Delivery and Continuous Operations. NoOps is all about smart automation of traditional Operations tasks, e.g.: provisioning resources, configuration changes, restarts, dealing with outages and other problems …

If you want to learn more then check out my blog on Auto-Mitigation vs Self-Healing. That will be a good starting point for your research.

Change Now! Tomorrow might be too late!

I hope these thoughts help you make the next move in your career as a performance engineer. I understand that not everyone in our industry thinks the same way I do, but I believe many understand that change is necessary. Whether you agree or disagree, let me know. Discuss with me on Twitter via @grabnerandi.
