Distributed cloud systems are difficult to manage without the proper tools. Learn how comprehensive log management helps improve security, operational efficiency, and uptime.

What is log management?

Log management is the practice of continuously gathering, storing, processing, and analyzing data from various programs or applications. In log management, logs are leveraged to optimize system performance, identify technical issues, manage resources efficiently, enhance security, and improve compliance. Most infrastructure and applications generate logs that need log management. In cloud-native environments, there can also be dozens of additional services and functions all generating data from user-driven events.

A log is a computer-generated file that captures activities within the operating system or software applications. Log management involves activities such as log collection, aggregation, parsing, storage, analysis, search, archiving, and disposal.

Key components of log management include:

- Collection: Aggregating data from different sources; Dynatrace, for example, collects logs through OneAgent, APIs, or OpenTelemetry

- Observability: Tracking events and activities across logs, metrics, user sessions, and distributed traces

- Analytics: Reviewing logs, metrics, and traces to identify bugs or security threats

- Retention: Designating how long log data should be kept

- Search: Filtering and searching data across all logs

- Reporting: Automating reporting for operational performance, security, and compliance
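The search component can be pictured as a filter over structured log records. Here is a minimal sketch in Python, assuming each record is a dict with hypothetical `timestamp`, `level`, `service`, and `message` fields. Real platforms expose their own query languages, so this only illustrates the idea.

```python
from datetime import datetime

# Hypothetical structured log records; field names are illustrative only.
LOGS = [
    {"timestamp": datetime(2024, 5, 1, 10, 0, 0), "level": "INFO", "service": "checkout", "message": "order created"},
    {"timestamp": datetime(2024, 5, 1, 10, 0, 5), "level": "ERROR", "service": "payment", "message": "card declined"},
    {"timestamp": datetime(2024, 5, 1, 10, 1, 0), "level": "ERROR", "service": "checkout", "message": "payment timeout"},
]


def search(records, level=None, service=None, text=None, since=None):
    """Return the records that match every filter that is not None."""
    results = []
    for r in records:
        if level is not None and r["level"] != level:
            continue
        if service is not None and r["service"] != service:
            continue
        # Case-insensitive substring match on the message text.
        if text is not None and text.lower() not in r["message"].lower():
            continue
        if since is not None and r["timestamp"] < since:
            continue
        results.append(r)
    return results


if __name__ == "__main__":
    for r in search(LOGS, level="ERROR", text="payment"):
        print(r["service"], r["message"])
```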

Metrics, logs, and traces make up three vital prongs of modern observability, which is critical to ensuring high performance, security, and a positive user experience for cloud-native applications and services. Event logging and software tracing, another key component of log management, help application developers and operations teams understand what’s happening throughout their application flow and system. Together, these three sources of data help IT pros identify the presence and causes of performance problems, user experience issues, and potential security threats.

Log management tools help organizations manage the high volume of log data generated across the enterprise by determining what data needs to be logged, for how long it should be saved, and how data should be disposed of when no longer needed.

Dynatrace log management aggregates all log data into the Dynatrace Grail data lakehouse. This provides visibility into workloads, applications, and multiple environments, surfaces forgotten assets to help optimize cloud spending, and improves compliance posture. Log management is crucial for meeting compliance requirements such as FISMA, HIPAA, SOX, GLBA, PCI DSS, and GDPR. Log management tools are essential for monitoring system performance, enhancing security measures, troubleshooting issues effectively, optimizing resource usage, and providing real-time insights into operations.

While logging is the act of recording logs, organizations extract actionable insights from these logs with log monitoring, log analytics, and log management.

The types of logs

Understanding the various types of logs is crucial for effective monitoring, troubleshooting, and security in log management. Here, we’ll explore the primary categories of logs and their significance.

1. System Logs

The operating system generates system logs, often referred to as syslogs after the syslog standard widely used on Unix-like systems. These logs provide insights into the system’s operations, including kernel activities, boot processes, and hardware events. They’re essential for diagnosing system-level issues and ensuring the overall health of the infrastructure.

2. Application Logs

Software applications produce application logs. They record events such as user actions, application errors, and transaction details. These logs are invaluable for developers and IT teams to debug issues, monitor application performance, and understand user behavior.
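Application logs are much easier for log management tools to parse when they are structured. Below is a minimal sketch using Python’s standard `logging` module to emit one JSON object per line. The logger name and the `order_id` and `user` fields are hypothetical.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge extra context passed as extra={"ctx": {...}}, if present.
        ctx = getattr(record, "ctx", None)
        if ctx:
            payload.update(ctx)
        return json.dumps(payload)


def build_logger(stream):
    logger = logging.getLogger("shop.app")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace handlers so repeated calls don't duplicate output
    logger.propagate = False
    return logger


if __name__ == "__main__":
    buf = io.StringIO()
    log = build_logger(buf)
    log.info("order created", extra={"ctx": {"order_id": "A-1001", "user": "u42"}})
    log.error("payment failed", extra={"ctx": {"order_id": "A-1001"}})
    print(buf.getvalue())
```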

3. Security Logs

Security logs capture security-related events, such as login attempts, access control changes, and potential security breaches. These logs are critical for identifying and responding to security incidents, ensuring compliance with regulations, and maintaining the system’s integrity.

4. Audit Logs

Audit logs, also known as audit trails, track changes and activities within a system or application. They provide a chronological record of who did what and when. Audit logs are essential for compliance auditing, forensic analysis, and ensuring accountability within an organization.

5. Network Logs

Network logs include data from network devices like routers, switches, and firewalls. They capture information about network traffic, connection attempts, and data transfers. Network logs are vital for monitoring network performance, detecting anomalies, and troubleshooting connectivity issues.

6. Event Logs

Event logs are a broad category encompassing several types of logs generated by different components of an IT environment. They include system events, application events, and security events. Event logs provide a comprehensive view of the activities and status of the entire IT ecosystem.

Understanding these different types of logs and their purposes is fundamental for effective log management. Organizations can leverage these logs to enhance operational efficiency, security posture, and compliance readiness.

The benefits of log management

In today’s digital landscape, effective log management is crucial for maintaining the health, security, and performance of IT systems. Here are some key benefits:

- Enhanced Security

Logs provide a detailed record of system activities, which is invaluable for detecting and responding to security concerns. By monitoring logs, organizations can detect unusual patterns, unauthorized access attempts, and potential breaches in real time. This proactive approach helps mitigate risks and supports compliance with security standards.
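Detecting unauthorized access attempts often starts with simple rules. Below is a minimal sketch of one such rule in Python: a sliding-window count of failed logins per source IP. The event tuple shape, the threshold, and the window size are illustrative assumptions, not values taken from any particular product.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_bruteforce(events, threshold=5, window=timedelta(minutes=1)):
    """Flag source IPs with `threshold` or more failed logins inside any `window`.

    `events` is an iterable of (timestamp, source_ip, success) tuples,
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, success in events:
        if success:
            continue
        q = recent[ip]
        q.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while q and ts - q[0] > window:
            q.popleft()
        if len(q) >= threshold:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    start = datetime(2024, 5, 1, 12, 0, 0)
    events = [(start + timedelta(seconds=10 * i), "203.0.113.7", False) for i in range(5)]
    events.append((start + timedelta(seconds=55), "198.51.100.2", True))
    print(detect_bruteforce(sorted(events)))
```

In practice, rules like this are tuned per environment, because a threshold that is too low floods teams with false positives.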

- Improved Troubleshooting and Debugging

Logs are the first place to look for clues when systems encounter issues. They offer insights into what went wrong, when, and why. This information is essential for diagnosing problems quickly and accurately, reducing downtime, and improving system reliability. Developers and IT teams can use logs to trace errors, understand their root causes, and implement effective solutions.

- Operational Efficiency

Log management streamlines the process of monitoring and maintaining IT infrastructure. Automated log analysis tools can sift through vast amounts of data to highlight critical events and trends. This automation reduces the manual effort required to manage logs, allowing IT teams to focus on more strategic tasks. Additionally, logs can help in capacity planning by providing data on system usage and performance trends.

- Compliance and Auditing

Many industries are subject to strict regulatory requirements regarding data security and privacy. Log management helps organizations maintain compliance by providing a clear audit trail of all system activities. This transparency is essential for demonstrating adherence to regulations and conducting thorough audits.

- Performance Optimization

Logs contain valuable performance data that can be analyzed to optimize system performance. Organizations can identify bottlenecks, optimize resource allocation, and improve overall system efficiency by examining logs. This continuous monitoring and optimization lead to better user experiences and more efficient operations.

Why is a log management tool important?

Distributed cloud systems are complex, dynamic, and difficult to manage without the proper tools. Log management is a key factor in successfully deploying and operating large-scale cloud applications or resources, as cloud-native systems are often more difficult to observe than on-premises solutions. With comprehensive logging support, security, operational efficiency, and application uptime all improve.

Two major benefits of properly managed logs are their availability and searchability. Optimally stored logs are easy for DevOps, SecOps, and other IT teams to access, so those teams can quickly and efficiently find the answers they need. Essentially, log management tools organize logs so that log searches and queries run as efficiently as possible.
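One well-known technique for fast log search, used for example by Lucene-based search engines, is the inverted index: it maps each term to the log lines that contain it, so a query never has to scan every line. Not every platform works this way, so treat this as an illustration of the general idea. Below is a toy in-memory sketch in Python, assuming simple lowercase tokenization. The `LogIndex` class is hypothetical, and production systems add sharding, compression, and time partitioning.

```python
import re
from collections import defaultdict

# Tokens are runs of lowercase letters, digits, and _ . - characters.
TOKEN = re.compile(r"[a-z0-9_.-]+")


class LogIndex:
    """Toy inverted index: maps each token to the IDs of log lines containing it."""

    def __init__(self):
        self.lines = []
        self.postings = defaultdict(set)

    def add(self, line):
        line_id = len(self.lines)
        self.lines.append(line)
        for token in TOKEN.findall(line.lower()):
            self.postings[token].add(line_id)
        return line_id

    def search(self, query):
        """Return lines containing every token in the query (AND semantics)."""
        tokens = TOKEN.findall(query.lower())
        if not tokens:
            return []
        # .get() avoids inserting empty entries into the defaultdict.
        ids = set.intersection(*(self.postings.get(t, set()) for t in tokens))
        return [self.lines[i] for i in sorted(ids)]


if __name__ == "__main__":
    idx = LogIndex()
    idx.add("ERROR payment-service timeout calling bank-api")
    idx.add("INFO checkout-service order created")
    idx.add("ERROR checkout-service timeout calling payment-service")
    print(idx.search("error timeout payment-service"))
```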

How log management systems optimize performance and security

Logs are among the most effective ways to gain comprehensive visibility into your network, operating systems, and applications.

Logs provide detailed information about the data that traverses a network and about which parts of an application run most often. This helps organizations identify application performance bottlenecks. Developers can use logs, for example, to identify areas of code that are executed more frequently than needed or run longer than expected. Teams can also parse logs to show the application owner which actions users take most often.
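This kind of performance analysis usually comes down to aggregating fields that applications already log. Below is a minimal sketch in Python, assuming each request log carries hypothetical `endpoint`, `duration_ms`, and `action` fields. It counts calls, averages durations per endpoint, and lists the most common user actions.

```python
from collections import Counter, defaultdict
from statistics import mean


def summarize(records):
    """Aggregate hypothetical request logs shaped like
    {"endpoint": str, "duration_ms": float, "action": str}.

    Returns (calls per endpoint, mean duration per endpoint, top 3 actions).
    """
    calls = Counter()
    durations = defaultdict(list)
    actions = Counter()
    for r in records:
        calls[r["endpoint"]] += 1
        durations[r["endpoint"]].append(r["duration_ms"])
        actions[r["action"]] += 1
    avg = {ep: mean(ds) for ep, ds in durations.items()}
    return calls, avg, actions.most_common(3)


def slow_endpoints(avg_ms, budget_ms):
    """Return endpoints whose mean latency exceeds a latency budget, sorted by name."""
    return sorted(ep for ep, ms in avg_ms.items() if ms > budget_ms)
```

A mean can hide slow outliers, so many teams track percentiles such as p95 or p99 latency alongside it.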

When it comes to security, logs can capture attack indicators, such as anomalous network traffic or unusual application activity outside of expected hours. Attackers often try to erase traces of their activity from logs. For that reason, audit logs that record high-value and potentially problematic operations, such as the transfer of data outside the organization, need to be stored immediately and protected from tampering.
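One way to make audit logs tamper-evident is to chain entries with cryptographic hashes, so that each record commits to the one before it. This is shown as an illustration, not as how any specific product does it. Below is a minimal sketch using Python’s standard `hashlib`, with hypothetical `who`/`what`/`when` fields.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(chain, entry):
    """Append an audit entry whose hash covers the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = json.dumps(entry, sort_keys=True)
    digest = hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()
    chain.append({"entry": entry, "prev": prev_hash, "hash": digest})


def verify(chain):
    """Recompute every hash. Editing, reordering, or deleting any entry other
    than the last one makes verification fail."""
    prev_hash = GENESIS
    for link in chain:
        body = json.dumps(link["entry"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()
        if link["prev"] != prev_hash or link["hash"] != expected:
            return False
        prev_hash = link["hash"]
    return True
```

A chain alone does not catch truncation of the newest entries, or a full rewrite by someone who can recompute every hash. Real deployments therefore also store the latest hash somewhere separate or write to immutable storage.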

Log management challenges and how to face them

Log management faces two primary challenges in cloud environments: scope and variability. First, midsize and large organizations now generate and collect terabytes of data per day from thousands of events, and this trend shows no signs of slowing down. As a result, logging tools must record large event volumes in real time. Scalable storage is a hallmark of cloud applications, but real-time event recording, tracking, and indexing require specialized tools, especially for large-scale deployments with petabytes of data.

Second, log variability creates another challenge for modern DevOps and SecOps professionals. Logs are not one-size-fits-all; each must suit the specific tool or metric it reports on. For instance, a user login event produces a log format that’s different from that of a cloud resource utilization alert. Log management tools are purpose-built to help generate and organize the proper types of logs for each situation. The ideal solution automatically keeps these different types of logs in proper context with other data and dependencies.
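A common way to handle this variability is normalization: map each input format onto one shared schema so that records from different sources can be compared. Below is a minimal Python sketch, assuming two hypothetical input formats. One is a JSON line with `time`/`severity`/`source`/`msg` keys, and the other is a space-separated text line. Real pipelines typically use configurable parsing rules rather than hard-coded ones.

```python
import json
import re

# Hypothetical plain-text format: "2024-05-01T10:00:00Z WARN auth: login failed"
TEXT_LINE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<source>[\w.-]+): (?P<message>.*)$")


def normalize(raw):
    """Map a JSON or plain-text log line onto one common schema."""
    raw = raw.strip()
    if raw.startswith("{"):
        data = json.loads(raw)
        return {
            "timestamp": data.get("time"),
            "level": str(data.get("severity", "INFO")).upper(),
            "source": data.get("source", "unknown"),
            "message": data.get("msg", ""),
        }
    m = TEXT_LINE.match(raw)
    if m:
        return {
            "timestamp": m["ts"],
            "level": m["level"],
            "source": m["source"],
            "message": m["message"],
        }
    # Keep unparseable lines instead of silently dropping them.
    return {"timestamp": None, "level": "UNKNOWN", "source": "unknown", "message": raw}
```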

The log management process

Log management is a critical aspect of maintaining the health, security, and performance of IT systems. It involves collecting, storing, analyzing, and monitoring log data generated by various systems and applications. Here’s a concise overview of the log management process:

1. Log Collection

The first step in log management is gathering log data from diverse sources such as servers, applications, network devices, and security systems. This data can include error messages, transaction records, user activities, and system events.
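Once logs arrive from several sources, a collector typically needs to interleave them into a single timeline. Below is a minimal Python sketch using the standard library’s `heapq.merge`, assuming each source is already sorted by timestamp. The tuple layout and source names are illustrative. Clock skew between hosts can still misorder events, so host clocks need to be synchronized (for example with NTP) for the merged order to be meaningful.

```python
import heapq
from datetime import datetime


def merge_sources(*sources):
    """Merge per-source log streams into one time-ordered stream.

    Each source yields (timestamp, source_name, message) tuples and must
    already be sorted by timestamp. heapq.merge consumes its inputs lazily,
    so sources can be long-running iterators.
    """
    return heapq.merge(*sources, key=lambda rec: rec[0])


if __name__ == "__main__":
    web = [(datetime(2024, 5, 1, 10, 0, 1), "web", "GET /cart"),
           (datetime(2024, 5, 1, 10, 0, 4), "web", "GET /pay")]
    db = [(datetime(2024, 5, 1, 10, 0, 2), "db", "slow query")]
    fw = [(datetime(2024, 5, 1, 10, 0, 0), "firewall", "allow 443")]
    for ts, src, msg in merge_sources(web, db, fw):
        print(ts.isoformat(), src, msg)
```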

2. Log Storage

Once collected, logs need to be stored securely and efficiently. The storage solution should support high availability, scalability, and data integrity. Options include on-premises storage solutions, cloud-based services, and hybrid approaches. Proper indexing and compression techniques are essential to manage storage costs and improve retrieval speeds.
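Compression pays off for log storage because log lines are highly repetitive. Below is a minimal sketch with Python’s standard `gzip` and `json` modules that batches hypothetical records as newline-delimited JSON. Actual compression ratios depend heavily on the data and the codec.

```python
import gzip
import json


def compress_batch(records):
    """Serialize records as newline-delimited JSON and gzip the batch."""
    raw = "\n".join(json.dumps(r, sort_keys=True) for r in records).encode("utf-8")
    return raw, gzip.compress(raw)


def decompress_batch(blob):
    """Reverse compress_batch: gunzip and parse each line back into a dict."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines()]


if __name__ == "__main__":
    # Hypothetical, highly repetitive records, similar in shape to real logs.
    batch = [{"level": "INFO", "service": "checkout", "message": f"order {i} created"} for i in range(1000)]
    raw, packed = compress_batch(batch)
    print(f"raw={len(raw)} bytes, gzip={len(packed)} bytes, ratio={len(raw) / len(packed):.1f}x")
```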

3. Log Analysis

Analyzing log data is crucial for spotting patterns, detecting anomalies, and troubleshooting issues. Advanced analytics tools and techniques, such as machine learning and artificial intelligence, can be employed to gain deeper insights.
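A simple statistical baseline conveys the core idea behind flagging irregularities. Below is a minimal Python sketch that applies a z-score test to per-interval log counts, such as errors per minute. It is a deliberately simple stand-in, not the machine learning or AI techniques mentioned above. The threshold of 3 is a common rule of thumb, not a universal setting.

```python
from statistics import mean, pstdev


def anomalous_intervals(counts, threshold=3.0):
    """Return the indexes of intervals whose count lies more than `threshold`
    population standard deviations from the mean (a z-score test)."""
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:
        return []  # a perfectly flat series has nothing that stands out
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical errors-per-minute counts with one spike.
    per_minute_errors = [12, 9, 11, 10, 13, 8, 11, 10, 12, 9, 10, 11, 95, 10, 12]
    print(anomalous_intervals(per_minute_errors))
```

A large spike also inflates the standard deviation, so short series may need a lower threshold or a more robust statistic, such as the median absolute deviation.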

4. Log Monitoring

Continuous monitoring of logs helps detect security threats, performance bottlenecks, and operational issues in real time. Setting up alerts and notifications ensures that critical events are promptly addressed. Integration with Security Information and Event Management (SIEM) systems enhances the ability to correlate events and respond to incidents swiftly.
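At its simplest, alerting matches rules against incoming records and routes any matches to a notification channel. Below is a minimal Python sketch. `AlertRule`, `evaluate`, the rule names, and the `notify` callback are all hypothetical; in a real setup, `notify` might forward to a pager, a chat tool, or a SIEM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AlertRule:
    name: str
    severity: str
    matches: Callable[[Dict], bool]  # predicate over one structured log record


def evaluate(records: List[Dict], rules: List[AlertRule],
             notify: Callable[[str, str, Dict], None]) -> int:
    """Run every rule against every record and call `notify` on each match.

    Returns the number of notifications sent.
    """
    sent = 0
    for record in records:
        for rule in rules:
            if rule.matches(record):
                notify(rule.severity, rule.name, record)
                sent += 1
    return sent


# Hypothetical rules; field names and values are illustrative.
RULES = [
    AlertRule("payment-errors", "critical",
              lambda r: r.get("service") == "payment" and r.get("level") == "ERROR"),
    AlertRule("disk-nearly-full", "warning",
              lambda r: r.get("disk_used_pct", 0) >= 90),
]
```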

5. Log Retention and Compliance

Maintaining logs for a specified period is often required for compliance with industry regulations and standards. Implementing a log retention policy ensures that logs are archived and purged according to legal and organizational requirements. This also helps manage storage costs and maintain system performance.
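A retention policy can be expressed as data plus a small function that decides, for each record, whether to keep it in fast storage, archive it, or purge it. Below is a minimal Python sketch. The log types and day counts are hypothetical placeholders, not recommendations for any specific regulation.

```python
from datetime import datetime, timedelta

# Hypothetical policy: log type -> (days in fast "hot" storage, total days retained).
POLICY = {
    "application": (7, 30),
    "security": (30, 365),
    "audit": (90, 7 * 365),
}
DEFAULT_POLICY = (7, 14)  # applied to any log type not listed above


def apply_retention(records, now):
    """Sort records into hot, archive, and purge buckets by age and log type."""
    hot, archive, purge = [], [], []
    for r in records:
        hot_days, total_days = POLICY.get(r["type"], DEFAULT_POLICY)
        age = now - r["timestamp"]
        if age <= timedelta(days=hot_days):
            hot.append(r)
        elif age <= timedelta(days=total_days):
            archive.append(r)
        else:
            purge.append(r)
    return hot, archive, purge
```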

Effective log management is essential for proactive IT operations, robust security posture, and regulatory compliance. By following these steps, organizations can harness the full potential of their log data to drive operational excellence and safeguard their digital assets.

Log management best practices for improved performance

Beyond the obvious use cases of organization, searchability, and real-time monitoring, log management tools provide ways to use these records to improve organization-wide performance. For instance, powerful log breakdown and search features enable IT pros to use logs to find other logs. If IT teams identify a concerning event but can’t find the root cause by looking at the incident log, log management dashboards can show other logs connected to that event or service. What else did the initial event affect? How did those secondary events affect performance or security?
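Using logs to find other logs usually relies on a shared correlation identifier, such as a trace or request ID that every service writes into its log lines. Standards such as W3C Trace Context and OpenTelemetry define how such IDs propagate between services. Below is a minimal Python sketch, assuming hypothetical records with `ts`, `trace_id`, `service`, and `message` fields; the field names follow no particular product’s schema.

```python
from collections import defaultdict


def related_logs(records, incident, key="trace_id"):
    """Return every record that shares the incident's correlation ID, in time order."""
    cid = incident.get(key)
    if cid is None:
        return []
    return sorted((r for r in records if r.get(key) == cid), key=lambda r: r["ts"])


def services_affected(records, incident, key="trace_id"):
    """Group the related records by service to show what else the event touched."""
    by_service = defaultdict(list)
    for r in related_logs(records, incident, key):
        by_service[r["service"]].append(r["message"])
    return dict(by_service)
```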

Similarly, a comprehensive logging and management platform provides mechanisms for custom recording and alerting. These let IT teams detect event patterns and target specific resources to increase visibility in areas of concern.

The keys to a good log management system are flexibility, customizability, and depth of observability.

Comparing log monitoring, log analytics, and log management

People often confuse log monitoring, log analytics, and log management, but each activity brings a unique capability to the table. Log monitoring is the process of continuously observing log additions and changes, tracking log gathering, and managing the ways in which logs are recorded.

Log analytics, on the other hand, is the process of using the gathered logs to extract business or operational insight. These two processes feed into one another. Log monitoring enables the collection of log data, and log analysis promotes intelligent, data-driven decision-making.

Log management brings together log monitoring and log analysis, a combination commonly referred to as log management and analytics. Log management covers the collection and storage of logs, as well as their aggregation, analysis, long-term retention, and eventual destruction. In short, log management is how DevOps professionals and other concerned parties interact with and manage the entire log lifecycle.

Automatic and intelligent log detection

Log management is critical to understanding system performance, user issues, and application security vulnerabilities. But log data can create another data silo for IT pros to manage and manually correlate with other data.

Observability is a multifaceted discipline that can be split into three components: metrics, traces, and logs. Metrics are measurements that teams collect for further analysis and indexing; they include user data, resource usage, application performance, and more. Traces record the observable ways in which cloud services interact with one another. User verification, payment validation, and other microservices may interact with each other in ways that are difficult to track without ecosystem-wide observability support.

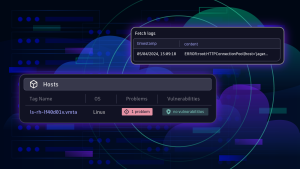

Logging solutions such as Dynatrace provide automated, intelligent tools for log gathering, ingestion, and dependency mapping, which give DevOps, SecOps, and other professionals full observability into complex cloud ecosystems.

The Dynatrace platform ingests logs, metrics, and traces, and it also provides real-time dependency mapping of all applications, services, and other resources. Combined with the contextualized, real-time software intelligence of Davis, the Dynatrace AI engine, the platform provides precise answers for IT teams that need to identify issues proactively and at enterprise scale.

For more information on the use of logs and how Dynatrace increases observability, check out our power demo.
