Microservices and microservices architecture are now the de facto standard for developing modern software. In response to the exponential rise in demand for digital services, software development practices must be fast, flexible, and scalable. Microservices fit the bill.

By building applications in small functional bits rather than maintaining a monolithic codebase and resource pool, developers can keep pace with innovation. In terms of adoption, a 2020 survey found that 61% of polled organizations have been using microservices for a year or longer, with 92% reporting a successful experience.

What are microservices?

Microservices are small, flexible, modular units of software that fit together with other services to deliver complete applications. An application is a collection of independent services that work together to perform a business function. This method of structuring, developing, and operating software as a collection of smaller independent services is known as a microservices architecture.

Using a microservices approach, DevOps teams split applications into functional services that expose application programming interfaces (APIs) instead of shipping applications as one large, collective unit. APIs connect services with core functionality, allowing applications to communicate and share data.

The primary advantage is that the DevOps teams responsible for developing and maintaining these services can operate in smaller units, making the scope of each project more manageable.
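To make the idea of services communicating through APIs concrete, here is a minimal sketch in Python that uses only the standard library. The price service, its `/prices/<sku>` route, and the sample data are hypothetical names chosen for illustration; a production service would normally use a web framework and run in its own container.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory data owned exclusively by this one service.
PRICES = {"sku-1": 9.99, "sku-2": 24.50}


class PriceHandler(BaseHTTPRequestHandler):
    """Serves GET /prices/<sku> and returns 404 for everything else."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/prices/") and sku in PRICES:
            self._send(200, {"sku": sku, "price": PRICES[sku]})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging to keep the example quiet


def start_price_service():
    """Start the service on a free local port and return the server object."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_price(host, port, sku):
    """What a consuming service does: call the API rather than import the code."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/prices/{sku}")
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_price_service()
    host, port = server.server_address
    print(fetch_price(host, port, "sku-1"))
    server.shutdown()
    server.server_close()
```

The consumer only knows the host, port, and route. The price data and the handler logic could change, or be rewritten in another language, without the caller noticing as long as the API stays the same.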

Here are a few common features:

- Highly maintainable and testable. Microservices support agile development and rapid service deployment.

- Loosely coupled. With minimal dependencies, changes in the design, implementation, or behavior in one service will not affect other services.

- Autonomous. Each service internally controls its own logic.

- Independently deployable. Code can be written in different languages and updated in one service without affecting the full application.

- Business-focused. Organizations deploy microservices based on business demands. Additionally, they can use them as a building block for additional deployments.

Microservices typically run on container platforms such as Docker and Kubernetes, or on cloud-native function-as-a-service (FaaS) offerings, including AWS Lambda, Azure Functions, and Google Cloud Functions, which help manage and automate them.

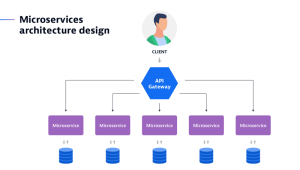

What is microservices architecture?

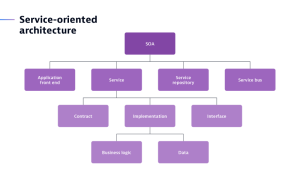

Microservices architecture is a cloud-native architectural approach used to build applications from independently deployable microservices. It is often described as an evolution of service-oriented architecture (SOA).

A microservices architecture has many unique characteristics, including the following:

- Teams are cross-functional, combining development, database, and user-experience skills, and each team implements its services across the broad software stack.

- Components are implemented as independently replaceable services rather than as libraries.

- Communication follows the "smart endpoints and dumb pipes" pattern: the logic lives in the services themselves, while the channels between them only carry messages across hard service boundaries.

- Services remain independent, with each application function operating as a single service that can be independently deployed and updated.
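The "smart endpoints and dumb pipes" characteristic can be sketched in a few lines of Python. In this illustration a standard-library `queue.Queue` stands in for the pipe (a real system would use a message broker or plain HTTP), and the order and billing functions are hypothetical endpoints invented for the example.

```python
import json
import queue


def publish_order(pipe, order_id, amount_cents):
    """Smart endpoint 1: validates and serializes before handing bytes to the pipe."""
    if amount_cents <= 0:
        raise ValueError("amount_cents must be positive")
    event = {"type": "order_placed", "order_id": order_id, "amount_cents": amount_cents}
    pipe.put(json.dumps(event).encode("utf-8"))


def consume_for_billing(pipe):
    """Smart endpoint 2: decodes, filters, and applies its own business rule."""
    invoices = []
    while True:
        try:
            raw = pipe.get_nowait()
        except queue.Empty:
            break
        event = json.loads(raw)
        if event.get("type") != "order_placed":
            continue  # billing ignores events it does not understand
        invoices.append({"order_id": event["order_id"], "total_cents": event["amount_cents"]})
    return invoices


if __name__ == "__main__":
    pipe = queue.Queue()  # the "dumb pipe": it moves bytes and knows nothing about orders
    publish_order(pipe, "o-1", 1200)
    print(consume_for_billing(pipe))
```

All validation, serialization, and filtering sits in the two endpoints. The pipe could be swapped for a different transport without touching any business rule.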

Characteristics of a microservices architecture and design

Monolithic applications keep everything in one large codebase, which makes the software massive and labor-intensive to manage. As the codebase grows, adding or improving features becomes increasingly complex. Although you can still deploy features, this complexity limits experimentation and can slow how quickly teams test and deploy new ideas.

With monolithic applications, if a single process fails, it affects the entire application or service.

Because of these challenges, it’s essential to review the key characteristics of microservices and their design, including the following:

1. Independent

Within a microservices architecture, applications and services are independent components, and each application process runs as its own service. These services communicate through well-defined interfaces using lightweight APIs. Because microservices are operated independently, teams can update, scale, secure, segment, and modify each service based on demand and the application’s specific function. In short, independent microservices remove the single point of failure found in a monolith.

2. Autonomous

It is much easier to build automation around microservices than around monolithic architectures. Organizations can develop, update, deploy, and scale each microservice component without affecting any other service within an application. When designing an application that uses microservices, it isn’t necessary to share any code or implementation with other services, because all communication between microservice components happens through well-defined APIs. You can also automate deployment and scaling for individual microservices without affecting other application components.
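As one illustration of automated scaling for a single service, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula in Python. The function name and the `min_replicas` and `max_replicas` bounds are assumptions for this example, and the tolerance and stabilization behavior of a real autoscaler is deliberately left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified replica calculation for one service; bounds are illustrative."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so the service never scales to zero
    # or beyond what the cluster is allowed to run.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # Four replicas averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

Because the calculation takes only one service's replica count and metric as input, each microservice can be scaled on its own signal while the rest of the application is left alone.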

3. Specialized

Microservices let you define application functions at a fine granularity. Each application service is designed for a specific set of capabilities, so developers can create microservices that focus on solving specific problems. As developers add to a service, they can also break it up into smaller services to keep complexity in check.

Microservices and DevOps

Microservices tie in closely with DevOps practices. Many developers believe a microservices architecture is directly optimized for DevOps and continuous integration/continuous delivery (CI/CD).

Indeed, microservices architectures require DevOps. Microservices introduce numerous moving parts, complexities, and dependencies, and without DevOps they can become difficult to deploy and operate reliably. A solid DevOps practice provides the deployment, monitoring, lifecycle management, and automation solutions necessary to support microservices architectures.

Benefits of a microservices architecture

Microservices architecture helps DevOps teams bring highly scalable applications to market faster and is more resilient than a monolithic approach. In a monolith, one service failure simultaneously affects adjacent services and performance, causing delays or outages. With a microservices architecture, each service has clear boundaries and resources. If one fails, the remaining services continue running.
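The resilience point can be sketched as follows. This is a minimal illustration rather than a real deployment: the catalog and recommendation services are hypothetical in-process functions, and the outage is simulated with a flag. In practice the failure would surface as a timeout or connection error on a network call.

```python
def catalog_service():
    """Hypothetical service that stays healthy throughout the example."""
    return ["desk", "lamp", "chair"]


def recommendation_service(user_id, healthy=True):
    """Hypothetical service whose outage is simulated with the healthy flag."""
    if not healthy:
        raise ConnectionError("recommendation service unavailable")
    return ["lamp"]


def render_home_page(user_id, recommendations_healthy=True):
    """Compose a page from two services, degrading gracefully if one is down."""
    page = {"catalog": catalog_service(), "recommended": []}
    try:
        page["recommended"] = recommendation_service(
            user_id, healthy=recommendations_healthy
        )
    except ConnectionError:
        # The failure stays inside the service boundary: the page is still
        # served, just without personalized recommendations.
        pass
    return page


if __name__ == "__main__":
    print(render_home_page("user-1"))
    print(render_home_page("user-1", recommendations_healthy=False))
```

The isolation is not automatic. It holds because the caller treats the other service as something that can fail and decides what a degraded response looks like.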

Other benefits include the following:

- Flexible architecture. Microservices architecture enables developers to use many different images, containers, and management engines. This flexibility gives developers tremendous latitude and configuration options when creating and deploying applications.

- Uses fewer resources. Microservices tend to use fewer resources at runtime because a cluster manager automatically allocates memory and CPU capacity among the services within each cluster based on performance and availability. As each cluster hosts many services, there are fewer clusters to manage.

- More reliable uptime. There is less risk of downtime because developers do not have to redeploy the entire application when updating a service. A small service codebase also simplifies troubleshooting, shortening mean time to detect a problem (MTTD) and mean time to recovery (MTTR).

- Simple network calls. Network calls made using microservices are simple and lightweight because the functionality of each service is limited. Many microservices expose REST APIs, which define the rules and commands that services use to communicate. REST is popular with developers because it builds on easy-to-learn HTTP methods such as GET, POST, PUT, and DELETE.
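To show how REST-style calls map HTTP methods onto operations on a resource, here is a small in-memory sketch in Python. It involves no network: the `TodoResource` class, the `/todos` path, and the status codes chosen for each case are assumptions made for this example, following common REST conventions rather than any particular framework.

```python
import itertools


class TodoResource:
    """Hypothetical resource handler mapping HTTP methods to CRUD operations."""

    def __init__(self):
        self._items = {}
        self._ids = itertools.count(1)

    def handle(self, method, path, body=None):
        """Return a (status_code, payload) tuple for a request."""
        parts = [p for p in path.split("/") if p]
        if not parts or parts[0] != "todos" or len(parts) > 2:
            return 404, {"error": "not found"}

        if len(parts) == 1:  # collection: /todos
            if method == "GET":
                return 200, list(self._items.values())
            if method == "POST":
                item_id = next(self._ids)
                self._items[item_id] = {**(body or {}), "id": item_id}
                return 201, self._items[item_id]
            return 405, {"error": "method not allowed"}

        # single item: /todos/<id>
        if not parts[1].isdigit() or int(parts[1]) not in self._items:
            return 404, {"error": "not found"}
        item_id = int(parts[1])
        if method == "GET":
            return 200, self._items[item_id]
        if method == "PUT":
            self._items[item_id] = {**(body or {}), "id": item_id}
            return 200, self._items[item_id]
        if method == "DELETE":
            del self._items[item_id]
            return 204, None
        return 405, {"error": "method not allowed"}


if __name__ == "__main__":
    api = TodoResource()
    print(api.handle("POST", "/todos", {"title": "write tests"}))
    print(api.handle("GET", "/todos"))
    print(api.handle("DELETE", "/todos/1"))
```

The small, predictable vocabulary (create with POST, read with GET, replace with PUT, remove with DELETE) is what keeps these calls easy to learn and easy to reason about.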

Challenges of a microservices architecture

Reaping the benefits of a microservices architecture requires overcoming a few inherent challenges. For example, a long chain of service calls over the network could decrease performance and reliability. When more services can make calls simultaneously, the potential for failure compounds for each service, especially when handling large call volumes. However, automated detection and testing can streamline and optimize these API backends and prevent potential IT downtime.
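A short worked example shows why long call chains hurt reliability. It rests on two simplifying assumptions that real systems only approximate: a request succeeds only if every service in the chain succeeds, and services fail independently of one another. Under those assumptions, end-to-end availability is the product of the individual availabilities. The function name below is hypothetical.

```python
import math


def chain_availability(availabilities):
    """End-to-end availability of a sequential call chain.

    Assumes independent failures and that any single failed call
    fails the whole request.
    """
    values = list(availabilities)
    for value in values:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each availability must be between 0 and 1")
    return math.prod(values)


if __name__ == "__main__":
    # Every service is 99.9% available; only the chain length changes.
    for hops in (1, 5, 10, 20):
        print(hops, round(chain_availability([0.999] * hops), 4))
```

With ten services in the chain, each available 99.9% of the time, the request as a whole succeeds only about 99.0% of the time, which is why shorter chains, timeouts, and fallbacks matter.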

Here are some additional challenges that can arise with a microservices architecture:

- Steep learning curve. Initial setup can be a challenge, as configuring images and containers can be tricky without previous experience or expertise.

- Complexity. Fragmentation, while a unique benefit, means there are more pieces to manage and own. Teams must adopt a common language and implement automated solutions to help them manage and coordinate the complexity.

- Limited observability. Monitoring can be difficult with many dynamic services managed throughout disparate environments. Manually pulling metrics from a managed system such as Kubernetes can be laborious.

- Cultural shift. The cultural shift that comes with microservices architecture requires team members to think more efficiently and modularly. However, it also requires time and commitment for teams to transition smoothly.

Five best practices for microservices

When developing a microservices architecture, these five best practices are helpful to include during all stages of the process.

- Establish clear ownership. Give every component a clearly identified owner and treat ownership of each one as equally important, with each team member playing a critical role in all phases of the application development lifecycle.

- Define clear development processes. Clearly define CI/CD processes to help development processes run efficiently, with every team member capable of deploying an update to production.

- Reduce dependencies between services. Use asynchronous communication to achieve loose coupling and reduce dependencies between services so a change in one will not affect application performance and end users.

- Test comprehensively. Test early and often using multiple methods, including using an instance of one microservice to test a separate service that depends on it.

- Integrate security testing. Bake application security into all phases of development — starting with the design and continuing through DevSecOps. This is critical because numerous calls will be made over the network and more intermediary systems are involved in each instance.
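One way to apply the advice on loose coupling and comprehensive testing is to pass a service its dependency as a parameter, so that a test can substitute a stand-in for the other service. The sketch below is an illustration under that assumption; `OrderService`, `StubInventoryClient`, and their methods are hypothetical names, not part of any real library.

```python
class StubInventoryClient:
    """Test double standing in for a real inventory microservice client."""

    def __init__(self, stock):
        self._stock = dict(stock)
        self.calls = []  # records which SKUs were looked up

    def available(self, sku):
        self.calls.append(sku)
        return self._stock.get(sku, 0)


class OrderService:
    """Service under test; depends only on the client's available() method."""

    def __init__(self, inventory_client):
        self._inventory = inventory_client

    def place_order(self, sku, quantity):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        if self._inventory.available(sku) < quantity:
            return {"status": "rejected", "reason": "insufficient stock"}
        return {"status": "accepted", "sku": sku, "quantity": quantity}


if __name__ == "__main__":
    stub = StubInventoryClient({"sku-1": 3})
    orders = OrderService(stub)
    print(orders.place_order("sku-1", 2))
    print(orders.place_order("sku-1", 5))
    print(stub.calls)
```

Tests built this way run without the inventory service being deployed. They complement, rather than replace, integration tests that exercise the real service over the network.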

Microservices managed

To manage the complexity of microservices architecture, DevOps and IT teams need a solution that puts automation and observability at the forefront of microservices monitoring and management.

Dynatrace delivers broad, end-to-end observability into microservices, as well as the systems and platforms that host and orchestrate them, including Kubernetes, cloud-native platforms, and open source technologies.

Fueled by continuous automation, the Dynatrace AI engine, Davis, helps DevOps teams implement the automatic detection and testing required to mitigate or eliminate reliability issues with complex call chains. With AI and continuous automation, teams can easily discover where to streamline.

Learn more and watch the magic in action at the on-demand webinar exploring PurePath, Dynatrace’s patented technology for end-to-end observability into microservices, serverless applications, containers, service mesh, and the latest open source standards, such as OpenTelemetry.
