If you’re a child of the ’80s and a gamer at heart (or just love retro gaming systems), then you may be familiar with Activision’s blockbuster game, “Pitfall!”. Released in 1982 for the Atari 2600 console, it quickly became the best-selling video game that year. The game’s character, “Pitfall Harry”, ran through a jungle swinging over crocodile-infested rivers, jumping over rolling logs, and trying to avoid being bitten by rattlesnakes and scorpions. Success was measured by collecting treasure while trying to escape from the jungle.

This may be a stretch, but there are many similarities here with building and maintaining high-performing web and mobile applications. Sure, you don’t have to avoid rattlesnakes and quicksand while burning down your user-story backlog…but there are plenty of pitfalls out there you hope to avoid while trying to get your customers to engage with your latest features and to improve conversions (think: “treasures”).

In this new “pitfalls” series, I hope to outline some of the shortcomings that have always been inherent in traditional synthetic monitoring and, more importantly, how Dynatrace set out to build, from the ground up, a new approach to Digital Experience Monitoring that overcomes these pitfalls. Let’s get started…

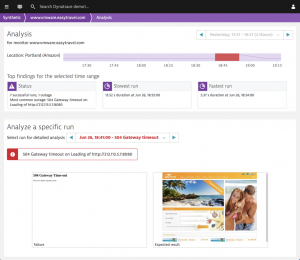

“Is it you, me, or the Internet?”

If you’ve been using a synthetic monitoring solution to provide an external view of the performance and availability of your website, then you know how common that question is. When your synthetic vendor sends you an alert indicating a problem with your site, often the next step is to devote time to determine whether the cause is due to:

- Random “Internet noise” that you have little control over

- Your application (or possibly a dependency with another application)

- Your infrastructure (physical or cloud)

- The node or site managed by your synthetic vendor (yes…even vendors can have a bad day!)

Unfortunately, there is little information in synthetic data alone that helps you determine this quickly or easily, which can sometimes cause you to spend hours chasing a ghost.

Sure, more advanced synthetic monitoring solutions provide some “directional data” on where to focus your investigation. For example, a “TCP Timeout Failure” could indicate a network issue, and a spike in “Time to First Byte” could point to a server or backend issue. But this never gives you full assurance that there is, or is not, an issue on your end. Years ago, most synthetic vendors introduced the capability to “retry on failure” to at least help reduce some of the Internet noise and transient issues. This Band-Aid approach proves the point: synthetic monitoring by itself, or as I like to call it, “disconnected synthetic monitoring”, can’t be relied upon without other monitoring tools to validate whether an issue is legitimate. Pitfall number one: traditional synthetic monitoring will always require external validation.
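To make “retry on failure” and “directional data” concrete, here is a minimal Python sketch. It is not any vendor’s implementation: the `run_check` function, the `probe` callable (which is assumed to return a time to first byte in seconds or raise an error), and the 2-second threshold are all hypothetical names and values chosen for illustration.

```python
from typing import Callable


def run_check(probe: Callable[[], float], retries: int = 1, slow_ttfb: float = 2.0) -> dict:
    """Run a synthetic check, retrying on failure to filter transient noise.

    `probe` is a hypothetical callable that returns time to first byte in
    seconds, or raises TimeoutError / ConnectionError when the request fails.
    """
    hint = "unknown"
    for attempt in range(1, retries + 2):  # first try plus `retries` retries
        try:
            ttfb = probe()
        except TimeoutError:
            hint = "timeout: possibly a network issue"
        except ConnectionError:
            hint = "connection error: possibly a server or network issue"
        else:
            if ttfb <= slow_ttfb:
                return {"ok": True, "attempts": attempt, "hint": None}
            hint = "slow first byte: possibly a server or backend issue"
    # Every attempt failed; the hint is only a direction, not a diagnosis.
    return {"ok": False, "attempts": retries + 1, "hint": hint}
```

Note that even when every attempt fails, the result carries only a hint. Nothing in the check itself can confirm whether the fault lies with the application, the network, or the monitoring node.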

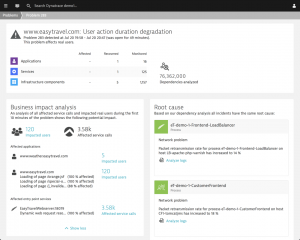

Focus on the $5,000,000 problem, not the $5 one.

With so many monitoring tools now proliferating in enterprise organizations, you’d think it would be easy to prioritize your response to the myriad alerts and incidents that come in on a weekly or even daily basis. In 2015, one researcher found that 65% of enterprises have 10 or more commercial monitoring tools, with 19% having more than 50! When I talk to customers, they tell me that having more tools often generates more noise…and traditional synthetic monitoring is no different.

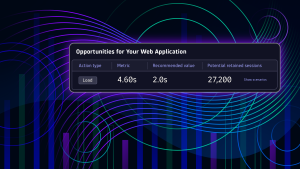

Making things even noisier, all synthetic alerts generally look alike. Yes, it’s possible to flag some as more important than others, say a workflow that monitors a key customer journey or purchase transaction, but even this won’t tell you if your business was really affected by a performance or availability hit. To understand this, you have to look at other data to see if the incident occurred during peak time or when the application was rarely used. Wouldn’t it be great to know which of your high-value customers were affected so you could proactively follow up with them? Synthetic monitoring alone cannot tell you what’s most important (your customers) and what’s not. Pitfall number two: traditional synthetic monitoring cannot tell you the business impact of an incident.
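As a rough illustration of the “other data” you would need, the sketch below cross-references an incident window with real-user sessions to count who was affected. The `Session` record, its `high_value` flag, and the `impacted_users` helper are hypothetical and stand in for whatever your real user monitoring data actually provides.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Set, Tuple


@dataclass
class Session:
    # Hypothetical real-user session record, for illustration only.
    user_id: str
    start: datetime
    end: datetime
    high_value: bool = False


def impacted_users(
    sessions: Iterable[Session], incident_start: datetime, incident_end: datetime
) -> Tuple[Set[str], Set[str]]:
    """Return (all affected user ids, affected high-value user ids)."""
    # A session is affected if it overlaps the incident window at all.
    overlapping = [
        s for s in sessions if s.start <= incident_end and s.end >= incident_start
    ]
    affected = {s.user_id for s in overlapping}
    high_value = {s.user_id for s in overlapping if s.high_value}
    return affected, high_value
```

An incident at 3 a.m. that overlaps two sessions and one at noon that overlaps two thousand would raise identical synthetic alerts; only the session data tells them apart.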

Give me answers, not more data!

Of course, just knowing you have a problem, and even the business impact of that problem, is never going to be enough. The core of any monitoring strategy is having the solution tell you the root cause of an incident so that you can quickly resolve it. (Actually, it would be to fix the problem, but that is for another post!) This is where an isolated synthetic monitoring strategy falls short again. Despite all the advancements in synthetic monitoring over the last 20+ years, it is no closer to figuring out the root cause of any incident it identifies. It was designed to provide an outside-in view of the health of your web application and is therefore blind to anything on the “inside”.

Even portable “private” or software-based synthetic monitoring nodes, which you deploy internally, are not able to pinpoint the cause of an issue. They might help you isolate the domain of a problem, such as a data center, network segment, or even web or application server…but not the root cause. You will always be forced to take your synthetic data, drill down on the specific time period in question, and cross-reference it with other monitoring data (log data, server metrics, etc.) to figure out the real issue. Of course, all this additional work takes time, and that time would be better spent actually fixing the problem and following up with impacted customers. Pitfall number three: traditional synthetic monitoring can never tell you the root cause of a problem…you always have to look elsewhere for answers.
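The manual cross-referencing described above often amounts to something like the following sketch: take the timestamp of a synthetic failure and pull the log lines recorded around it. The `logs_near` helper, the 60-second window, and the assumed log format (an ISO-8601 timestamp, a space, then the message) are all illustrative assumptions, not a description of any particular tool.

```python
from datetime import datetime, timedelta
from typing import Iterable, List


def logs_near(
    failure_time: datetime, log_lines: Iterable[str], window_seconds: int = 60
) -> List[str]:
    """Return log lines stamped within `window_seconds` of a synthetic failure.

    Assumes each line starts with a timezone-naive ISO-8601 timestamp followed
    by a space, and that `failure_time` is timezone-naive as well.
    """
    window = timedelta(seconds=window_seconds)
    hits = []
    for line in log_lines:
        stamp, _, _message = line.partition(" ")
        try:
            ts = datetime.fromisoformat(stamp)
        except ValueError:
            continue  # skip lines without a parseable timestamp
        if abs(ts - failure_time) <= window:
            hits.append(line)
    return hits
```

Even with a helper like this, someone still has to read the matching lines and decide which of them, if any, explains the failure.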

A fresh way of thinking about synthetic monitoring…

I’ve been in the synthetic monitoring business for over 20 years. As Keynote Systems’ earliest employee in 1995, when we invented synthetic monitoring (see patent #6,006,260), I’ve witnessed the rapid rise, fall, and now resurgence of external synthetic monitoring. Even with modern browsers supporting W3C’s Navigation Timing spec, more advanced methods to record, manipulate, and execute customer journeys, and automated tasks such as traceroute-on-error or DNS diagnostics on failure, there are still areas where synthetic monitoring will always be lacking. Dynatrace knew this years ago and wanted to develop a new way of monitoring modern applications and, more importantly, supporting modern DevOps teams.

So it was three years ago that we started an effort to re-invent Dynatrace and redefine monitoring as we knew it. (Forbes wrote a good article about it here.) This also gave us the opportunity to recreate Digital Experience Monitoring from the ground up in a way that takes advantage of the strengths of synthetic monitoring, namely availability monitoring and baselining performance, and weaves them into the strengths of other digital experience monitoring capabilities, such as real user monitoring and session replay. Add to that a deep integration with infrastructure and application monitoring…and we have something that is magical. (Ok…it’s not magic. It’s really smart algorithms, deep machine learning, and automation, but it sure feels like magic!)

With our new Digital Experience Monitoring approach in Dynatrace, you are now able to overcome these pitfalls. (Pitfall Harry can breathe a sigh of relief!) Because Dynatrace has full visibility into every layer of the application, capturing every customer action and tracing that action through the entire application, you can know with certainty if a problem is on your end or just somewhere on the Internet, and alert accordingly. When an issue arises, our integration with real user monitoring lets you quickly see how many users are impacted and who these users are, so you can prioritize your efforts on the issues with the biggest impact and proactively follow up with your highest-value customers. And with this full visibility into the application itself and all dependent services, processes, and hosts, Dynatrace creates a very accurate dependency map that lets us intelligently identify the exact root cause of an incident and even what triggered it, freeing up your time to focus on your software and customers. Full 360-degree visibility into your infrastructure, applications, and users is now possible!

These are things that modern DevOps teams have been dreaming about for years, and they’re finally here! Don’t take my word for it, though. Check out for yourself how Dynatrace has redefined monitoring and taken a fresh approach to synthetic monitoring. Sign up for a free 15-day trial here or, if you are an existing Dynatrace synthetic customer, talk to your Account Executive or Customer Success Manager to learn how you can have an extended trial of Dynatrace. You can finally escape the jungle and avoid these pitfalls altogether!

Author’s note: When you are done reading this article, check out my follow-up post, The Pitfalls of Traditional Synthetic Monitoring (part 2), where I highlight three more pitfalls to avoid.
