AI/ML Observability

Get a holistic view of the AI-powered parts of your system, such as large language models (LLMs), vector databases, and prompt engineering frameworks.

Platform for Developing and Managing Models

Monitor platform-specific metrics and logs, such as memory, accelerator, and CPU utilization

Google AI Platform

Get insights into Google AI Platform service metrics collected from the Google Operations API to ensure the health of your cloud infrastructure.

Amazon SageMaker

Build, train, and deploy machine learning (ML) models quickly.

Machine Learning on AWS

Robust, cloud-based service that makes it easy for developers of all skill levels to use machine learning technology.

coming soon

TensorFlow Keras

Observe the training progress of TensorFlow Keras AI models

Azure Machine Learning

Collection of services and tools intended to help developers train and deploy machine learning models.

Orchestration Layer

Track costs, sample prompts and completions, and monitor errors and performance using the logs, metrics, and traces of each workflow action
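A minimal Python sketch of a per-action roll-up, assuming each workflow action emits one span-like record with token counts, duration, and an error flag. The `Span` class, its fields, and the per-1K-token prices are hypothetical illustrations, not a Dynatrace, OpenTelemetry, or model-provider API.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-1K-token prices in USD; real prices depend on provider and model.
PRICE_PER_1K = {"prompt": 0.0015, "completion": 0.002}


@dataclass
class Span:
    """Hypothetical record emitted for one workflow action (e.g. one chain step)."""
    action: str
    prompt_tokens: int
    completion_tokens: int
    duration_ms: float
    error: bool = False


def summarize(spans):
    """Aggregate call count, cost, error count, and mean latency per workflow action."""
    totals = defaultdict(lambda: {"calls": 0, "cost_usd": 0.0, "errors": 0, "total_ms": 0.0})
    for s in spans:
        t = totals[s.action]
        t["calls"] += 1
        t["cost_usd"] += (s.prompt_tokens * PRICE_PER_1K["prompt"]
                          + s.completion_tokens * PRICE_PER_1K["completion"]) / 1000
        t["errors"] += int(s.error)
        t["total_ms"] += s.duration_ms
    # Turn the running latency sum into a per-action mean.
    return {
        action: {
            "calls": t["calls"],
            "cost_usd": round(t["cost_usd"], 6),
            "errors": t["errors"],
            "mean_ms": t["total_ms"] / t["calls"],
        }
        for action, t in totals.items()
    }


if __name__ == "__main__":
    spans = [
        Span("retrieve", 0, 0, 40.0),
        Span("generate", 1000, 500, 900.0),
        Span("generate", 2000, 1000, 1100.0, error=True),
    ]
    for action, stats in summarize(spans).items():
        print(action, stats)
```

Grouping by action rather than by request is what lets a single slow or expensive step, such as generation versus retrieval, stand out.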

Semantic Layer

Monitor semantic caches and vector databases to optimize prompt engineering, search and retrieval, and overall resource utilization
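Semantic cache efficiency is commonly expressed as a hit ratio: a lookup counts as a hit when a stored prompt embedding is similar enough to the incoming one. The toy Python cache below illustrates that bookkeeping under stated assumptions: embeddings are already computed, similarity is cosine, and the 0.9 threshold is a hypothetical value. It is not how Milvus, Weaviate, or any other product implements caching.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Toy semantic cache that counts hits and misses (illustrative only)."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # hypothetical similarity cutoff
        self.entries = []           # list of (embedding, cached_response)
        self.hits = 0
        self.misses = 0

    def get(self, embedding):
        """Return the most similar cached response at or above the threshold, else None."""
        best, best_sim, found = None, self.threshold, False
        for emb, response in self.entries:
            sim = cosine(emb, embedding)
            if sim >= best_sim:
                best, best_sim, found = response, sim, True
        if found:
            self.hits += 1
        else:
            self.misses += 1
        return best

    def put(self, embedding, response):
        """Store a response under its prompt embedding."""
        self.entries.append((embedding, response))

    def hit_ratio(self):
        """Share of lookups served from the cache."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = SemanticCache(threshold=0.9)
cache.put([1.0, 0.0], "cached answer")
print(cache.get([0.99, 0.05]))  # near-duplicate prompt -> cached answer
print(cache.get([0.0, 1.0]))    # unrelated prompt -> None
print(cache.hit_ratio())        # 0.5
```

Raising the threshold trades fewer incorrect cache hits for a lower hit ratio, which is the cost and latency balance this metric helps tune.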

Milvus

Gain insights into vector database resource utilization and cache behavior

Weaviate

Observe your semantic cache efficiency to reduce cost and latency for LLM apps

Qdrant

Gain insights into your Qdrant vector collections

Elasticsearch

Monitor Elasticsearch clusters, nodes, and indexes, remotely or locally, via the API.

Model Layer

Observe service-level performance metrics, such as token consumption, latency, availability, response time, and error count, to track service-level agreements (SLAs)
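To illustrate how such metrics can be derived from raw call logs, here is a minimal Python sketch. The `Request` record is hypothetical, availability is assumed to mean the share of successful calls, and latency is summarized with a nearest-rank 95th percentile; a real service-level objective may define these differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical log record for one model call."""
    latency_ms: float
    tokens: int
    ok: bool


def percentile(values, p):
    """Nearest-rank percentile (p in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def sla_report(requests):
    """Summarize token consumption, p95 latency, availability, and error count."""
    errors = sum(1 for r in requests if not r.ok)
    return {
        "total_tokens": sum(r.tokens for r in requests),
        "p95_latency_ms": percentile([r.latency_ms for r in requests], 95),
        "availability": (len(requests) - errors) / len(requests),
        "error_count": errors,
    }


# 20 calls with latencies 100..2000 ms, 50 tokens each; call 7 failed.
reqs = [Request(latency_ms=100.0 * i, tokens=50, ok=(i != 7)) for i in range(1, 21)]
print(sla_report(reqs))
# {'total_tokens': 1000, 'p95_latency_ms': 1900.0, 'availability': 0.95, 'error_count': 1}
```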

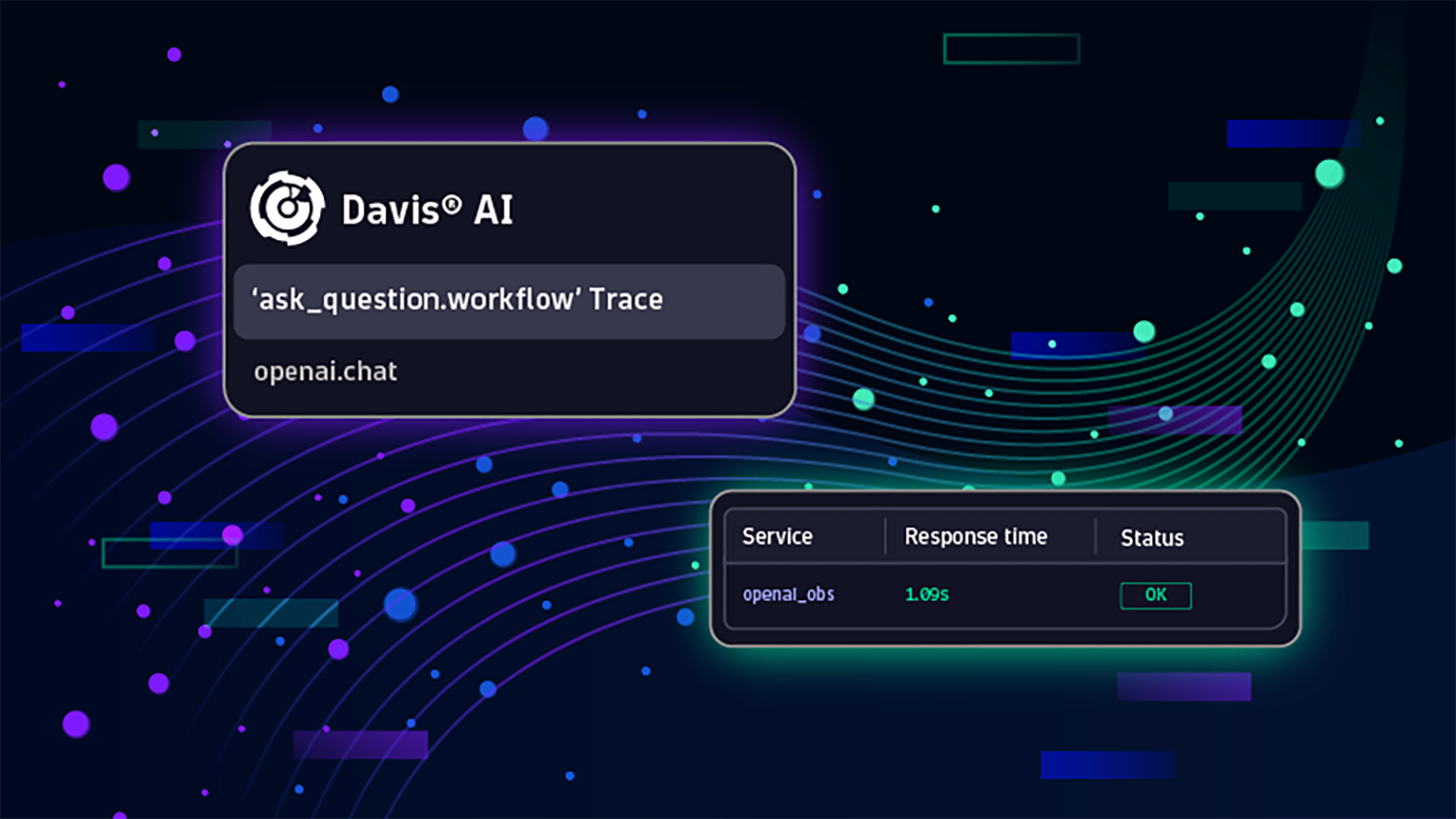

OpenAI Observability

Automatically monitor your OpenAI and Azure OpenAI services, such as GPT-3, Codex, DALL-E, or ChatGPT.

Amazon Textract

Makes it easy to add document text detection and analysis to your applications.

Amazon Translate

Text translation service using machine learning to provide high-quality translation on demand.

Azure Computer Vision

AI service to boost content discoverability, automate text extraction, analyze video in real time, and more.

Infrastructure Layer

Monitor infrastructure data, including temperature, memory utilization, and process usage, to support carbon-reduction initiatives

Are you looking for something different?

We have hundreds of apps, extensions, and other technologies to customize your environment