In the rapidly evolving landscape of artificial intelligence, ensuring your AI models’ performance, reliability, security, and user trust is paramount. This blog post explores how combining the Dynatrace full-stack observability platform with Traceloop’s OpenLLMetry, an OpenTelemetry SDK, provides comprehensive insights into Large Language Models (LLMs) in production environments. Observing AI models enables you to make informed decisions, optimize performance, and ensure compliance with emerging AI regulations.

“Engineers today lack an easy way to track the tokens and prompt usage of their LLM applications in production. By using OpenLLMetry and Dynatrace, anyone can get complete visibility into their system, including gen-AI parts with 5 minutes of work.”

Nir Gazit, CEO and Co-Founder, Traceloop

Why AI model observability matters

The adoption of LLMs has surged across industries, particularly since the introduction of OpenAI’s GPT models. While these models deliver impressive results, keeping them operating within defined boundaries has become increasingly challenging.

AI model observability plays a crucial role in achieving this by addressing these key aspects:

- Model performance and reliability: Evaluating the model’s ability to provide accurate and timely responses, ensuring stability, and assessing domain-specific semantic accuracy.

- Resource consumption: Observing computational resource availability and saturation, whether the model is deployed in cloud-native environments like Kubernetes or on CPU-enabled servers.

- Data quality and drift: Monitoring the quality and characteristics of training and runtime data to detect significant changes that might impact model accuracy.

- Explainability and interpretability: Providing information on model versions, parameters, and deployment schedules, which is essential for interpreting and understanding model answers.

- Security and compliance: Actively preventing security threats at both the application and model levels to ensure responsible and compliant AI usage.

The challenge of AI model observability

One challenge in AI model observability is the diverse tooling landscape involved in gaining critical insights. OpenTelemetry has become the standard for collecting traces, metrics, and logs, but AI applications also need seamless support for the many SDKs and frameworks they rely on, such as LangChain and Pinecone.

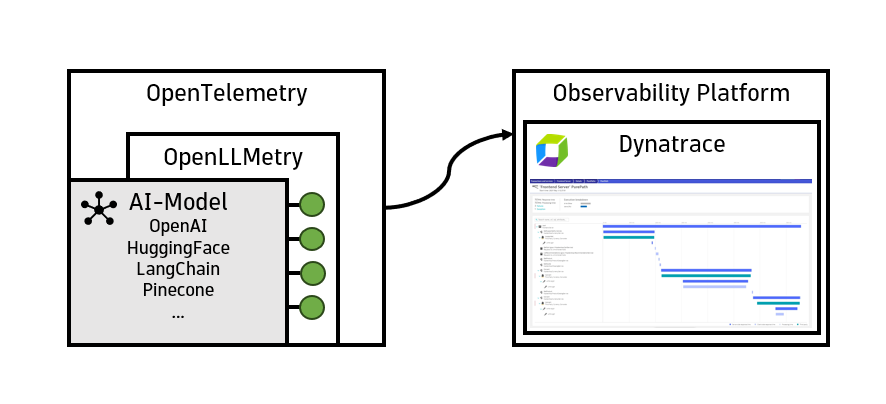

Combining Dynatrace with Traceloop’s OpenLLMetry addresses this heterogeneity by supporting a range of popular LLMs as well as prompt engineering and chaining frameworks. OpenLLMetry, an open source SDK built on OpenTelemetry, standardizes data collection for AI model observability.

How OpenLLMetry works

OpenLLMetry supports AI model observability by capturing and normalizing key performance indicators (KPIs) from diverse AI frameworks. Because it adds this layer on top of the OpenTelemetry SDK, the data flows directly into your Dynatrace environment, where advanced analytics provide a holistic view of your AI deployment stack.
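The export path to Dynatrace is usually configured through environment variables rather than code. This sketch assumes, based on our reading of the Traceloop documentation, that the SDK reads `TRACELOOP_BASE_URL` and `TRACELOOP_HEADERS`, and that Dynatrace accepts OTLP data under `/api/v2/otlp` with an `Api-Token` authorization header. The helper name `dynatrace_otlp_env`, the environment URL, and the token are hypothetical placeholders; verify the variable names against the current docs and use a token permitted to ingest OpenTelemetry traces.

```python
from urllib.parse import quote


def dynatrace_otlp_env(environment_url: str, api_token: str) -> dict[str, str]:
    """Hypothetical helper: build env vars pointing OpenLLMetry's exporter at Dynatrace.

    Assumes a SaaS environment URL such as https://<env-id>.live.dynatrace.com
    and an API token allowed to ingest OpenTelemetry traces.
    """
    base = environment_url.rstrip("/")
    return {
        # Dynatrace's OTLP ingest API is rooted at /api/v2/otlp.
        "TRACELOOP_BASE_URL": f"{base}/api/v2/otlp",
        # The header value is URL-encoded, so the space in "Api-Token <token>" becomes %20.
        "TRACELOOP_HEADERS": "Authorization=" + quote(f"Api-Token {api_token}", safe=""),
    }


if __name__ == "__main__":
    env = dynatrace_otlp_env("https://abc12345.live.dynatrace.com/", "dt0c01.EXAMPLE")
    for key, value in env.items():
        print(f"{key}={value}")
```

Export these variables in the process environment, then initialize the SDK, for example with `Traceloop.init(app_name="my-llm-app")` from `traceloop.sdk` (the app name is a placeholder). Keeping the endpoint and token out of the code lets the same application ship telemetry to different Dynatrace environments.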

Python dominates AI model development, and OpenTelemetry serves as a robust standard for collecting observability data from Python applications, including traces, metrics, and logs. However, while OpenTelemetry’s auto-instrumentation provides valuable insights into spans and basic resource attributes, it does not capture the KPIs specific to AI models, such as model name and version, prompt and completion token counts, and the temperature parameter.
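To make the gap concrete, the sketch below models the LLM-specific facts that a generic HTTP span would not carry and turns them into span attributes. The class `LLMCallKPIs` is hypothetical, and the attribute keys are modeled on the OpenTelemetry GenAI semantic conventions; the exact names a given OpenLLMetry release emits may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMCallKPIs:
    """LLM-specific facts that generic HTTP auto-instrumentation does not record."""

    request_model: str      # model name the application asked for
    response_model: str     # exact model version reported back by the provider
    temperature: float      # sampling temperature sent with the request
    prompt_tokens: int      # tokens consumed by the prompt
    completion_tokens: int  # tokens generated in the answer

    def to_span_attributes(self) -> dict[str, object]:
        # Keys modeled on the OpenTelemetry GenAI semantic conventions;
        # the exact names a given OpenLLMetry release emits may differ.
        return {
            "gen_ai.request.model": self.request_model,
            "gen_ai.response.model": self.response_model,
            "gen_ai.request.temperature": self.temperature,
            "gen_ai.usage.prompt_tokens": self.prompt_tokens,
            "gen_ai.usage.completion_tokens": self.completion_tokens,
        }


if __name__ == "__main__":
    # Made-up values for illustration only.
    kpis = LLMCallKPIs("gpt-3.5-turbo", "gpt-3.5-turbo-0125", 0.7, 120, 53)
    print(kpis.to_span_attributes())
```

With the OpenTelemetry Python API, a dictionary like this could be attached to the current span via `span.set_attributes(...)`; an instrumentation library such as OpenLLMetry does the equivalent for you.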

OpenLLMetry bridges this gap by supporting popular AI vendors and frameworks such as OpenAI, Hugging Face, Pinecone, and LangChain. By standardizing how essential model KPIs are collected through OpenTelemetry, the open source OpenLLMetry SDK gives you thorough insight into your LLM applications.
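Standardization means that usage data reported in different shapes ends up under the same names. The function below is a minimal sketch of that normalization idea, not OpenLLMetry’s code: the first payload shape is a simplified OpenAI-style chat completion response, and the second is a hypothetical provider that names the same counters differently. The function name and output keys are illustrative.

```python
from typing import Any


def normalize_llm_usage(response: dict[str, Any]) -> dict[str, Any]:
    """Map provider-specific response payloads onto one common KPI schema."""
    usage = response.get("usage", {})
    if "prompt_tokens" in usage:
        # Simplified OpenAI-style chat completion payload.
        model = response["model"]
        prompt, completion = usage["prompt_tokens"], usage["completion_tokens"]
    elif "input_tokens" in usage:
        # Hypothetical second provider that uses different field names.
        model = response["model_id"]
        prompt, completion = usage["input_tokens"], usage["output_tokens"]
    else:
        raise ValueError("unrecognized usage format")
    return {
        "model": model,
        "prompt_tokens": prompt,
        "completion_tokens": completion,
        "total_tokens": prompt + completion,
    }


if __name__ == "__main__":
    openai_like = {"model": "gpt-3.5-turbo",
                   "usage": {"prompt_tokens": 12, "completion_tokens": 53}}
    other = {"model_id": "example-model",
             "usage": {"input_tokens": 12, "output_tokens": 53}}
    print(normalize_llm_usage(openai_like))
    print(normalize_llm_usage(other))
```

Once every framework’s data shares one schema, dashboards and alerts can be written once and applied to all of them.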

Because the collected data flows into your Dynatrace environment, you can analyze LLM metrics, spans, and logs in the context of all traces and code-level information. Licensed under Apache 2.0 and maintained by Traceloop, OpenLLMetry gives product owners a transparent view of AI model performance.

The diagram below illustrates how OpenLLMetry captures and transmits AI model KPIs to your Dynatrace environment, empowering your business with unparalleled insights into your AI deployment landscape.

Dynatrace OneAgent® automatically injects tracing and captures code-level information for many technologies, such as Java, .NET, Go, and Node.js. Python-based AI models, however, require OpenTelemetry instrumentation, which is the gap OpenLLMetry fills.
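Instrumenting Python code essentially means wrapping the calls you care about so their timing, outcome, and parameters are recorded. The toy decorator below is a minimal, standard-library-only sketch of that idea; it is neither the OpenTelemetry API nor OpenLLMetry’s code, and `traced`, `RECORDED_SPANS`, and `fake_completion` are hypothetical names.

```python
import functools
import time
from typing import Any, Callable

RECORDED_SPANS: list[dict[str, Any]] = []  # stand-in for a real span exporter


def traced(name: str) -> Callable:
    """Toy decorator: time a call and record a span-like dict."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on success and failure, so every call leaves a record.
                RECORDED_SPANS.append({
                    "name": name,
                    "duration_ms": (time.perf_counter() - start) * 1000,
                    "status": status,
                    # Keep only the LLM parameters worth observing.
                    "attributes": {k: v for k, v in kwargs.items()
                                   if k in ("model", "temperature")},
                })
        return wrapper
    return decorator


@traced("llm.completion")
def fake_completion(prompt: str, *, model: str, temperature: float) -> str:
    # Hypothetical stand-in for a real LLM client call.
    return prompt.upper()


if __name__ == "__main__":
    fake_completion("hello", model="gpt-3.5-turbo", temperature=0.7)
    print(RECORDED_SPANS[-1])
```

Libraries such as OpenLLMetry apply this kind of wrapping to the client libraries themselves, so application code does not need a decorator on every call.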

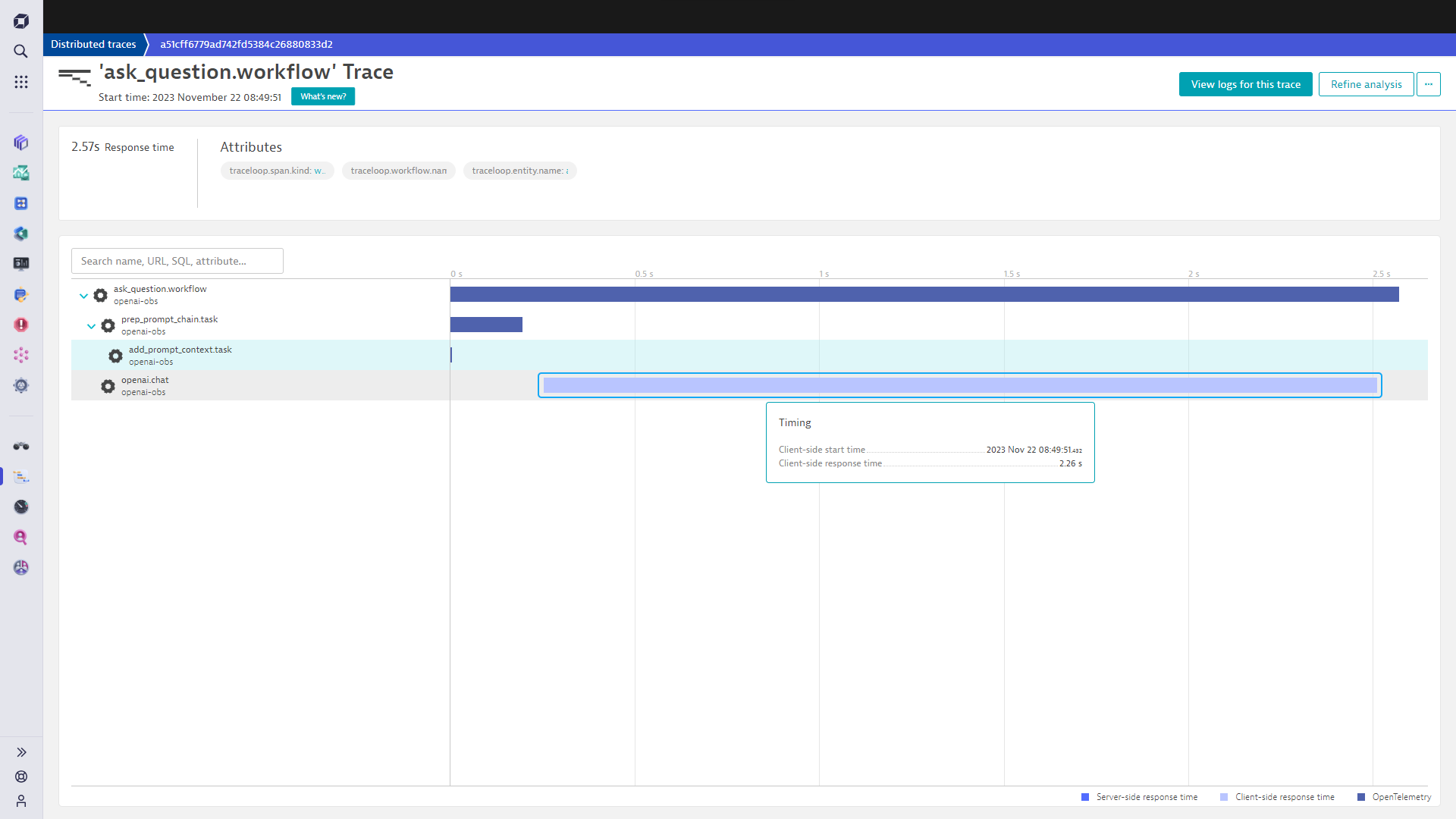

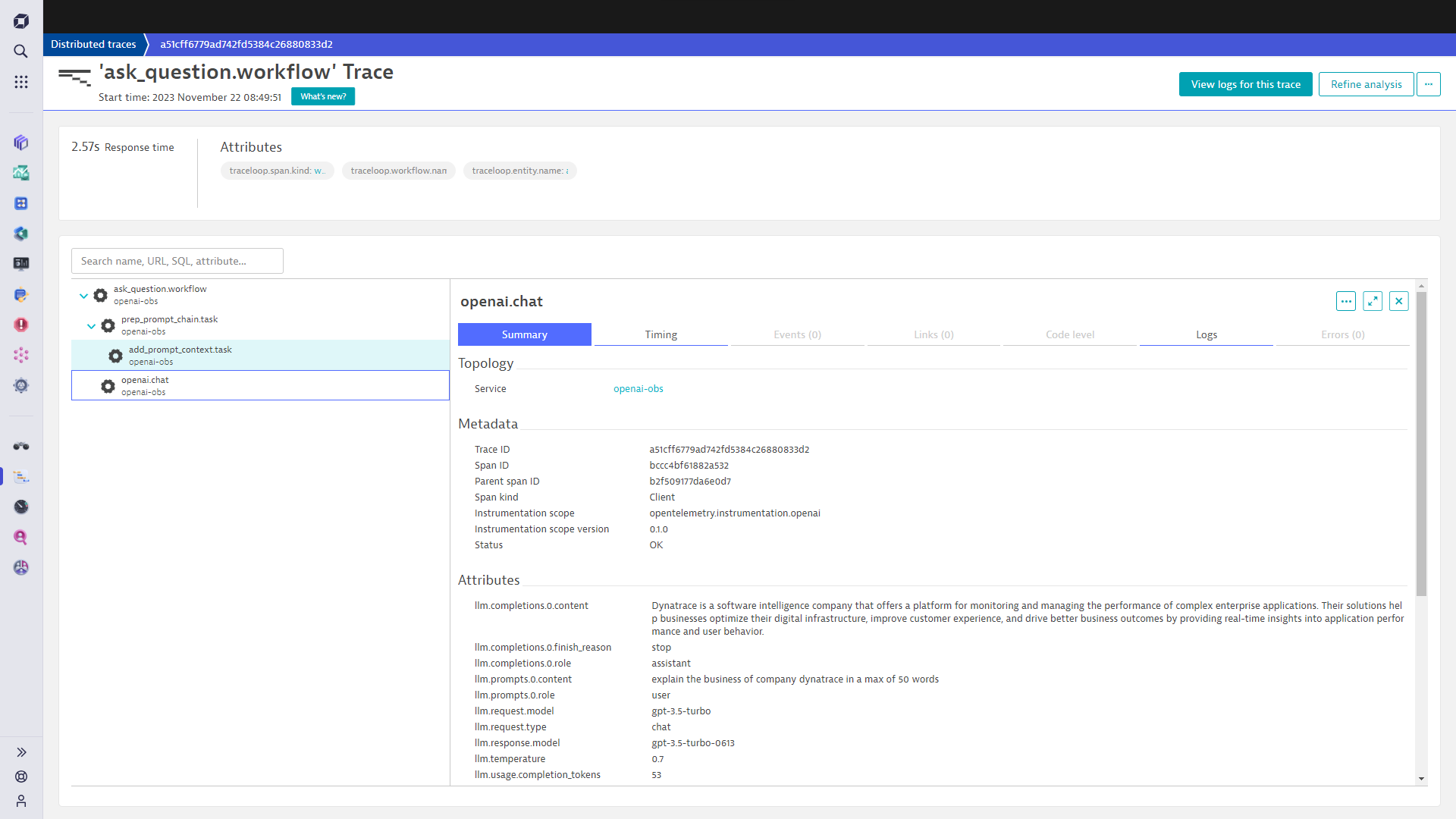

In the Dynatrace web UI, you can track your AI model in real time, examine its model attributes, and assess the reliability and latency of each specific LangChain task, as demonstrated below.

The span captured by Traceloop’s OpenLLMetry automatically displays vital details: our LangChain application uses the gpt-3.5-turbo model, invokes it with a temperature of 0.7, and consumed 53 completion tokens for this individual request.
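Dynatrace computes reliability and latency per LangChain task for you. To illustrate what that aggregation means, here is a minimal sketch over exported span records; the `SpanRecord` type, the task names, and the durations are hypothetical, and only count, error rate, mean, and maximum latency are computed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SpanRecord:
    task: str           # name of a LangChain step (hypothetical)
    duration_ms: float  # span duration in milliseconds
    ok: bool            # False if the span ended with an error


def summarize_by_task(spans: list[SpanRecord]) -> dict[str, dict[str, float]]:
    """Group spans by task and compute reliability and latency figures."""
    grouped: dict[str, list[SpanRecord]] = defaultdict(list)
    for span in spans:
        grouped[span.task].append(span)
    summary: dict[str, dict[str, float]] = {}
    for task, items in grouped.items():
        durations = [s.duration_ms for s in items]
        failures = sum(1 for s in items if not s.ok)
        summary[task] = {
            "calls": len(items),
            "error_rate": failures / len(items),
            "avg_ms": sum(durations) / len(durations),
            "max_ms": max(durations),
        }
    return summary


if __name__ == "__main__":
    spans = [
        SpanRecord("retrieve", 120.0, True),
        SpanRecord("retrieve", 80.0, True),
        SpanRecord("llm", 900.0, True),
        SpanRecord("llm", 1500.0, False),
    ]
    for task, stats in summarize_by_task(spans).items():
        print(task, stats)
```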

With the growth of AI, maintaining transparency is essential

Observing AI models such as LLMs in production is crucial for enhancing performance, reliability, security, and user trust. This includes monitoring AI-related costs to ensure they remain within acceptable margins. The Dynatrace platform, coupled with Traceloop’s OpenLLMetry OpenTelemetry SDK, offers comprehensive visibility from model inception to completion.
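Token counts captured on each span translate directly into cost. The sketch below shows the arithmetic, assuming per-1,000-token pricing; the prices in `PRICES_PER_1K` are hypothetical placeholders, not current vendor rates, and the helper names are illustrative.

```python
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's current rates.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"prompt": Decimal("0.0015"), "completion": Decimal("0.002")},
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Cost of one request: tokens times the per-1K price, divided by 1,000."""
    price = PRICES_PER_1K[model]
    return (price["prompt"] * prompt_tokens
            + price["completion"] * completion_tokens) / 1000


def within_budget(costs: list[Decimal], budget: Decimal) -> bool:
    """True if the summed request costs stay at or below the budget."""
    return sum(costs, Decimal("0")) <= budget


if __name__ == "__main__":
    # 120 prompt tokens is a made-up figure; 53 completion tokens matches the span above.
    cost = request_cost("gpt-3.5-turbo", 120, 53)
    print(cost)
    print(within_budget([cost] * 1000, Decimal("1.00")))
```

With these placeholder prices, 120 prompt tokens and 53 completion tokens cost (0.0015 × 120 + 0.002 × 53) / 1000 = 0.000286 USD. `Decimal` is used instead of `float` so that summing many small amounts does not accumulate rounding error.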

As AI adoption grows, maintaining transparency is essential for regulatory compliance. While Dynatrace automates tracing for many technologies, Python-based AI models require OpenTelemetry. OpenLLMetry bridges this gap with an open source SDK that supports popular AI frameworks and vendors, standardizes LLM observability data, and integrates with Dynatrace for in-depth analysis.
