There are many cloud migration patterns that offer guidance on moving workloads to the cloud. While most of them are “good practices”, the good old “one size fits all” approach has turned out to be impractical for the vast majority of cloud migration projects I have seen in recent years.

One of our customers has shared with me lessons they learned from modernizing monolithic applications and re-fitting them into a hybrid-cloud continuous delivery architecture.

Lesson #1: Hybrid-Cloud means diverse tools & technologies

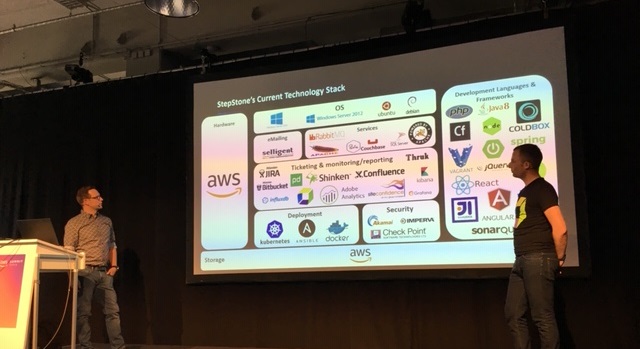

At the recent AWS Summit in Berlin, I was fortunate enough to share the stage with Jacek Jaworski, Development Manager at StepStone. They have been on a migration path over the past few years and started moving their existing monolithic architecture into the AWS cloud. Jacek started his presentation with the following slide, which is very representative of many other organizations I’ve talked to: a very diverse set of tools used to manage a very diverse and complex technology stack.

Lesson #2: There is a 7th Migration Pattern

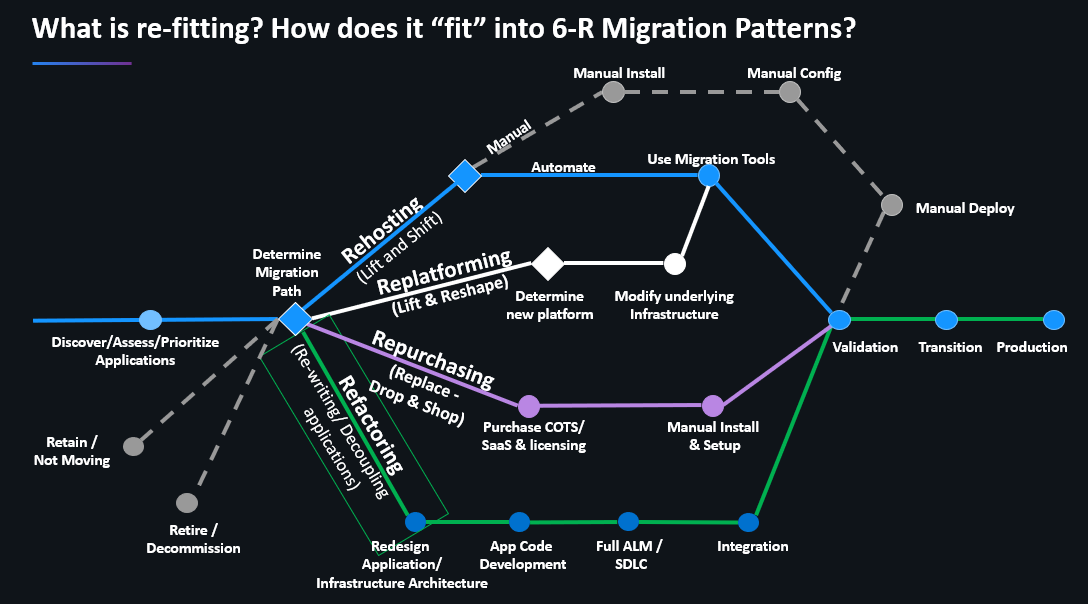

We then chatted about StepStone’s approach to migrate towards the public cloud. Turns out that their approach fits really well into what Brian Wilson and I recently discussed on our PurePerformance Podcast with AWS Evangelist Mandus Momberg: Application Modernization – the 7-R Approach. While our industry has been talking about the 6-R Migration Patterns, we see that most projects are really a combination of several patterns. And for that reason, we shall call the 7th R “Re-Fitting” as we try to re-fit existing architectures into a hybrid-cloud world:

To put this into context, StepStone initially started re-hosting on-premises workloads to EC2 (Lift & Shift), a great way to gain experience with “The Cloud”. The next step involved re-platforming, for example leveraging RDS instead of running their own database instances. Finally, they started re-writing and decoupling services to fully leverage cloud native capabilities and services such as ECS, EKS, Fargate or Serverless.

Lesson #3: Migrations lead to Hybrid-Cloud Architectural Meshes

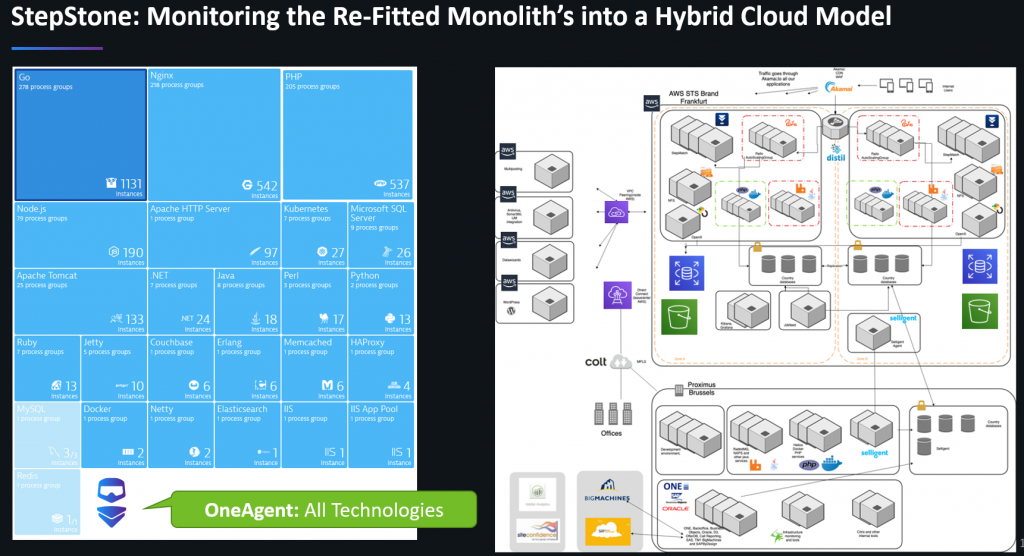

In this transition phase – and probably for a very long time in the future – there is going to be a mix of architectures & technology stacks. Jacek showed the following Dynatrace ServiceFlow that shows how re-fitting an architecture doesn’t simply make the monolith go away. You also don’t end up in a “less complex” architecture. To the contrary: Legacy, Microservices, Cloud-Services & 3rd party are all being mashed together. That’s true for StepStone and for most other projects I have seen:

Lesson #4: Invest in Hybrid-Cloud Monitoring

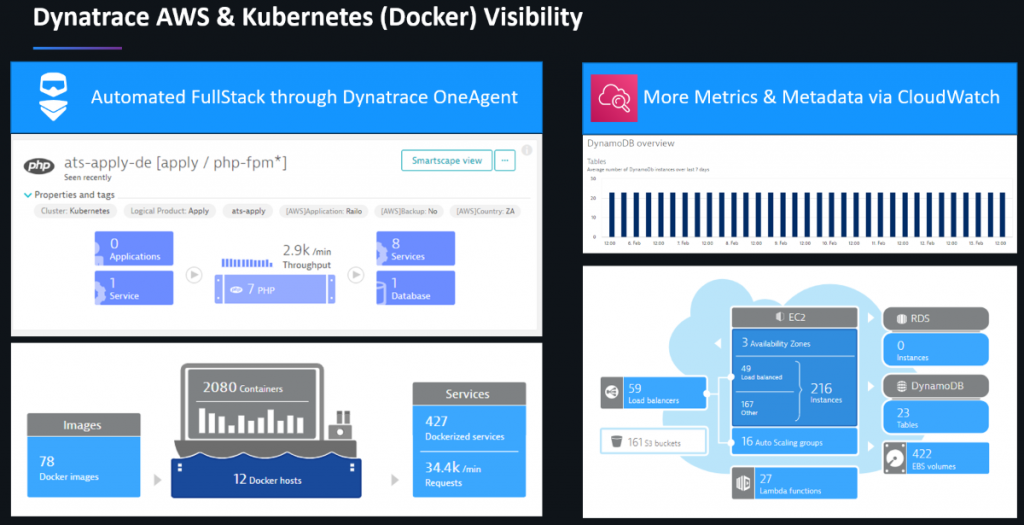

The above screenshot already shows a core capability of modern hybrid-cloud monitoring solutions such as Dynatrace: automatic monitoring of all architectural components across hybrid cloud environments.

For that and other capabilities, StepStone chose Dynatrace, as it also allowed them to consolidate point monitoring tools for different technology stacks. The following is a slide Jacek used to explain their current architecture (below right). It shows how they operate across AWS Frankfurt and their Data Center in Brussels. It also shows the different technologies and services they use in each data center.

On the left, we see that Dynatrace OneAgent in fact covers all these technologies across the data centers:

Jacek used the following slide to highlight how Dynatrace supports the set of core capabilities they identified as essential for a hybrid-cloud monitoring solution:

- Automatic code-level insights into Java, .NET, PHP, Go and Node.js without code changes

- Automatic instrumentation of containers without changing the image

- Automatic monitoring of AWS Services through CloudWatch

- Automatic Tagging of hosts, processes & services based on AWS & Container Tags

To learn more about Dynatrace’s capabilities on container and cloud native monitoring, check out Docker Monitoring and Cloud Platform Monitoring.

Lesson #5: Embrace Continuous Optimization

Re-Fit once and you are done! Well – that’s not how it works in real life. Like with any “living organism/architecture” you must nurture it to make sure it keeps running smoothly, leveraging the resources it is given in the most cost-efficient way. For that reason, Jacek and team have embraced Continuous Optimization. They leverage Dynatrace’s data and the built-in hotspot assistants to optimize frontend code, backend code and deployment configurations. Here are three examples Jacek had in his slides showing how Dynatrace allows all their engineers to continuously optimize their current implementation.

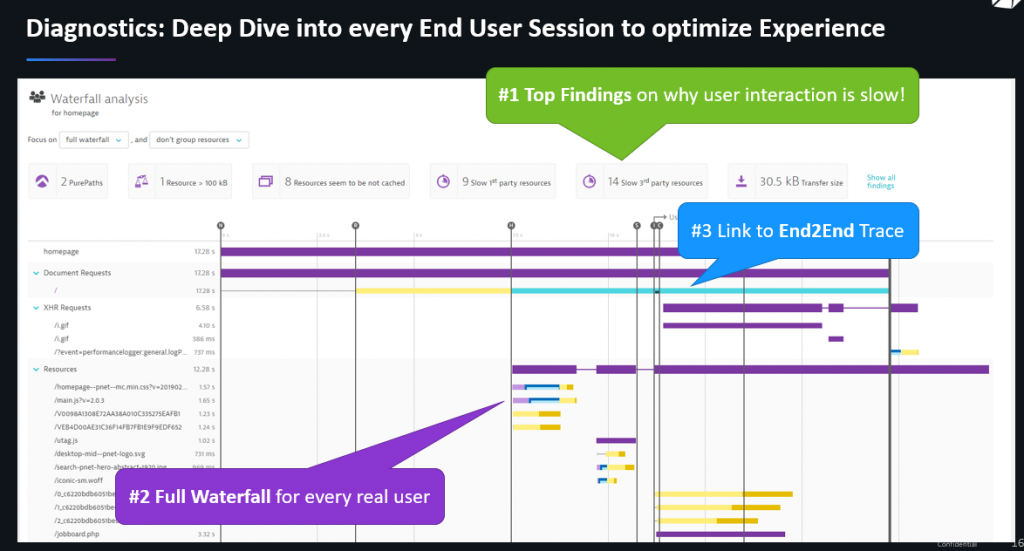

Optimization 1: Frontend Performance

Dynatrace Real User Monitoring captures full browser details of every user, every click and every swipe. Frontend Engineers can either look at an individual user’s page load actions or use Dynatrace’s feature to analyze pages that impact the largest number of users or are most critical. In any case, Dynatrace provides full waterfall details and an automatic top findings section as you can see in the following screenshot. This particular page suffers from 14 slow 3rd party resources, includes un-cached static resources and has one long running server-side request:

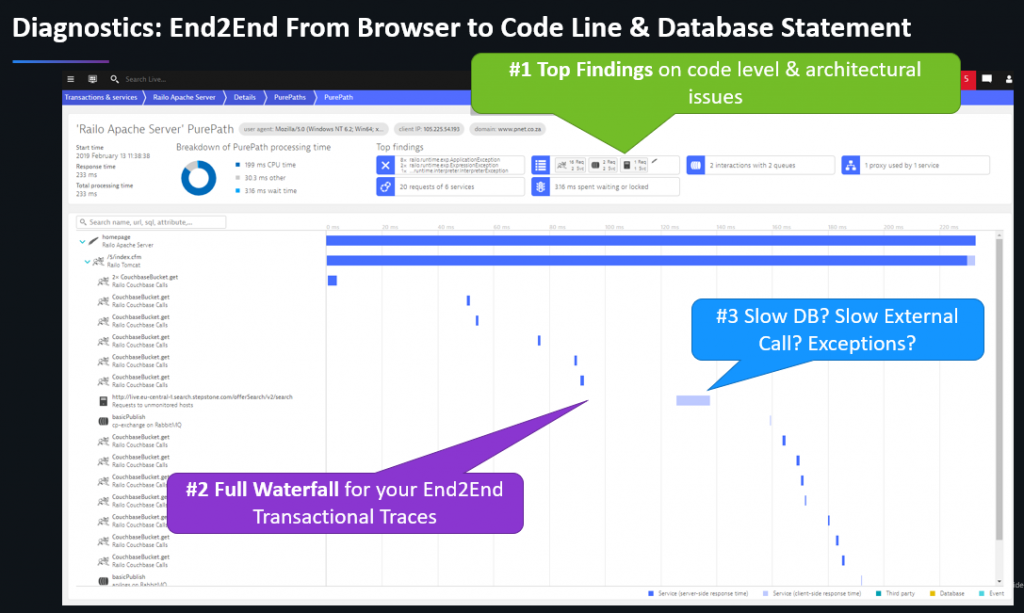

Optimization 2: Backend Performance

Dynatrace OneAgent captures and traces every single request in the backend, whether it is a request triggered when loading a web page, an API call or any backend batch transaction. Just as with frontend analysis, an engineer can identify hotspots across a set of transactions or focus on individual requests that have an issue. The following screenshot shows the captured PurePath (=transactional trace) that impacted the page load shown in the previous screenshot. Just as with frontend analysis the engineer can analyze the full waterfall including method calls, SQL executions, Exceptions … or just follow the top findings which really speed up hotspot analysis:

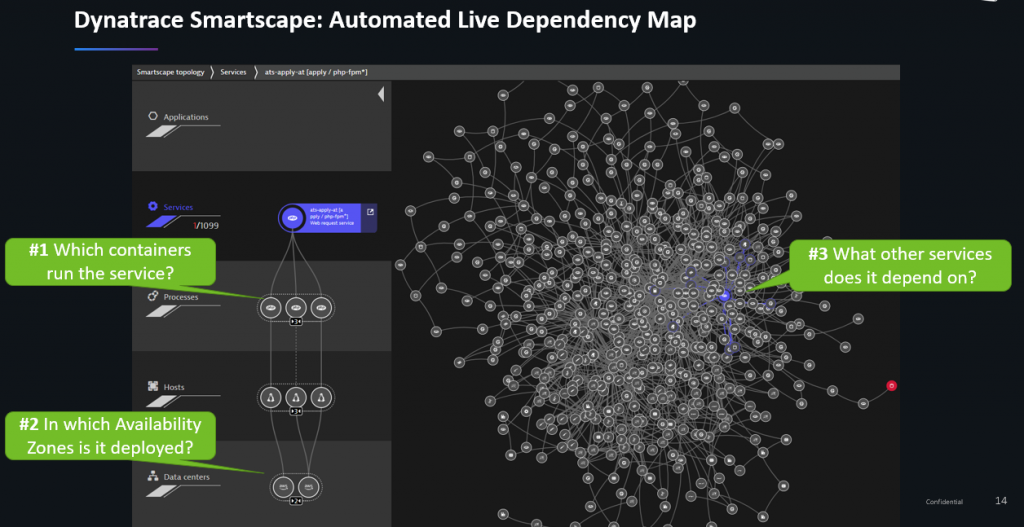

Optimization 3: Deployments & Dependencies

The Dynatrace OneAgent automatically tracks horizontal dependencies (by tracing transactions end-to-end using PurePath) as well as vertical dependencies (by doing full-stack instrumentation from the host all the way up to the end user). This live dependency map is called Dynatrace Smartscape, and the Dynatrace AI uses it for problem and root cause detection. For optimization purposes, this dependency data can be used to answer questions such as:

- Are services deployed & scaled based on current specification?

- What are the real service dependencies?

- How do these dependencies change with a code or configuration change?

StepStone is not yet fully utilizing the potential of Smartscape as part of their development and CI/CD process, but they are planning to leverage Smartscape data (which is also exposed through the Dynatrace API) to automatically validate correct deployments and dependencies. In the following screenshot we learn that one of their PHP-based services currently runs on 3 EC2 Linux instances across 2 AWS Availability Zones. We also learn which services depend on this PHP-based service (incoming calls) and which services it depends on (outgoing calls). This data can be used to validate service contracts and to make sure that, for example, a pre-production service is not connecting to a production database. Thanks to the API, these checks can also be fully automated in the Continuous Delivery Pipeline to stop pipelines that resulted in faulty deployments or dependency configurations!

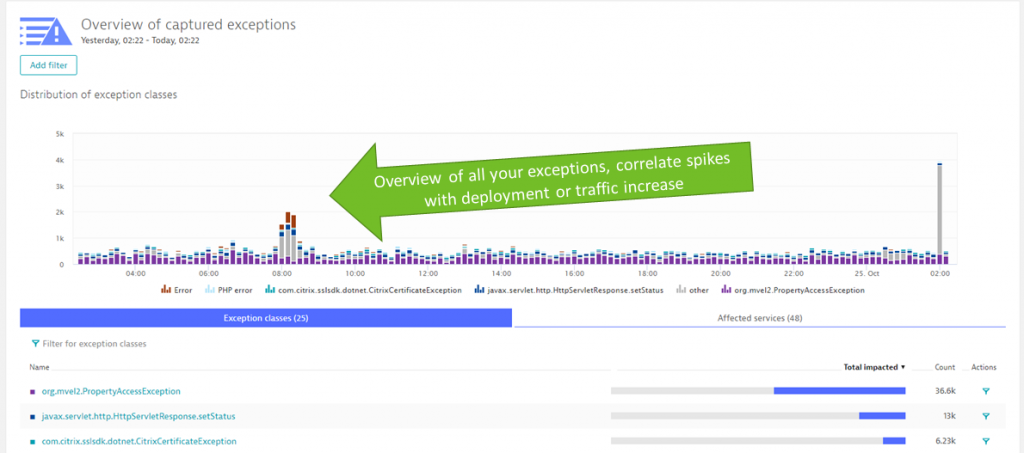
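To make the idea of an automated deployment and dependency check more concrete, here is a minimal Python sketch. It does not call the Dynatrace API; the `ServiceSnapshot` record, the `validate_deployment` function and all service names are hypothetical stand-ins for whatever topology data a pipeline step would have fetched beforehand. It only illustrates the kind of rules described above: a minimum instance count, a minimum number of availability zones, and no calls across environments.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ServiceSnapshot:
    """Hypothetical, simplified topology record for one service."""
    name: str
    environment: str              # e.g. "preprod" or "prod"
    instance_count: int
    availability_zones: Set[str]
    outgoing_calls: List[str] = field(default_factory=list)


def validate_deployment(
    service: ServiceSnapshot,
    known_services: Dict[str, ServiceSnapshot],
    min_instances: int,
    min_zones: int,
) -> List[str]:
    """Return a list of violations; an empty list means the check passed."""
    violations = []
    if service.instance_count < min_instances:
        violations.append(
            f"{service.name}: {service.instance_count} instances, "
            f"expected at least {min_instances}"
        )
    if len(service.availability_zones) < min_zones:
        violations.append(
            f"{service.name}: {len(service.availability_zones)} zones, "
            f"expected at least {min_zones}"
        )
    # Every outgoing call must stay inside the caller's own environment.
    for target_name in service.outgoing_calls:
        target = known_services.get(target_name)
        if target is None:
            violations.append(f"{service.name}: unknown dependency {target_name}")
        elif target.environment != service.environment:
            violations.append(
                f"{service.name} ({service.environment}) calls "
                f"{target.name} ({target.environment})"
            )
    return violations


# Illustrative data only; names and values are made up for this sketch.
services = {
    "orders-db-prod": ServiceSnapshot("orders-db-prod", "prod", 1, {"az-a"}),
    "orders-db-preprod": ServiceSnapshot("orders-db-preprod", "preprod", 1, {"az-a"}),
}
php_service = ServiceSnapshot(
    name="php-frontend",
    environment="preprod",
    instance_count=3,
    availability_zones={"az-a", "az-b"},
    outgoing_calls=["orders-db-prod"],
)

problems = validate_deployment(php_service, services, min_instances=3, min_zones=2)
for problem in problems:
    print(problem)
```

A pipeline step built along these lines could simply fail the build whenever the returned list is not empty. Here the pre-production service is flagged because it calls a production database, even though its instance and zone counts meet the specification.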

More Optimizations: Through the Diagnostics Views

There are many other optimization options, such as finding CPU, Memory, Exception or Database hotspots. For those I suggest you check out the Diagnostics Views in Dynatrace. Here is a screenshot of the Exception Analysis, where Dynatrace automatically analyzes all Exceptions, groups them by service and provides direct links to the code and transactions where these Exceptions are thrown. This makes it much easier to reduce Exception noise than searching for these exceptions in log files that are scattered around your hybrid-cloud infrastructure:

If you want to learn more about Diagnostics Use Cases check out my YouTube Tutorial on Advanced Diagnostics with Dynatrace.

What next? Unbreakable Continuous Delivery

As continuous improvement and migration journeys typically never end, Jacek and team have already picked their next projects. These focus on optimizing their Continuous Delivery workflows by following our ideas around Unbreakable Continuous Delivery: Automated Quality Gates, Automated Build Validation, Blue/Green Deployments, Self-Healing:

If you want to learn more about these unbreakable continuous delivery use cases I can recommend looking at our OpenSource project keptn.sh, which strives to automate on-boarding of your cloud native services to a modern cloud native unbreakable continuous delivery pipeline.
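Of the ideas listed above, the automated quality gate is probably the easiest to picture in code. The following Python sketch is an assumption-laden illustration, not how keptn or Dynatrace implement it: the function `evaluate_quality_gate`, the metric names and the limits are all hypothetical. It compares metrics collected for a build against upper limits and reports why a build should be stopped.

```python
from typing import Dict, List, Tuple


def evaluate_quality_gate(
    metrics: Dict[str, float],
    max_allowed: Dict[str, float],
) -> Tuple[bool, List[str]]:
    """Check each metric against its upper limit; return (passed, failures)."""
    failures = []
    for metric_name, limit in max_allowed.items():
        value = metrics.get(metric_name)
        if value is None:
            # Missing data is treated as a failure rather than a silent pass.
            failures.append(f"{metric_name}: no data collected")
        elif value > limit:
            failures.append(f"{metric_name}: {value} exceeds limit {limit}")
    return (len(failures) == 0, failures)


# Hypothetical metrics for one build and hypothetical limits.
build_metrics = {"response_time_ms": 480.0, "failure_rate_pct": 0.4}
limits = {"response_time_ms": 400.0, "failure_rate_pct": 1.0}

passed, reasons = evaluate_quality_gate(build_metrics, limits)
if not passed:
    for reason in reasons:
        print("Quality gate failed:", reason)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a gate that passes when no data arrives would hide exactly the kind of broken deployment it is meant to catch.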

Finally, I want to say thanks to Jacek and StepStone for sharing their story. I am glad to be a partner on that journey and look forward to more stories like this as they keep innovating and optimizing!
