What is WebLogic?

Oracle WebLogic Server is a Java EE application server currently developed by Oracle Corporation.

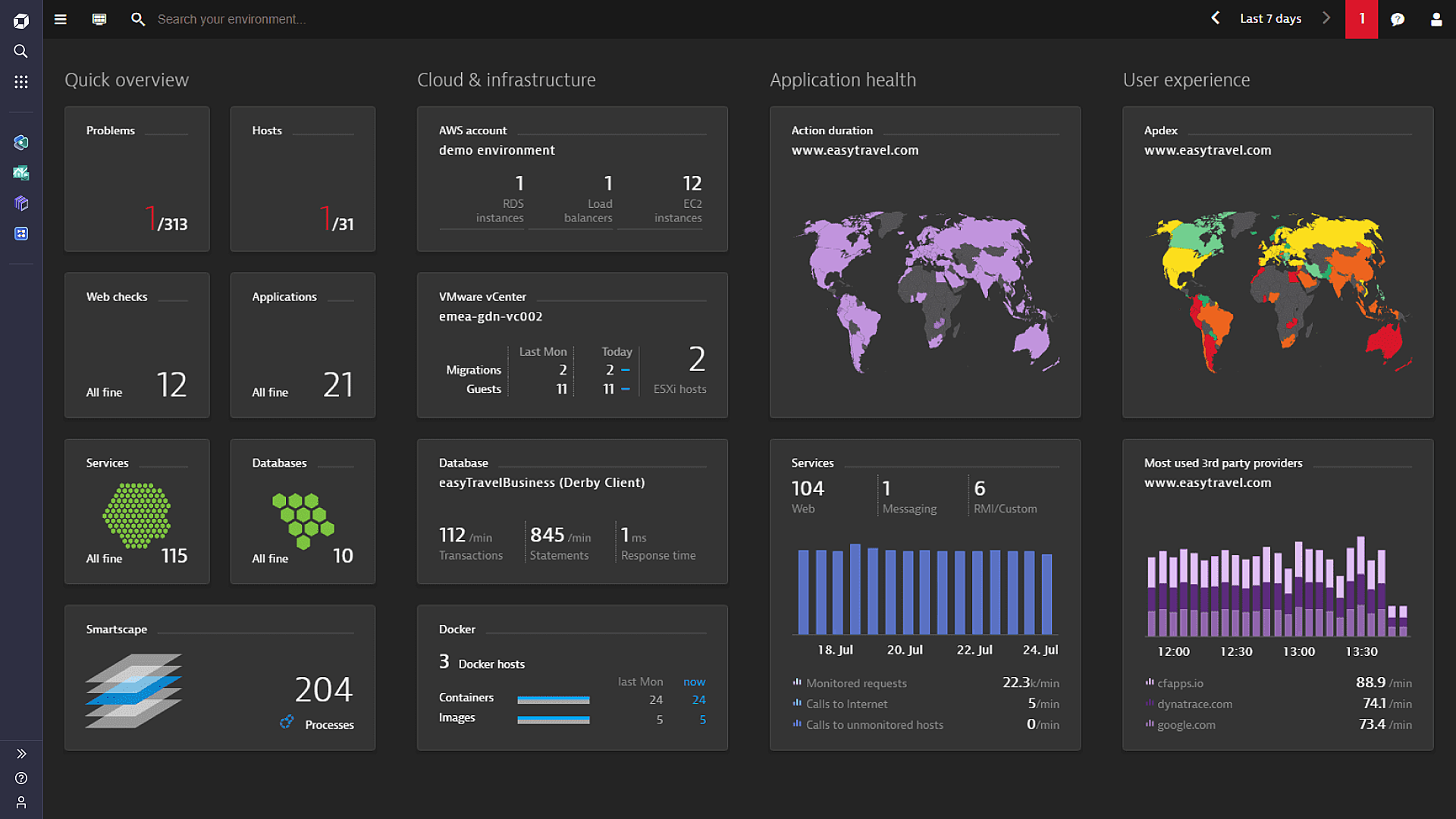

Gain visibility into your Java applications running on WebLogic

Dynatrace monitors and analyzes the database activities of your Java applications running on WebLogic, providing you with visibility from the browser all the way down to the individual database statements. The performance metrics you will be able to monitor for WebLogic include:

- JVM metrics

- Custom JMX metrics

- Garbage collection metrics

- All database statements

- All requests

- Suspension rate

- All dependencies

In under five minutes the Dynatrace OneAgent automatically discovers your entire Java application running on WebLogic.

Start monitoring your WebLogic servers in under 5 minutes!

In under five minutes, Dynatrace shows you your WebLogic servers’ CPU, memory, and network health metrics all the way down to the process level.

- Manual configuration of your monitoring setup is no longer necessary.

- Auto-detection starts monitoring new virtual machines as they are deployed.

- Dynatrace is the only solution that shows you process-specific network metrics.

Visualize WebLogic service requests end-to-end

Dynatrace understands your applications’ transactions from end-to-end. Service flow shows the actual execution of each individual service and service-request type. While Smartscape shows you your overall environment topology, Service flow provides you with the view point of a single service or service-request type.

Understand the impact of issues on customer experience

While monitoring your WebLogic servers, Dynatrace learns the details of your entire application architecture automatically.

- Artificial intelligence automatically identifies the dependencies within your environment.

- Dynatrace detects and analyzes availability and performance problems across your entire technology stack.

- By making root cause analysis, Dynatrace visualizes how problems evolve and how they impact the user experience.

We’re intrigued by its capability to work almost out of the box as well as being able to monitor system aspects as well as application performance and user experience.

Get the full picture with WebLogic server monitoring!

Sign up, deploy our agent and get unmatched insights out-of-the-box.