Making Garbage Collection faster

Chapter: Memory Management

Short of avoiding garbage collection altogether, there is only one way to make garbage collection faster: ensure that as few objects as possible are reachable during the garbage collection. The fewer objects that are alive, the less there is to be marked. This is the rationale behind the generational heap.

The Generation Conflict—Young vs. Old

In a typical application most objects are very short-lived. On the other hand, some objects last for a very long time and even until the application is terminated. When using generational garbage collection, the heap area is divided into two areas—a young generation and an old generation—that are garbage-collected via separate strategies.

Objects are usually created in the young area. Once an object has survived a couple of GC cycles it is tenured to the old generation. (The JRockit and IBM JVMs make exceptions for very large objects, as I will explain later.) After the application has completed its initial startup phase (most applications allocate caches, pools, and other permanent objects during startup), most allocated objects will not survive their first or second GC cycle. The number of live objects that need to be considered in each cycle should be stable and relatively small.
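The aging and tenuring described above can be pictured with a small toy model. This is only a sketch under stated assumptions: the class `TenuringSketch`, the `reachable` flag, and the threshold of two cycles are hypothetical names and values chosen for illustration, and no real JVM manages its heap with Java lists.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

/** Toy model of age-based tenuring; not how any real JVM is implemented. */
class TenuringSketch {
    /** Hypothetical threshold: minor GCs an object must survive before promotion. */
    static final int TENURING_THRESHOLD = 2;

    static final class Obj {
        final String name;
        boolean reachable = true; // stands in for "still referenced by the application"
        int age = 0;              // number of minor GCs survived so far
        Obj(String name) { this.name = name; }
    }

    final List<Obj> young = new ArrayList<>();
    final List<Obj> old = new ArrayList<>();

    Obj allocate(String name) {
        Obj o = new Obj(name);
        young.add(o); // new objects always start in the young generation
        return o;
    }

    /** One minor GC: drop dead young objects, age the survivors, promote the old enough ones. */
    void minorGc() {
        Iterator<Obj> it = young.iterator();
        while (it.hasNext()) {
            Obj o = it.next();
            if (!o.reachable) {
                it.remove();      // died young: reclaimed without touching the old generation
            } else if (++o.age >= TENURING_THRESHOLD) {
                it.remove();
                old.add(o);       // survived long enough: tenured
            }
        }
    }

    public static void main(String[] args) {
        TenuringSketch heap = new TenuringSketch();
        heap.allocate("cache");                       // long-lived, stays reachable
        heap.allocate("request-1").reachable = false; // temporary object
        heap.minorGc();
        heap.allocate("request-2").reachable = false; // another temporary object
        heap.minorGc();
        // prints "young=0 old=1": only the cache was tenured
        System.out.println("young=" + heap.young.size() + " old=" + heap.old.size());
    }
}
```

In this model the old generation grows only when an object outlives the threshold, which is the behavior the generational heap relies on.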

Allocations in the old generation should be infrequent, and in an ideal world would not happen at all after the initial startup phase. If the old generation is not growing and therefore not running out of space, it requires no garbage collection at all. There will be unreachable objects in the old generation, but as long as the memory is not needed, there is no reason to reclaim it.

To make this generational approach work, the young generation must be big enough to ensure that all temporary objects die there. Since the number of temporary objects in most applications depends on the current application load, the optimal young generation size is load-related. Therefore, sizing the young generation, known as generation-sizing, is the key to handling peak load.

Unfortunately, it is often not possible to reach an optimal state where all objects die in the young generation, and so the old generation will often require a concurrent garbage collector. Concurrent garbage collection together with a minimally growing old generation ensures that the unavoidable stop-the-world events will at least be very short and predictable.

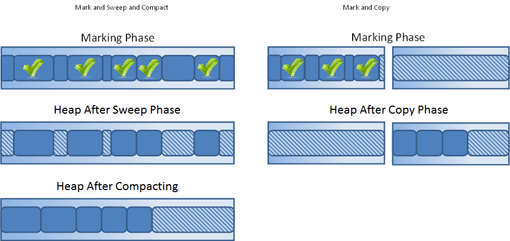

On the other hand, while there is a very high number of allocations in the young generation at the beginning of each GC cycle, only a small portion of those objects is still alive after each GC cycle. With the GC strategies we have discussed so far, this leads to a high level of fragmentation. You might think that using free lists would be a good option, but this will slow down allocations. An alternative strategy of executing a full compaction every time has a negative effect on pause time. Instead, most JVMs use a strategy known as copy collection in the young generation.

When Copying is Faster than Marking

Copy garbage collection divides the heap into two (or more) areas, only one of which is used for allocations. When this area is full, all live objects are copied to the second area, and then the first area is simply declared empty (see Figure 2.7).
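The copying step described above can be sketched as a toy semi-space collector. This is a minimal illustration, not a JVM implementation: `CopyCollectorSketch`, `Node`, and the explicit `roots` list are hypothetical, the two "areas" are simulated with Java lists, and the scan loop follows the well-known Cheney approach of using the target area as the work queue.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

/** Toy semi-space copy collector over a simulated heap; real JVMs copy raw memory. */
class CopyCollectorSketch {
    static final class Node {
        final String name;
        final List<Node> refs = new ArrayList<>();
        Node(String name) { this.name = name; }
    }

    List<Node> fromSpace = new ArrayList<>(); // the only area used for allocation
    List<Node> toSpace = new ArrayList<>();   // kept empty between collections

    Node allocate(String name) {
        Node n = new Node(name);
        fromSpace.add(n);
        return n;
    }

    /** Copies everything reachable from roots into toSpace, then swaps the areas. */
    List<Node> collect(List<Node> roots) {
        Map<Node, Node> forwarded = new IdentityHashMap<>(); // old object -> its copy
        List<Node> newRoots = new ArrayList<>();
        for (Node r : roots) {
            newRoots.add(copy(r, forwarded));
        }
        // toSpace doubles as the work queue: scan copies and fix up their references.
        for (int scan = 0; scan < toSpace.size(); scan++) {
            Node n = toSpace.get(scan);
            for (int i = 0; i < n.refs.size(); i++) {
                n.refs.set(i, copy(n.refs.get(i), forwarded));
            }
        }
        fromSpace.clear(); // dead objects were never visited; the area is simply declared empty
        List<Node> tmp = fromSpace;
        fromSpace = toSpace;
        toSpace = tmp;
        return newRoots;
    }

    private Node copy(Node old, Map<Node, Node> forwarded) {
        Node existing = forwarded.get(old);
        if (existing != null) {
            return existing;         // already copied, reuse the forwarding entry
        }
        Node c = new Node(old.name);
        c.refs.addAll(old.refs);     // still old references; rewritten during the scan
        forwarded.put(old, c);
        toSpace.add(c);
        return c;
    }

    public static void main(String[] args) {
        CopyCollectorSketch heap = new CopyCollectorSketch();
        Node session = heap.allocate("session");
        session.refs.add(heap.allocate("user"));
        for (int i = 0; i < 3; i++) {
            heap.allocate("temp-" + i); // unreachable garbage
        }
        heap.collect(List.of(session));
        System.out.println("live after GC: " + heap.fromSpace.size()); // prints "live after GC: 2"
    }
}
```

Note that the work done is proportional to the two live objects, not to the five allocated ones, which is why the approach pays off when most objects die.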

The advantage here is that no fragmentation occurs, and thus there is no need for free lists and compaction. Allocations are always fast and the GC algorithm is simple. This strategy is effective only if most objects die, which is the default in the young generation. However, this can lead to a problem when the JVM is executing a high number of concurrent transactions.

If the young generation is too small, objects are tenured prematurely to the old generation. If the young generation is too large, too many objects are alive (undead) and the GC cycle will take too long. Contrary to what most people think, these young-generation GCs, often termed minor GCs, are themselves stop-the-world events. Their negative impact on response time can be more severe than that of the occasional old-generation GC.
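One way to see how much time the collectors actually consume is to read the standard `java.lang.management` MXBeans. The following is a small sketch: the class name `GcStats` and the allocation loop are my own illustration, and the collector names in the output depend on the JVM and the GC configuration in use, so no particular output is implied.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Prints collection counts and accumulated collection time per collector. */
class GcStats {
    /** Returns one line per collector: name, collection count, accumulated time in ms. */
    static String report() {
        StringBuilder sb = new StringBuilder();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(gc.getName())
              .append(": count=").append(gc.getCollectionCount())
              .append(", timeMs=").append(gc.getCollectionTime())
              .append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Create short-lived garbage; whether this triggers collections depends on heap size and JIT.
        long checksum = 0;
        for (int i = 0; i < 2_000_000; i++) {
            byte[] tmp = new byte[256];
            checksum += tmp.length;
        }
        System.out.println(report() + "allocated bytes: " + checksum);
    }
}
```

Comparing the counts and times of the young-generation and old-generation collectors under realistic load gives a first indication of whether minor GCs dominate the suspension time.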

Not surprisingly, the generational heap does not provide a silver-bullet solution to the garbage-collection problem. The optimal configuration is often a compromise, with an adequately sized young generation to avoid overly long minor GCs, and a concurrent GC in the old generation to deal with prematurely tenured objects.

The Case for a Non-generational Heap

The Oracle HotSpot JVM uses a generational heap exclusively, while Oracle JRockit also supports a non-generational heap. IBM WebSphere defaults to a non-generational heap and recommends that JVMs smaller than 100 MB always use one. This makes sense, because a generational GC and the associated copy collection carry a certain overhead in terms of both CPU and memory.

If your application is designed for pure throughput and the number of temporary objects is relatively small, a non-generational GC has advantages. A full parallel GC has a better tradeoff in terms of CPU usage if you don't care about the response time of a single transaction. On the other hand, if the number of temporary objects is relatively small, a concurrent GC in a non-generational heap might do the job with less suspension time than a generational GC. Only a thorough performance test can determine this for sure.

Improving Allocation Performance

Two factors have a negative impact on allocation speed: fragmentation and concurrency. We have already dealt with fragmentation. Concurrency's problem is that all threads in the JVM share memory resources, and all memory allocations must be synchronized. When many threads try to allocate simultaneously, this quickly becomes a choke point. The solution to this is thread-local allocation.

Each thread receives a small, but exclusive, memory allotment where objects are allocated without the need for synchronization. This increases concurrency and the speed of application execution. (It's important that this not be confused with the heap area for thread-local variables, where allocated objects are accessible by all threads.)
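The idea can be sketched with a toy allocator in which a shared arena hands out private chunks and each thread then allocates inside its own chunk by bumping a pointer. Everything here is an assumption made for illustration: the class `TlhSketch`, the 1024-byte chunk size, and the `sharedOps` counter are hypothetical, and a real JVM does this in native code on raw memory.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Toy model of thread-local allocation: a shared arena hands out private chunks. */
class TlhSketch {
    static final int CHUNK_SIZE = 1024;                  // hypothetical chunk size in "bytes"
    final AtomicInteger sharedTop = new AtomicInteger(); // contended pointer into the shared arena
    final AtomicInteger sharedOps = new AtomicInteger(); // counts synchronized operations
    final int capacity;
    /** Per-thread state: index 0 is the next free offset, index 1 is the end of the chunk. */
    private final ThreadLocal<int[]> local = ThreadLocal.withInitial(() -> new int[] {0, 0});

    TlhSketch(int capacity) { this.capacity = capacity; }

    /** Returns the start offset of the allocated block, or -1 if the arena is exhausted. */
    int allocate(int size) {
        if (size > CHUNK_SIZE) {
            throw new IllegalArgumentException("large objects are not modelled");
        }
        int[] buf = local.get();
        if (buf[0] + size > buf[1]) {      // private chunk exhausted: fetch a new one
            sharedOps.incrementAndGet();
            int start = sharedTop.getAndAdd(CHUNK_SIZE);
            if (start + CHUNK_SIZE > capacity) {
                return -1;
            }
            buf[0] = start;                // any remainder of the old chunk is wasted
            buf[1] = start + CHUNK_SIZE;
        }
        int addr = buf[0];
        buf[0] += size;                    // bump the pointer, no synchronization needed
        return addr;
    }

    public static void main(String[] args) throws InterruptedException {
        TlhSketch heap = new TlhSketch(1 << 20);
        Thread[] threads = new Thread[4];
        for (int t = 0; t < threads.length; t++) {
            threads[t] = new Thread(() -> {
                for (int i = 0; i < 1000; i++) {
                    heap.allocate(16);
                }
            });
            threads[t].start();
        }
        for (Thread t : threads) {
            t.join();
        }
        // prints "allocations=4000 sharedOps=64"
        System.out.println("allocations=4000 sharedOps=" + heap.sharedOps.get());
    }
}
```

In this model only one allocation in 64 touches the shared pointer. The price is visible in the refill branch: whatever is left of a chunk when it is replaced stays unused.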

To accommodate a large number of threads, a single thread-local heap (TLH) can be quite small. The TLH is not treated as a special heap area; it is usually part of the young generation, which creates its own problems.

A generational heap with TLH requires a larger young generation than without a TLH. The same number of objects simply uses more space. On the other hand, a non-generational heap with active TLH is likely to become more fragmented and will need more-frequent compaction.
