| Johannes Bräuer Guide for Cloud Foundry | Jürgen Etzlstorfer Guide for OpenShift |
| --- | --- |
| Part 1: Fearless Monolith to Microservices Migration – A guided journey | |
| Part 2: Set up TicketMonster on Cloud Foundry | Part 2: Set up TicketMonster on OpenShift |
| Part 3: Drop TicketMonster’s legacy User interface | Part 3: Drop TicketMonster’s legacy User Interface |
| Part 4: How to identify your first Microservice? | |
| Part 5: The Microservice and its Domain Model | |
| Part 6: Release the Microservice | Part 6: Release the Microservice |

In the previous article of this blog series, we decoupled the UI from the monolith so that the monolith and the UI are two separately maintainable, deployable, and testable artifacts. However, decoupling parts from an existing application in production can carry a high risk. As mentioned in the previous article, it is not recommended to release the new UI to all users at once, since there might be unforeseen side effects.

In this article, we focus on how to deploy the new UI component to a restricted user group only, instead of hitting it with 100 % of the production traffic right away. This lets us investigate whether the new architecture affects our application negatively and come up with counter-strategies early. To that end, we will learn how to leverage the concepts of dark launches and canary releases to mitigate the risk of failures and to enable a fearless go-live of new services in production. Furthermore, since the UI is still part of the monolith code base, we will drop this legacy code from the monolith.

To follow this part of the blog post series, we need three sub-projects available on GitHub:

- tm-ui-v2

- backend-v1

- load-generator (optional)

Dark launch of the User Interface

Let’s start with making the decoupled UI from the previous article available to our users. As said, we don’t want to release the decoupled version of the UI all at once to the public, but instead we want to release it to a smaller group of users first to test its functionality and stability.

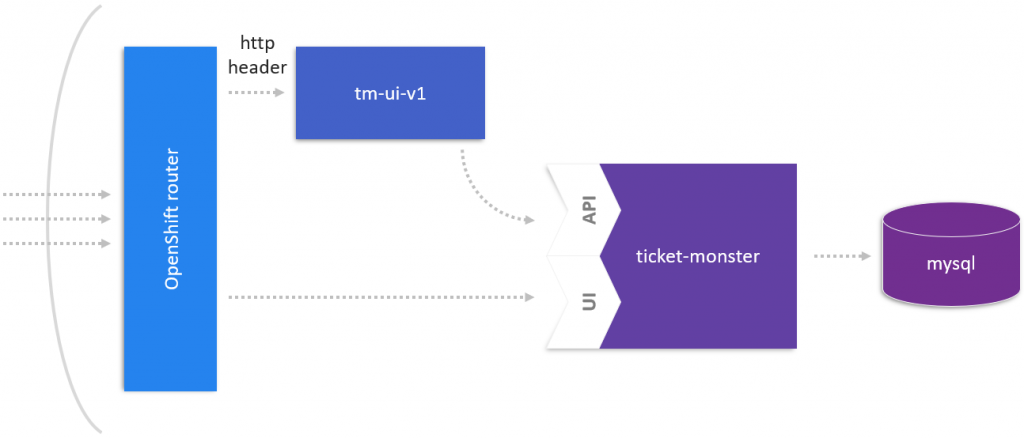

To enable a dark launch, i.e., a launch to a specifically selected group of users (for example, internal users only), we have to edit the HAproxy-config.template of our OpenShift router component. The detailed steps can be found in our GitHub repository. In short, we add a section to the configuration that evaluates a special HTTP header and routes matching requests to annotated routes in OpenShift. This way, we can control which HTTP requests are rerouted. In our showcase, we reroute all incoming requests that carry the special header “Cbr-Header: tm-ui-v1” to the decoupled user interface.

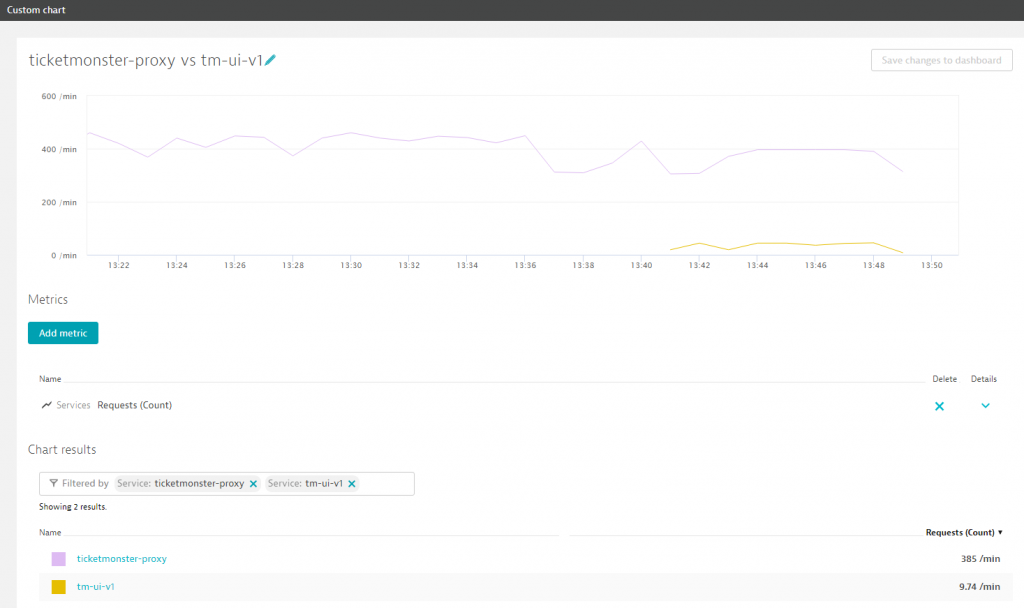
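The header-based routing can also be checked from the command line by sending one request with and one without the special header. The following is a minimal sketch, not part of the original setup: the header name and value are the ones from our showcase, while the `TM_URL` variable and the `fetch_status` helper are hypothetical names, because the host of the route is not given here.

```shell
#!/bin/sh
# Sketch: send one request with and one without the dark-launch header.
# The header "Cbr-Header: tm-ui-v1" comes from the article; TM_URL and
# fetch_status are hypothetical names introduced for this example.

# fetch_status URL [HEADER_VALUE]
# Prints the HTTP status code of a GET request. If HEADER_VALUE is given,
# the request carries "Cbr-Header: HEADER_VALUE".
fetch_status() {
  url="$1"
  version="${2:-}"
  if [ -n "$version" ]; then
    curl -s -o /dev/null -w '%{http_code}' -H "Cbr-Header: $version" "$url"
  else
    curl -s -o /dev/null -w '%{http_code}' "$url"
  fi
}

# Only talk to the network when a target URL is provided.
if [ -n "${TM_URL:-}" ]; then
  echo "without header: $(fetch_status "$TM_URL")"
  echo "with header:    $(fetch_status "$TM_URL" tm-ui-v1)"
else
  echo "Set TM_URL to the URL of your TicketMonster route to run the check."
fi
```

The status code alone does not reveal which version answered. It only confirms that both paths respond, so the actual distribution is still best verified in the monitoring tool.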

Verify the dark launch in Dynatrace

We can easily verify in Dynatrace how many requests are rerouted to the new version. For example, we can create a custom chart that compares the number of requests to ticket-monster with the number of requests to the new tm-ui-v1 service. As can be seen in the following chart, we introduced the dark launch of the decoupled user interface at around 13:41.

From dark launch to canary release

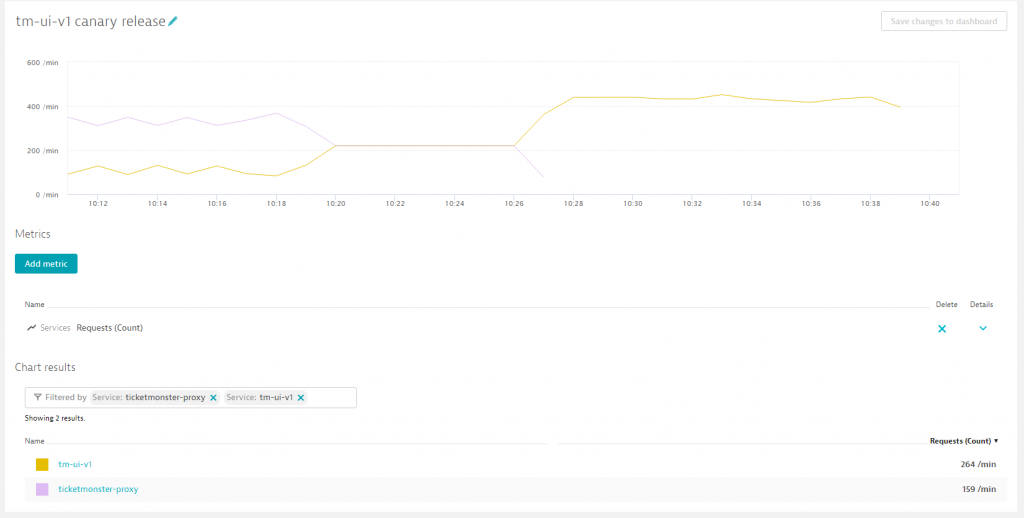

As a next step, we send more and more traffic to the decoupled user interface tm-ui-v1 by employing a canary release mechanism, meaning that we start with a small share of the traffic for the new service and increase it over time. In our showcase, we start by sending 25 % of the traffic to tm-ui-v1 and increase it to 50 % after some time (around 10:19). If we don’t see any errors or anomalies, we can then redirect all production traffic to the new service (around 10:26), as seen in the following image.

During this period, we also verify the overall performance and error rate of our application. As soon as we are confident in what we have achieved so far, we can start cleaning up the code base of the monolith and remove all legacy UI code.

Shrink monolith by cleaning up the code base

To shrink the monolith, we have to get rid of the legacy UI code, which is now maintained and deployed in its own service. We can essentially delete all client-facing code (in the webapp folder) from our repository. In our showcase, we find everything prepared in a new “backend-v1” folder. The result is a more compact version of our monolith without any user-interface-related code. We can now deploy this new version of the monolith, which we will call “backend-v1” going forward, since it is not a complete monolith anymore. We will configure backend-v1 to communicate with the same database as the monolith, since we don’t want to duplicate the datastore.

At this point, backend-v1 has no client-facing UI, since all traffic is still sent to the monolith. Therefore, we also have to release a new version of the TicketMonster UI that talks to backend-v1. We are now moving a lot of parts in our application at the same time. As we have seen with the dark launch, we need strategies to safely release new versions to our customers.

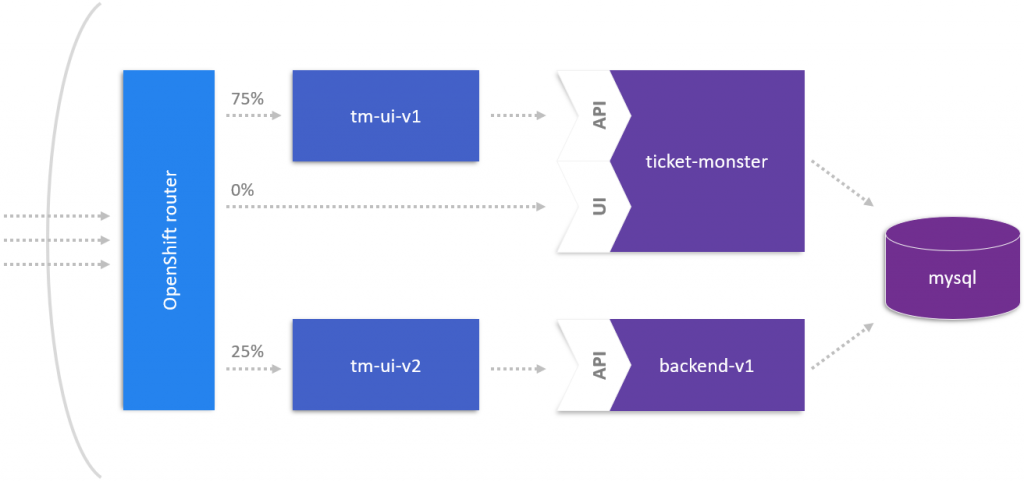

Canary release as a deployment strategy

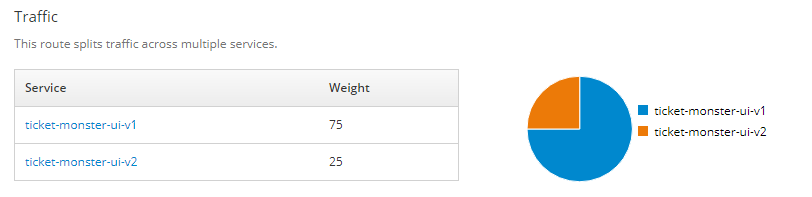

Canary releases are a suitable deployment strategy if we want to control how much production traffic hits the original service and how much hits a new version of it. OpenShift has built-in features that we can leverage to redirect a small part of the traffic to a new version of a service, and that give us fine-grained control over how to increase this share over time until the new version is available to all our users. As seen in the following image, we can control how the load is distributed across different services. For example, we can send 75 % of the traffic to the decoupled user interface tm-ui-v1 and the other 25 % to the new tm-ui-v2, which communicates with backend-v1. Furthermore, we can hide other versions of our services from the public by not exposing them.
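To make the arithmetic behind such a split explicit, here is a small sketch that turns backend weights into traffic percentages. It assumes that the weights are relative to each other, i.e., that each backend receives its weight divided by the sum of all weights, which is how the OpenShift documentation describes weighted route backends as far as we know. The `share` helper and the variable names are hypothetical.

```shell
#!/bin/sh
# Sketch: translate relative backend weights into traffic percentages.
# Assumption: a backend's share is its weight divided by the sum of all
# weights. "share" is a hypothetical helper for this example.

# share WEIGHT TOTAL
# Prints the integer percentage (rounded down) that WEIGHT is of TOTAL.
share() {
  weight="$1"
  total="$2"
  if [ "$total" -eq 0 ]; then
    echo 0
    return
  fi
  echo $(( weight * 100 / total ))
}

w_v1=75
w_v2=25
total=$(( w_v1 + w_v2 ))

echo "tm-ui-v1: $(share "$w_v1" "$total") %"
echo "tm-ui-v2: $(share "$w_v2" "$total") %"
```

Under this assumption, weights of 3 and 1 would produce the same 75/25 split as weights of 75 and 25. Using values that add up to 100 simply makes the configuration easier to read.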

We assign different weights to the services in our route, in our case 75 % to ticket-monster-ui-v1 and 25 % to ticket-monster-ui-v2.

oc set route-backends production ticket-monster-ui-v1=75 ticket-monster-ui-v2=25

Once we decide to increase the load, we simply adjust the route in OpenShift to send the majority of the traffic to ticket-monster-ui-v2:

oc set route-backends production ticket-monster-ui-v1=25 ticket-monster-ui-v2=75
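The two commands above can be generalized into a stepwise ramp-up. The following is a sketch under stated assumptions: the route and service names are taken from the commands above, whereas the step sizes, the final step to 100 %, the `set_canary_weight` helper, and the `DRY_RUN` switch are illustrative additions. By default the script only prints the `oc` commands instead of executing them.

```shell
#!/bin/sh
# Sketch: shift traffic from ticket-monster-ui-v1 to ticket-monster-ui-v2
# in steps. Route and service names come from the article; step sizes,
# set_canary_weight, and DRY_RUN are assumptions for illustration.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the oc commands, 0 = run them

# set_canary_weight PERCENT
# Gives PERCENT of the traffic to v2 and the remainder to v1.
set_canary_weight() {
  v2="$1"
  # Accept only whole numbers between 0 and 100.
  case "$v2" in
    ''|*[!0-9]*) echo "percent must be a number: $v2" >&2; return 1 ;;
  esac
  if [ "$v2" -gt 100 ]; then
    echo "percent must not exceed 100: $v2" >&2
    return 1
  fi
  v1=$(( 100 - v2 ))
  if [ "$DRY_RUN" = "1" ]; then
    echo "oc set route-backends production ticket-monster-ui-v1=$v1 ticket-monster-ui-v2=$v2"
  else
    oc set route-backends production "ticket-monster-ui-v1=$v1" "ticket-monster-ui-v2=$v2"
  fi
}

for percent in 25 75 100; do
  set_canary_weight "$percent"
  # In a real rollout, pause here and check error rate and response time
  # before moving on to the next step.
done
```

Keeping the weight change in one function makes a rollback a single call, for example `set_canary_weight 0` to send all traffic back to ticket-monster-ui-v1.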

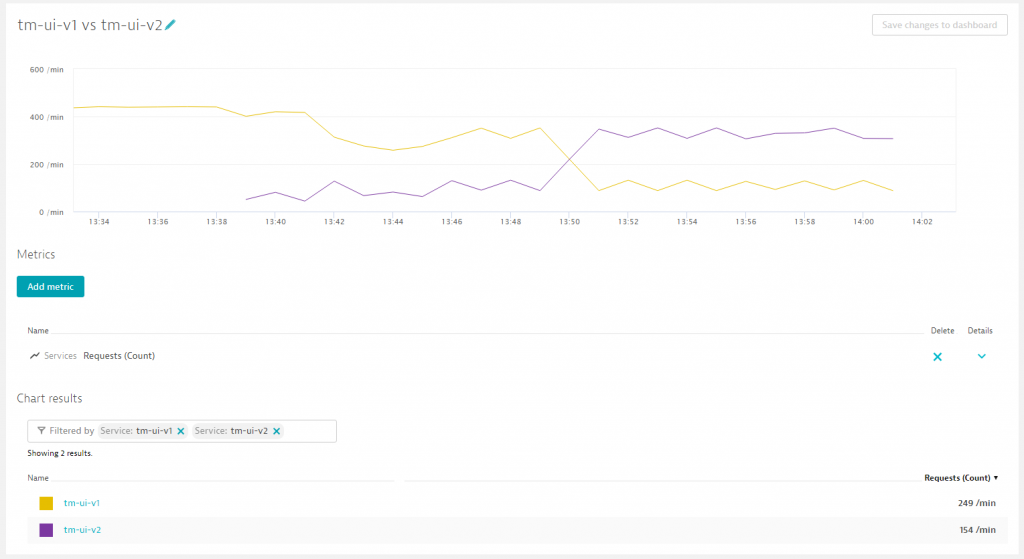

Again, we can verify in Dynatrace how the new distribution looks:

As we can see, we enabled the canary release of tm-ui-v2 at around 13:39 with 25 % of the traffic and increased its share to 75 % of the overall traffic at around 13:50.

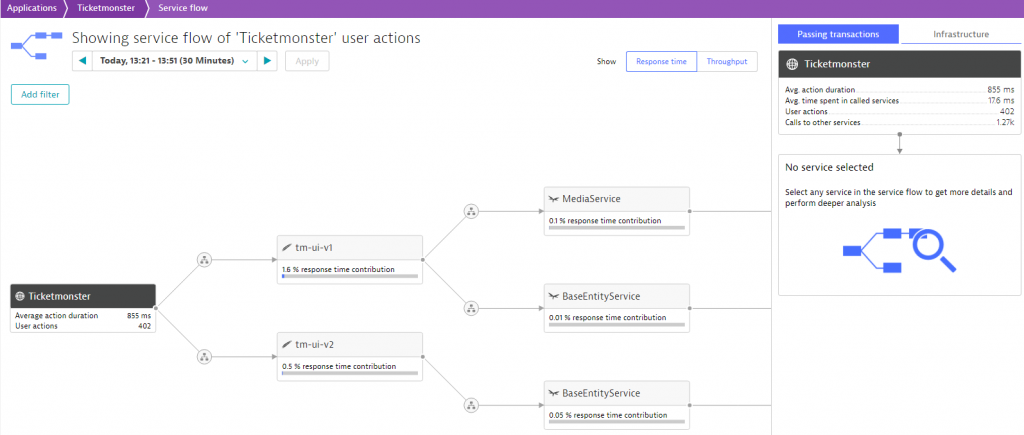

Additionally, we also want to verify the service flow of our new ticket-monster-ui-v2 to make sure it does not communicate with the monolith, but with the newly created backend-v1:

Furthermore, we can take a look at the backtrace of our database to investigate which services are querying the database service. Dynatrace gives us detailed information about the requests that were made and the services they originate from. As can be seen in the screenshot, the ticketmonster database serves as the datastore for both the monolith (ticket-monster-mysql) and the new backend-v1. Each of those services has its own UI that is exposed to the user.

Summary & Outlook

Summarizing, we have investigated how we can drop the legacy code from our monolithic application and how we can fearlessly release new versions of our services by employing suitable deployment mechanisms such as dark launches and canary releases.

In the next article, we will take a closer look at how we can identify and extract our first microservice. Stay tuned!
