Logs are a core pillar of observability, and in many organizations they serve at least two purposes. They drive day-to-day troubleshooting, root cause analysis, security investigations, and many other use cases.

Logs also need to be available for compliance audits, business intelligence (BI) analysis, and traditional Security Information and Event Management (SIEM) solutions. The challenge is meeting both needs without relying on bolted-on forwarders or rigid, costly integrations.

With the new Dynatrace OpenPipeline® capability, you can define exactly how your organization forwards logs, paired with flexible and unified log collection and processing.

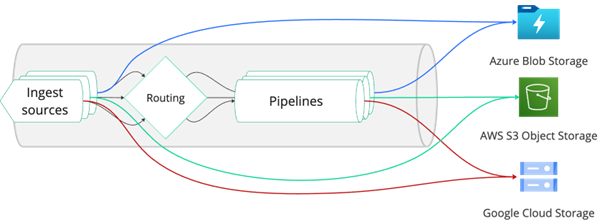

Figure 1. OpenPipeline provides rich configuration and customization options for each forwarding setup.

Centralized log collection and processing

OneAgent, Cribl, OpenTelemetry, AWS Firehose, journald, Logstash, Fluent Bit: have you heard of any of these tools? Maybe you're using all of them at the same time.

With Dynatrace, you can use one or all of these tools simultaneously. Dynatrace OpenPipeline lets you process and transform logs the same way regardless of their source, and move seamlessly from one shipping method to another. Our customer United Wholesale Mortgage did exactly this, consolidating its tools while moving logs off Splunk.

Forwarding logs unprocessed or processed

Depending on your industry or geography, you might be required to retain logs in an unprocessed state on third-party-managed storage, much as you might have used tape backups in the old days. With Dynatrace OpenPipeline, you can configure forwarding before processing (forward-before-process) to retain log events in their original, unaltered state.

Alternatively, you can first send log events to your defined processing pipeline, extract metrics from them or convert them into metrics, and drop unnecessary details. Only then do you decide what to forward to your object storage after processing (forward-after-process).

The last step in the pipeline is to decide whether to route log events to a Dynatrace Grail® bucket for retention or drop them.
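To make the ordering of these stages concrete, here is a minimal conceptual sketch in Python. It is not OpenPipeline's API or configuration syntax: the function `run_pipeline`, the sink names, and the example processing step are all hypothetical, and metric extraction is left out for brevity. It only shows why a forward-before-process copy stays unaltered while the forward-after-process copy and the Grail copy reflect processing.

```python
from __future__ import annotations

import copy
from typing import Callable, Optional

# Hypothetical model, not OpenPipeline's API: a log event is a plain dict.
LogEvent = dict


def run_pipeline(
    events: list[LogEvent],
    process: Callable[[LogEvent], Optional[LogEvent]],
    keep_in_grail: Callable[[LogEvent], bool],
) -> dict[str, list[LogEvent]]:
    """Route each event through the stages described in the text."""
    sinks: dict[str, list[LogEvent]] = {
        "archive_raw": [],        # forward-before-process destination
        "archive_processed": [],  # forward-after-process destination
        "grail": [],              # retained in a Grail bucket
    }
    for event in events:
        # Forward-before-process: archive an unaltered copy first.
        sinks["archive_raw"].append(copy.deepcopy(event))
        # Processing works on its own copy, so the raw archive stays untouched.
        processed = process(copy.deepcopy(event))
        if processed is None:  # the processing step dropped the event
            continue
        # Forward-after-process: archive the transformed event.
        sinks["archive_processed"].append(processed)
        # Last step: retain in Grail or drop.
        if keep_in_grail(processed):
            sinks["grail"].append(processed)
    return sinks


def drop_debug_and_strip(event: LogEvent) -> Optional[LogEvent]:
    """Hypothetical processing step: drop DEBUG events, strip a noisy field."""
    if event.get("loglevel") == "DEBUG":
        return None
    event.pop("user_agent", None)
    return event


events = [
    {"content": "GET /login 200", "loglevel": "INFO", "user_agent": "curl/8.0"},
    {"content": "cache miss", "loglevel": "DEBUG"},
]
sinks = run_pipeline(events, drop_debug_and_strip, keep_in_grail=lambda e: True)
print({name: len(evts) for name, evts in sinks.items()})
# {'archive_raw': 2, 'archive_processed': 1, 'grail': 1}
```

Deep-copying before processing is the design choice that matters here: it is what keeps the raw archive identical to what arrived, even when a processing step mutates or drops events.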

Overcome vendor lock-in with precise, open forwarding

Many log management solutions archive logs in proprietary formats that tie you to their tooling. With Dynatrace and log forwarding for data archiving, you can avoid that lock-in.

The Dynatrace OpenPipeline log forwarding capability delivers log events as gzip-compressed NDJSON (newline-delimited JSON) archives (.json.gz), a standardized format. You can configure details such as segmentation and filename prefixes.
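Because NDJSON is simply one JSON object per line, any consumer can read these archives with standard tooling. The Python sketch below writes and reads gzip-compressed NDJSON using only the standard library. The segmentation rule (a fixed number of events per file) and the `<prefix>-<segment>.json.gz` naming scheme are hypothetical illustrations of the configurable options mentioned above, not the naming Dynatrace actually uses.

```python
import gzip
import json
import tempfile
from pathlib import Path


def write_segments(events, out_dir, prefix="logs", max_events_per_file=1000):
    """Write events as gzip-compressed NDJSON files of at most max_events_per_file lines."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for start in range(0, len(events), max_events_per_file):
        # Hypothetical naming scheme: <prefix>-<segment number>.json.gz
        path = out_dir / f"{prefix}-{start // max_events_per_file:05d}.json.gz"
        with gzip.open(path, "wt", encoding="utf-8") as f:
            for event in events[start:start + max_events_per_file]:
                # json.dumps escapes embedded newlines, so each event stays on one line.
                f.write(json.dumps(event) + "\n")
        paths.append(path)
    return paths


def read_archive(path):
    """Yield one decoded log event per non-empty line of a .json.gz file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield json.loads(line)


# Usage: 2,500 events split into segments of 1,000, then read back intact.
events = [{"content": f"request {i}\nmulti-line body", "seq": i} for i in range(2500)]
with tempfile.TemporaryDirectory() as tmp:
    paths = write_segments(events, tmp, prefix="payments")
    print([p.name for p in paths])
    # ['payments-00000.json.gz', 'payments-00001.json.gz', 'payments-00002.json.gz']
    restored = [e for p in paths for e in read_archive(p)]
    print(restored == events)  # True
```

Multi-line log content survives the round trip because JSON string escaping turns literal newlines into `\n`, which is what keeps one event per line.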

Once these archives reside in destinations such as Amazon S3 or Azure Blob Storage, third-party solutions such as Microsoft Sentinel, Snowflake, or TIBCO Spotfire can use them for use cases your observability platform doesn't cover.

Cost-effective long-term retention for logs

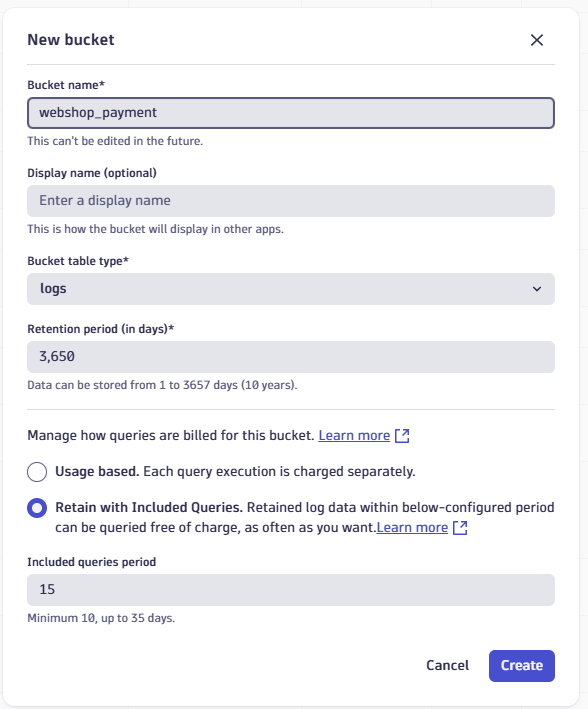

Dynatrace Grail offers cost-effective log retention for up to 10 years. Retained logs are accessible at any time, as fast as on the first day after ingestion, and Grail supports a daily log ingest volume of 1 PB per tenant.

Many of our customers already retain years’ worth of logs today, always accessible without the headaches of re-ingestion, re-indexing, or storage tiering and scaling concepts.

Still, there are use cases where aged logs become low-value, low-touch data.

You may want to keep certain log events available in Grail for the first 60 or 90 days for troubleshooting purposes, and then store a copy in an archive for years.

Log archives exported as NDJSON further increase cost-effectiveness, and because they use the standardized .json.gz format, they're ready to hand over to other teams or parties for investigation. They're especially valuable for use cases like:

- Reviewing access logs during a security incident post-mortem

- Providing transaction logs to fulfill a court order

- Reviewing file access audit trails
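For a post-mortem like the first use case, an investigator typically needs only the events from one time window and one log source. A minimal Python sketch of that filtering over a single .json.gz archive follows. It assumes each event carries an ISO 8601 timestamp with a UTC offset under a field named `timestamp` and a `source` field; both field names and the sample events are made up for illustration and are not taken from the Dynatrace archive schema.

```python
import gzip
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def events_in_window(path, start, end, ts_field="timestamp", predicate=None):
    """Yield archived events with start <= timestamp < end that match predicate.

    Assumes a hypothetical ts_field holding an ISO 8601 timestamp with a UTC offset.
    """
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue
            event = json.loads(line)
            ts = datetime.fromisoformat(event[ts_field])
            if start <= ts < end and (predicate is None or predicate(event)):
                yield event


# Usage: build a tiny sample archive, then pull access-log lines from an incident window.
sample = [
    {"timestamp": "2025-03-01T09:58:00+00:00", "source": "access", "content": "GET /admin 403"},
    {"timestamp": "2025-03-01T10:05:00+00:00", "source": "access", "content": "GET /admin 200"},
    {"timestamp": "2025-03-01T10:07:00+00:00", "source": "app", "content": "config reloaded"},
    {"timestamp": "2025-03-01T11:30:00+00:00", "source": "access", "content": "GET / 200"},
]
with tempfile.TemporaryDirectory() as tmp:
    archive = Path(tmp) / "access-00000.json.gz"
    with gzip.open(archive, "wt", encoding="utf-8") as f:
        f.writelines(json.dumps(e) + "\n" for e in sample)
    hits = list(events_in_window(
        archive,
        start=datetime(2025, 3, 1, 10, 0, tzinfo=timezone.utc),
        end=datetime(2025, 3, 1, 11, 0, tzinfo=timezone.utc),
        predicate=lambda e: e["source"] == "access",
    ))
print([e["content"] for e in hits])  # ['GET /admin 200']
```

Streaming line by line keeps memory use flat, which matters when an archive holds months of events; the window bounds must be timezone-aware to compare with the offset-bearing timestamps.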

Tool consolidation with OpenPipeline and log forwarding

Log archiving also offers an integration path for solutions that don't yet integrate seamlessly with Dynatrace.

More importantly, OpenPipeline provides you with processing and transformation capabilities, volume control, and a reliable cadence for transmitting your log events.

You no longer need custom scripts to split and ship your logs, and you don't have to worry about large log events being truncated: Dynatrace supports log events of up to 10 MB.

Forget the retransmission headaches that custom log shippers cause when a connection breaks; retransmission is built natively into Dynatrace components such as OneAgent and ActiveGate.

Start leveraging OpenPipeline and log forwarding today and retire your third-party forwarding tool stack.

Register for the Preview today: Log Forwarding to Cloud Storage

Planned General Availability: Calendar Quarter 2 / 2026
