Over the last couple of weeks, a Dynatrace customer at a top US-based insurance company has allowed me to share several internal success stories from applying Dynatrace to different Ops use cases. If you want to catch up on some of them, check out Supporting IT help desk, Supporting Disaster Recovery, or the Making Davis® more Human story.

This latest use case is about the business impact of the IBM Notes email application crashing on end-user workstations. Over the past weeks, some employees had reported sporadic slowness and crashes. Support tickets opened with IBM led to several attempted fixes, but none of them solved the problem: Notes kept crashing, and employees started to feel the impact on their productivity.

Step 1: Rolling out OneAgents on Windows Workstations

Thanks to his reputation within the company as “the guy who solves IT Operations issues with Dynatrace”, the customer was contacted by Chris, one of the email admins. Chris asked whether it would be possible to install the OneAgent on Greg’s machine, which kept reporting the crash. All the customer asked for was a quick remote session to Greg’s desktop. Three minutes later, he had the OneAgent installed and assigned to the “End-User-PC” host group, which he uses to organize monitoring data for the end-user machines that are now actively monitored with Dynatrace. Chris was pleasantly surprised that this was all it took to get full-stack monitoring: no additional configuration, no plugins, no firewalls to open. Just run the default OneAgent installer!

Step 2: Dynatrace auto-detects problem and root cause

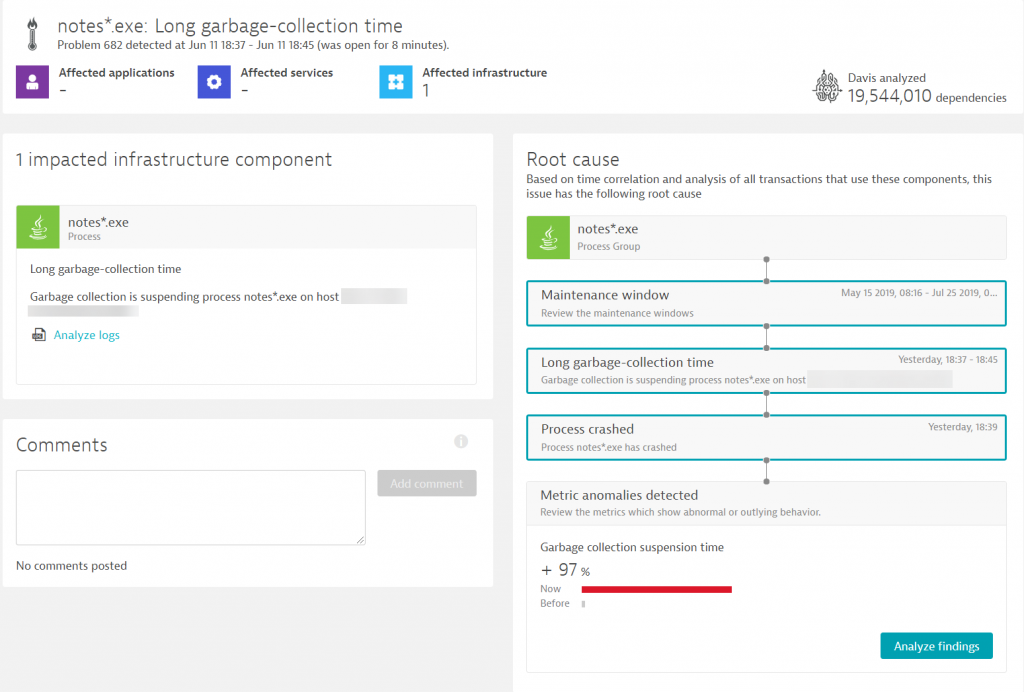

That same day at 6:39PM, Dynatrace auto-detected the crash of notes.exe including the actual root cause of the issue: high garbage collection that ultimately led to the crash! The following is the screenshot of the Dynatrace Problem Ticket:

Step 3: Supporting Evidence to IT Admins

In addition to the information in the problem ticket, Dynatrace provides full insight into what happened on that host, including memory usage overall and per process, and it also captured the full crash log of the app. Here are a couple of screenshots from Dynatrace views that the customer took back to Chris, the admin, to discuss the findings:

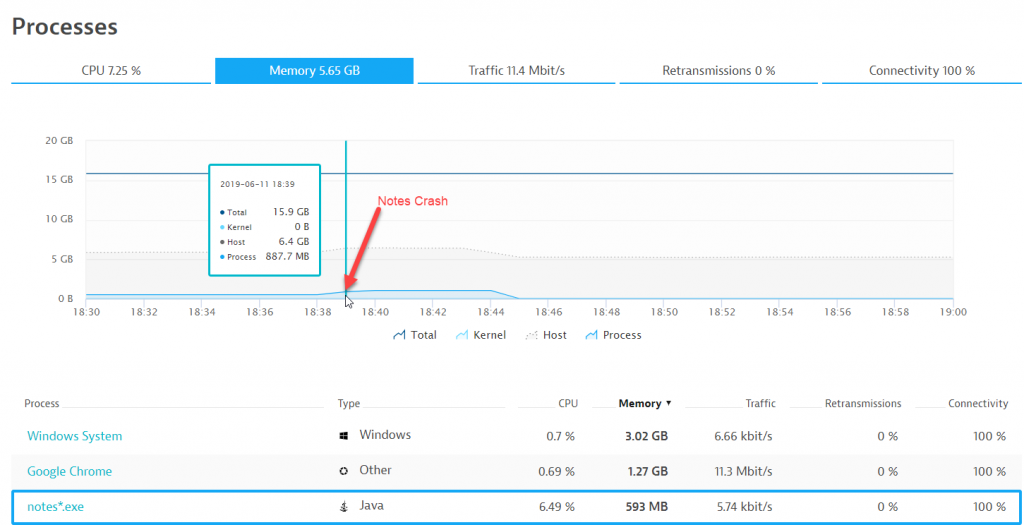

1: Process Memory not exhausting available host memory

Dynatrace provides resource monitoring for the host, including each individual process. The following chart shows an interesting fact: the Notes processes peaked at 593 MB even though plenty of memory was still available on the host. The “process memory ceiling” must therefore come from a JVM setting rather than being enforced by the OS:

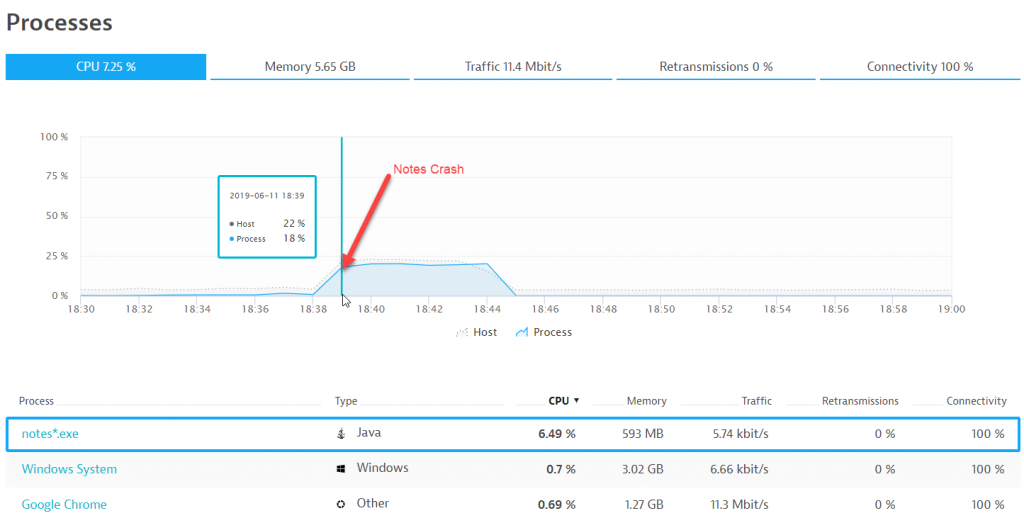
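To make the point about the heap ceiling concrete, here is a minimal Java sketch (a generic illustration, not something taken from IBM Notes or Dynatrace) that prints a JVM's heap usage alongside its configured maximum. The class name `HeapCeiling` and the helper `usedPercent` are hypothetical. The maximum it reports is derived from the JVM's own settings such as `-Xmx`, independent of how much RAM the host has free.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

/**
 * Minimal sketch (hypothetical names): show that a JVM's heap ceiling comes
 * from the JVM's own configuration (e.g. -Xmx), not from free host RAM.
 */
public class HeapCeiling {

    /** Percentage of the configured maximum heap that is currently used. */
    static double usedPercent(long usedBytes, long maxBytes) {
        if (maxBytes <= 0) {
            throw new IllegalArgumentException("max heap is undefined");
        }
        return 100.0 * usedBytes / maxBytes;
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        long mb = 1024L * 1024L;
        long max = heap.getMax(); // -1 if the JVM does not define a maximum
        System.out.printf("heap used: %d MB, committed: %d MB%n",
                heap.getUsed() / mb, heap.getCommitted() / mb);
        if (max > 0) {
            System.out.printf("heap max (JVM ceiling): %d MB, used: %.1f%%%n",
                    max / mb, usedPercent(heap.getUsed(), max));
        } else {
            System.out.println("heap max is undefined for this JVM");
        }
    }
}
```

Running it as `java -Xmx256m HeapCeiling` and then `java -Xmx1g HeapCeiling` on the same machine should report different maximums even though the host's free memory has not changed. Some JVMs report a maximum slightly below the `-Xmx` value.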

2: Garbage Collection Details highlight 100% Process Suspension & CPU spike

Dynatrace automatically provides insights into all runtime metrics. In our case, we get to see all relevant JVM memory, GC, thread, and other metrics. The metrics clearly show that the Garbage Collector kicked in and suspended all active threads while trying to free up memory, as used memory reached the available limit. The suspension time metric is also the one Dynatrace had already pointed out in the problem ticket, in the section “Metric anomalies detected”.

We can also see the impact of garbage collection on CPU, because the GC needs CPU time to find memory it can clean up. In Dynatrace we can observe that notes.exe consumes a large part of one of the host's four cores: 18% of total CPU corresponds to roughly 72% of a single core (18% × 4):

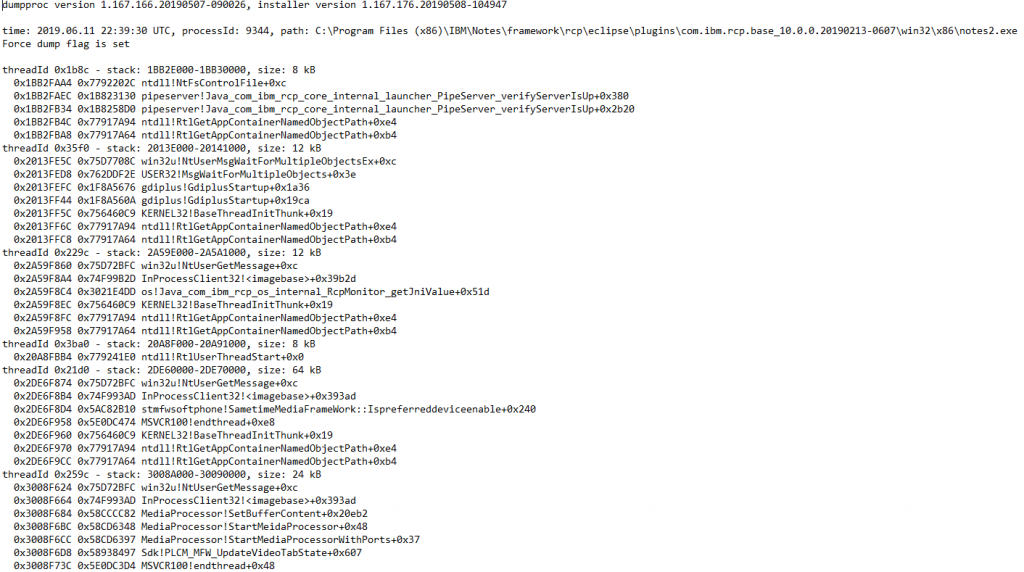
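The idea behind a suspension metric can be approximated with the standard `java.lang.management` API. The sketch below samples the accumulated collection time of all garbage collectors twice and expresses the difference as a percentage of elapsed wall-clock time. It is a rough stand-in, not Dynatrace's metric or its anomaly detection: the class and method names and the 25% threshold are hypothetical, and on collectors with concurrent phases the reported collection time is not purely stop-the-world time.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/**
 * Minimal sketch (hypothetical names and threshold): estimate the share of
 * wall-clock time spent in GC between two samples. Not Dynatrace's method.
 */
public class GcSuspension {

    /** Sum of accumulated GC time (ms) over all collectors that report it. */
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime(); // -1 if this collector does not report it
            if (t > 0) {
                total += t;
            }
        }
        return total;
    }

    /** GC time as a percentage of elapsed wall-clock time, capped at 100. */
    static double suspensionPercent(long gcMillisDelta, long wallMillisDelta) {
        if (wallMillisDelta <= 0) {
            throw new IllegalArgumentException("wall-clock interval must be positive");
        }
        return Math.min(100.0, 100.0 * gcMillisDelta / wallMillisDelta);
    }

    public static void main(String[] args) throws InterruptedException {
        final double thresholdPercent = 25.0; // hypothetical alert threshold
        long gcBefore = totalGcMillis();
        long wallBefore = System.nanoTime();

        // Allocate short-lived arrays; the JIT may optimize some of this away,
        // so the measured GC time can legitimately be zero.
        long checksum = 0;
        for (int i = 0; i < 200_000; i++) {
            byte[] chunk = new byte[1024];
            checksum += chunk.length;
        }
        Thread.sleep(100);

        long gcDelta = totalGcMillis() - gcBefore;
        long wallDelta = (System.nanoTime() - wallBefore) / 1_000_000L;
        double pct = suspensionPercent(gcDelta, wallDelta);
        System.out.printf("allocated %d bytes; GC time %.1f%% of %d ms%s%n",
                checksum, pct, wallDelta, pct > thresholdPercent ? " -> ALERT" : "");
    }
}
```

Capping at 100% keeps the ratio meaningful when several collectors' accumulated times overlap within the same interval.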

3: Crash Log with more indication on crash

Dynatrace has the built-in capability to capture process crash logs, which is particularly useful for in-depth crash analysis. In our case, the crash log adds further information because it shows which threads were active and what they were doing. We can, for instance, see a thread that was processing media content and pushing data into a memory buffer. We also get to see some internal IBM Java native calls, which is definitely useful in case someone from IBM needs to take a closer look at this.
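Thread states and stack frames are a large part of what makes a crash log useful. As a rough, in-process illustration (not a crash log, and not how Dynatrace captures one), the standard `Thread.getAllStackTraces()` call lists every live thread with its current stack; the sketch below prints each thread's name, state, and top frame. `ThreadSnapshot` and `describe` are hypothetical names.

```java
import java.util.Map;

/**
 * Minimal sketch (hypothetical names): list live threads with their state and
 * top stack frame. An in-process snapshot, not a JVM crash log.
 */
public class ThreadSnapshot {

    /** Formats one thread as "name [STATE] at topFrame" (or "no frames"). */
    static String describe(String name, Thread.State state, StackTraceElement[] frames) {
        String top = frames.length > 0 ? frames[0].toString() : "no frames";
        return name + " [" + state + "] at " + top;
    }

    public static void main(String[] args) {
        for (Map.Entry<Thread, StackTraceElement[]> e : Thread.getAllStackTraces().entrySet()) {
            Thread t = e.getKey();
            System.out.println(describe(t.getName(), t.getState(), e.getValue()));
        }
    }
}
```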

Step 4: Analyze and Fix the Issue

Chris, the admin, was initially a bit surprised by the findings. He was especially surprised that the JVM consumed only 593 MB, as he had recently increased the JVM memory setting to follow IBM's best practices, which suggested a much bigger heap size. After double-checking the setting on that host, he found that his recent change had been reverted to the initial low value without his knowledge! Changing it back to the higher setting fixed the problem. Now it’s up to Chris to make sure nobody reverts that change, as that would lead to the same issue again.
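One way to guard against the setting silently reverting again is a simple drift check that compares the observed maximum heap with the expected value. The sketch below is hypothetical throughout: the 2048 MB expectation and the 90% tolerance are placeholders (the article does not state the size IBM recommended), and `main` checks its own JVM only for demonstration, whereas a real check would use values collected for the Notes JVM, for example from monitoring data.

```java
/**
 * Minimal sketch of a drift check for a reverted JVM heap setting.
 * Expected size and tolerance are hypothetical placeholders.
 */
public class HeapDriftCheck {

    /**
     * True if the observed max heap is below the tolerated fraction of the
     * expected value. A tolerance below 1.0 allows for JVMs that report a
     * maximum slightly under the configured -Xmx.
     */
    static boolean looksReverted(long observedMaxBytes, long expectedMaxBytes, double tolerance) {
        return observedMaxBytes < expectedMaxBytes * tolerance;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long expected = 2048L * mb;                       // hypothetical "much bigger" heap
        long observed = Runtime.getRuntime().maxMemory(); // this JVM, for demonstration only
        if (looksReverted(observed, expected, 0.9)) {
            System.out.printf("WARNING: max heap %d MB is below the expected %d MB%n",
                    observed / mb, expected / mb);
        } else {
            System.out.printf("max heap %d MB is within tolerance of the expected %d MB%n",
                    observed / mb, expected / mb);
        }
    }
}
```

With the 593 MB observed in this story and a 90% tolerance, the check would flag the reverted setting against any expected heap above roughly 660 MB.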

Conclusion: The Power of the Dynatrace OneAgent

As always, thanks to the customers for sharing these stories and showing us additional use cases of how Dynatrace makes the work of IT Operations easier. I want to end with a paragraph from the email in which this customer relayed the story to me, as I think it hits the nail on the head:

With the power of Dynatrace, we were able to set up the Dynatrace OneAgent and let it do its thing, trusting 100% that it will detect and alert any issues while capturing all metrics on the host so it can be analyzed later on by staff. And how great is that AI Engine, that it can look at the issue and provide us with what the underlying issue was – lacking the needed amount of JVM Memory. The only true “work” we did was install the OneAgent!
