What is Hadoop?

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models.

Dynatrace monitors and analyzes the activity of your Hadoop processes, providing Hadoop-specific metrics alongside all infrastructure measurements.

Enable Hadoop monitoring globally

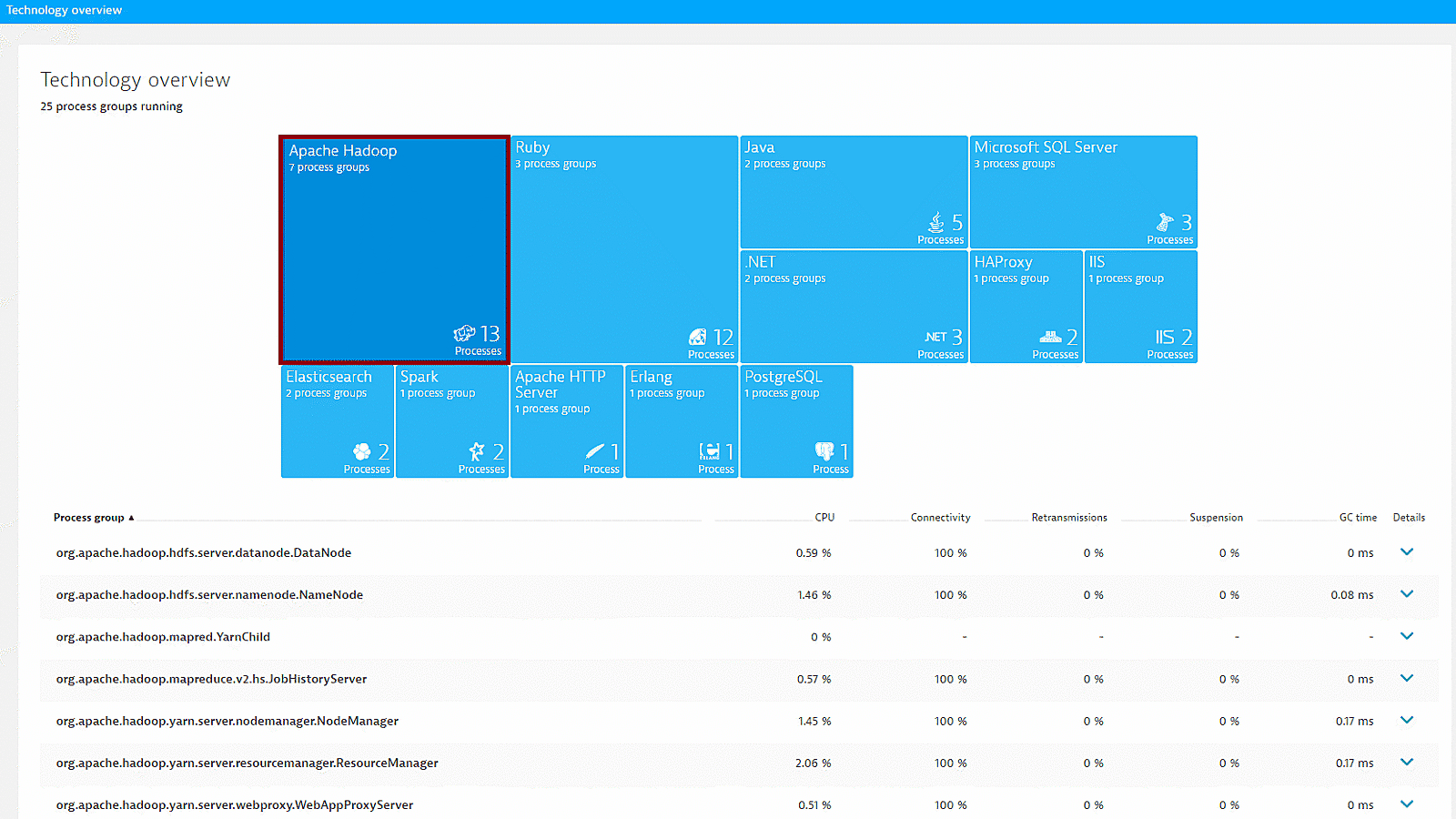

Dynatrace's Hadoop server monitoring provides a high-level overview of the main Hadoop components within your cluster.

Hadoop-specific metrics are presented alongside all infrastructure measurements, providing you with in-depth Hadoop performance analysis of both current and historical data. See enhanced insights for components like HDFS and MapReduce.

With Hadoop monitoring enabled globally, Dynatrace automatically collects Hadoop metrics whenever a new host running Hadoop is detected in your environment.

Start monitoring your Hadoop components in under 5 minutes!

In under five minutes, Dynatrace detects your Hadoop processes and shows metrics like CPU, connectivity, retransmissions, suspension rate, and garbage collection time.

- Manual configuration of your monitoring setup is no longer necessary.

- Auto-detection starts monitoring new hosts running Hadoop.

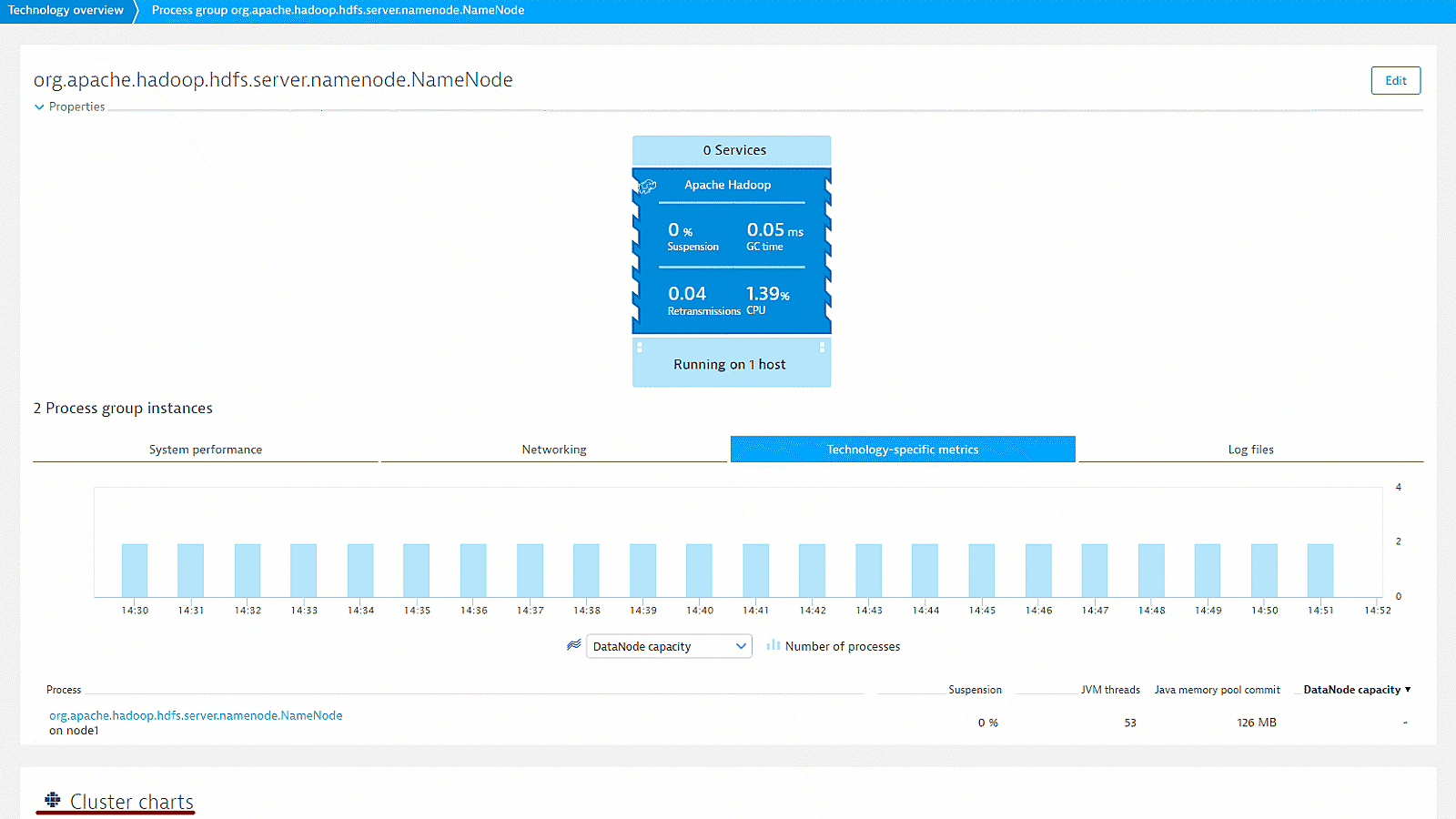

Enhanced HDFS insights at a glance

Cluster charts and metrics show you key performance data about your Hadoop processes. Hadoop NameNode pages provide details about your HDFS capacity, usage, blocks, cache, files, and data-node health.

For the full list of NameNode and DataNode metrics, please visit our detailed blog post about Hadoop monitoring.
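To make the HDFS figures above more concrete, here is a minimal sketch of how capacity, usage, block, and file counts can be read from the JSON that a NameNode publishes on its own `/jmx` HTTP endpoint. This illustrates Hadoop's built-in metrics interface, not how Dynatrace collects data internally. The sample payload and the `summarize_hdfs` helper are hypothetical, and the attribute names assume the `FSNamesystem` bean as exposed by recent Hadoop releases.

```python
import json

# Hypothetical sample shaped like a NameNode /jmx response; all values are made up.
SAMPLE_JMX = json.loads("""
{
  "beans": [
    {
      "name": "Hadoop:service=NameNode,name=FSNamesystem",
      "CapacityTotal": 1000,
      "CapacityUsed": 250,
      "CapacityRemaining": 700,
      "BlocksTotal": 42,
      "FilesTotal": 17
    }
  ]
}
""")

FS_BEAN = "Hadoop:service=NameNode,name=FSNamesystem"


def summarize_hdfs(jmx_payload):
    """Return capacity, usage, block, and file counts from a parsed /jmx payload."""
    for bean in jmx_payload.get("beans", []):
        if bean.get("name") == FS_BEAN:
            total = bean["CapacityTotal"]
            used = bean["CapacityUsed"]
            return {
                "capacity_bytes": total,
                "used_bytes": used,
                # Guard against an empty cluster reporting zero capacity.
                "used_percent": round(100.0 * used / total, 1) if total else 0.0,
                "blocks": bean["BlocksTotal"],
                "files": bean["FilesTotal"],
            }
    raise ValueError("FSNamesystem bean not found in payload")


if __name__ == "__main__":
    print(summarize_hdfs(SAMPLE_JMX))
```

Against a live cluster, the payload would typically come from a URL such as `http://<namenode-host>:9870/jmx` (the host is a placeholder, and the port assumes Hadoop 3 defaults).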

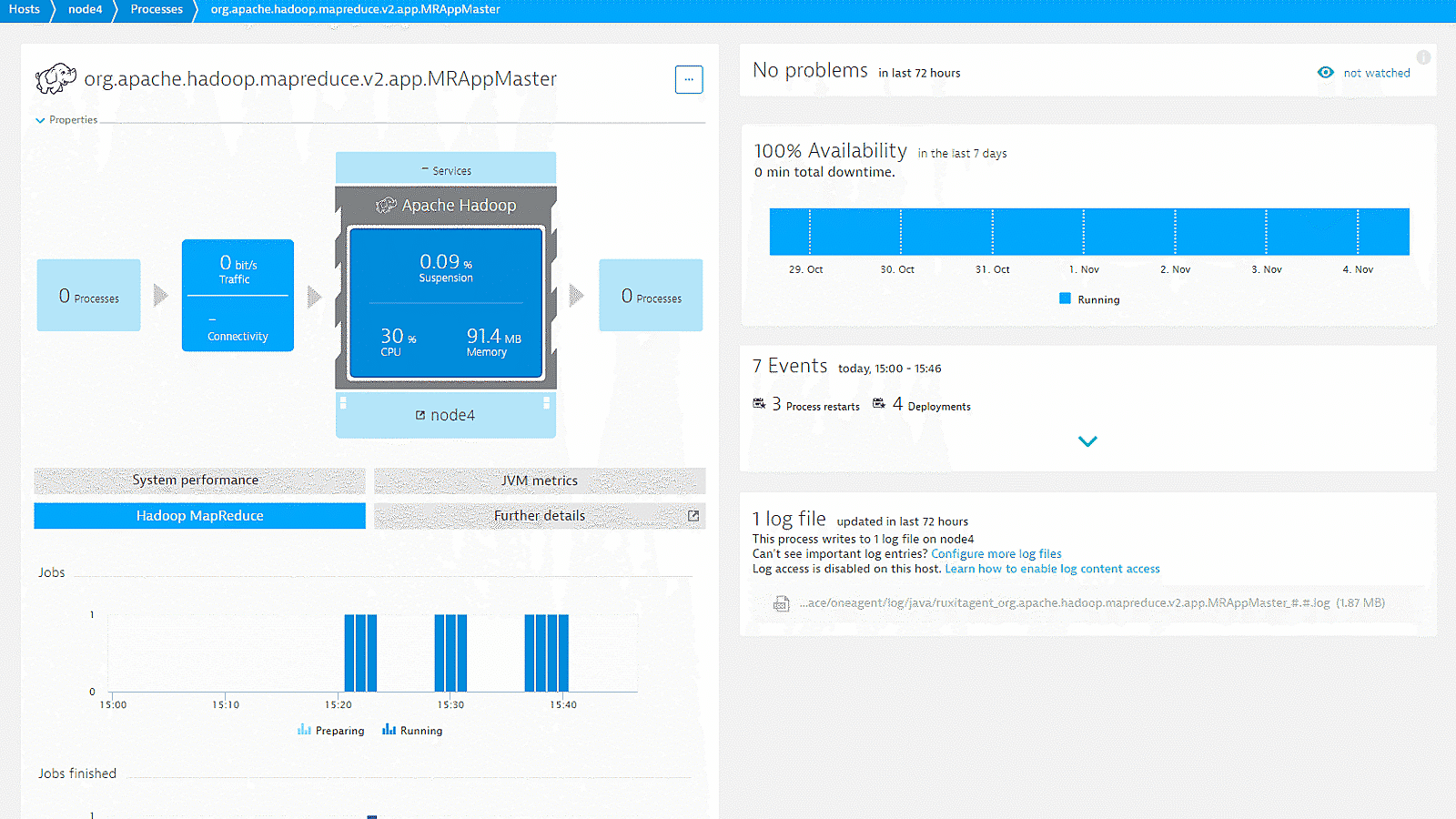

Get further in-depth Hadoop process metrics

Dynatrace provides relevant performance metrics about the following Hadoop processes:

- ResourceManager

  - nodes

  - applications

  - memory

  - cores

  - containers

- MRAppMaster

- NodeManager

For the full list of provided metrics, please visit our detailed blog post about Hadoop monitoring.
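As a rough illustration of the ResourceManager figures listed above (nodes, applications, memory, cores, containers), the sketch below summarizes a payload shaped like the response of YARN's cluster metrics REST resource (`/ws/v1/cluster/metrics`). It is not a description of Dynatrace's own collection mechanism. The sample values and the `summarize_yarn` and `percent` helpers are hypothetical.

```python
import json

# Hypothetical sample shaped like a YARN /ws/v1/cluster/metrics response; values are made up.
SAMPLE_CLUSTER_METRICS = json.loads("""
{
  "clusterMetrics": {
    "activeNodes": 4,
    "appsRunning": 3,
    "totalMB": 16384,
    "allocatedMB": 4096,
    "totalVirtualCores": 32,
    "allocatedVirtualCores": 8,
    "containersAllocated": 6
  }
}
""")


def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal, or 0.0 if whole is 0."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def summarize_yarn(payload):
    """Condense cluster metrics into node, application, memory, core, and container figures."""
    m = payload["clusterMetrics"]
    return {
        "nodes": m["activeNodes"],
        "applications_running": m["appsRunning"],
        "memory_used_percent": percent(m["allocatedMB"], m["totalMB"]),
        "cores_used_percent": percent(m["allocatedVirtualCores"], m["totalVirtualCores"]),
        "containers": m["containersAllocated"],
    }


if __name__ == "__main__":
    print(summarize_yarn(SAMPLE_CLUSTER_METRICS))
```

On a real cluster, the ResourceManager web application usually serves this resource on port 8088 unless configured otherwise.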

Start monitoring your Hadoop processes with Dynatrace!

Sign up, deploy our agent, and get unmatched insights out of the box.