Response times are in many, if not most, cases the basis for performance analysis. When they are within expected boundaries, everything is OK. When they get too high, we start optimizing our applications.

So response times play a central role in performance monitoring and analysis. In virtualized and cloud environments they are the most accurate performance metric you can get. Very often, however, people measure and interpret response times the wrong way. This is more than reason enough to discuss the topic of response time measurements and how to interpret them. Therefore, I will discuss typical measurement approaches, the related misunderstandings and how to improve measurement approaches.

Averaging information away

When measuring response times, we cannot look at every single measurement. Even in very small production systems the number of transactions is unmanageable. Therefore, measurements are aggregated over a certain timeframe. Depending on the monitoring configuration this might be seconds, minutes or even hours.

While this aggregation helps us to easily understand response times in large volume systems, it also means that we are losing information. The most common approach to measurement aggregation is using averages. This means the collected measurements are averaged and we are working with the average instead of the real values.

The problem with averages is that in many cases they do not reflect what is happening in the real world. There are two main reasons why working with averages leads to wrong or misleading results.

When measurements are highly volatile, the average is not representative of the response times actually measured. If our measurements range from 1 to 4 seconds, the average might be around 2 seconds, which certainly does not represent what many of our users perceive.

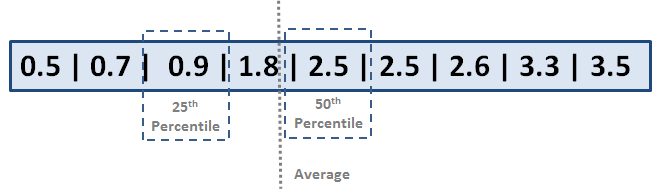

So averages provide only little insight into real-world performance. Instead of working with averages, you should use percentiles. If you talk to people who have been working in the performance space for some time, they will tell you that the only reliable metrics to work with are percentiles. In contrast to averages, percentiles tell you what share of your users saw response times at or below a certain threshold. If the 50th percentile, for example, is 2.5 seconds, this means that the response times for 50 percent of your users were less than or equal to 2.5 seconds. As you can see, this approach is far closer to reality than using averages.
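To make the difference concrete, here is a minimal sketch that computes the average and two percentiles for the same set of measurements. The sample values are made up for illustration, and the nearest-rank method used here is only one of several common percentile definitions; a monitoring tool may use a different one.

```typescript
// Nearest-rank percentile: the smallest measurement such that at least
// p percent of all measurements are less than or equal to it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no measurements");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}

function mean(values: number[]): number {
  if (values.length === 0) throw new Error("no measurements");
  let sum = 0;
  for (const v of values) sum += v;
  return sum / values.length;
}

// Hypothetical response times in seconds: most requests are fast, a few are slow.
const responseTimes = [1.0, 1.0, 1.1, 1.2, 1.2, 1.3, 1.5, 3.8, 4.0, 4.0];

console.log("mean:", mean(responseTimes).toFixed(2)); // 2.01
console.log("p50: ", percentile(responseTimes, 50)); // 1.2
console.log("p90: ", percentile(responseTimes, 90)); // 4
```

In this sample the average of about 2 seconds describes nobody: half of the requests finished within 1.2 seconds, while the slowest ones took 4 seconds.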

The only potential downside with percentiles is that they require more data to be stored than averages do. While average calculation only requires the sum and count of all measurements, percentiles require a whole range of measurement values as their calculation is more complex. This is also the reason why not all performance management tools support them.
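The storage difference can be sketched as follows. Both class names are hypothetical and not taken from any particular tool: the average needs two numbers of state regardless of traffic, while this simple percentile implementation keeps every value of the aggregation interval.

```typescript
// Average: only the running sum and the count are stored.
class RunningAverage {
  private sum = 0;
  private count = 0;

  add(value: number): void {
    this.sum += value;
    this.count += 1;
  }

  value(): number {
    return this.count === 0 ? NaN : this.sum / this.count;
  }
}

// Percentiles: every measurement of the interval is retained, so memory
// grows with the number of transactions.
class PercentileWindow {
  private values: number[] = [];

  add(value: number): void {
    this.values.push(value);
  }

  storedValues(): number {
    return this.values.length;
  }

  // Nearest-rank percentile over the retained values.
  percentile(p: number): number {
    if (this.values.length === 0) throw new Error("no measurements");
    const sorted = this.values.slice().sort((a, b) => a - b);
    const rank = Math.ceil((p * sorted.length) / 100);
    return sorted[rank - 1];
  }
}

const average = new RunningAverage();
const interval = new PercentileWindow();
for (const seconds of [1.0, 1.2, 1.5, 4.0]) {
  average.add(seconds);
  interval.add(seconds);
}

console.log(average.value(), interval.percentile(50), interval.storedValues());
```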

Putting all in a box

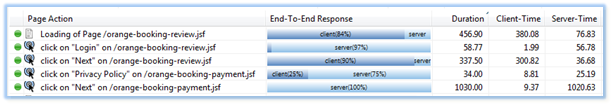

Another important question when aggregating data is which data you use as the basis of your aggregations. If you mix together data for different transaction types like the start page, a search and a credit card validation, the results will be of little value because you are comparing apples and oranges. So in addition to ensuring that you are working with percentiles, it is also necessary to split transaction types properly so that the data that is the basis for your calculations fits together.

The concept of splitting transactions by their business function is often referred to as business transaction management (BTM). While the field of BTM is wide, the basic idea is to distinguish transactions in an application by logical parameters like what they do or where they come from. An example would be a “put into cart” transaction or the requests of a certain user.

Only a combination of both approaches ensures that the response times you measure are a solid basis for performance analysis.
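A minimal sketch of combining the two ideas: measurements are first split by transaction type and a percentile is then computed per type. The transaction names and timings are invented for illustration, and the nearest-rank percentile is an assumption rather than a prescribed method.

```typescript
interface Measurement {
  transaction: string; // logical transaction type, e.g. "search"
  seconds: number;     // measured response time
}

// Nearest-rank percentile over a list of values.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no measurements");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}

// Group measurements by transaction type, then compute the percentile per group.
function percentileByTransaction(
  measurements: Measurement[],
  p: number
): Record<string, number> {
  const groups: Record<string, number[]> = {};
  for (const m of measurements) {
    if (groups[m.transaction] === undefined) groups[m.transaction] = [];
    groups[m.transaction].push(m.seconds);
  }
  const result: Record<string, number> = {};
  for (const name of Object.keys(groups)) {
    result[name] = percentile(groups[name], p);
  }
  return result;
}

// Hypothetical data for three very different transaction types.
const measurements: Measurement[] = [
  { transaction: "start page", seconds: 0.2 },
  { transaction: "start page", seconds: 0.3 },
  { transaction: "start page", seconds: 0.3 },
  { transaction: "start page", seconds: 0.4 },
  { transaction: "search", seconds: 1.0 },
  { transaction: "search", seconds: 1.2 },
  { transaction: "search", seconds: 1.5 },
  { transaction: "search", seconds: 2.0 },
  { transaction: "credit card validation", seconds: 3.0 },
  { transaction: "credit card validation", seconds: 3.5 },
  { transaction: "credit card validation", seconds: 4.0 },
  { transaction: "credit card validation", seconds: 5.0 },
];

const mixedMedian = percentile(measurements.map((m) => m.seconds), 50);
const medianByType = percentileByTransaction(measurements, 50);

console.log("all mixed:", mixedMedian);
console.log("per type: ", medianByType);
```

The mixed median of this sample happens to match the search transactions and says nothing about the start page or the credit card validation, which is exactly the information the per-type view preserves.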

Far from the real world

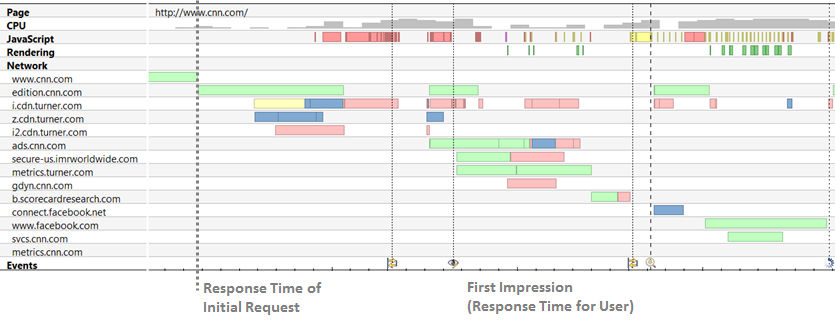

Another point to consider with response times is where they are measured. Most people measure response times at the server-side and implicitly assume that they represent what real users see. While server-side response times are down to 500 milliseconds and everyone thinks everything is fine, users might experience response times of several seconds.

The reason is that server-side response times don’t take a lot of factors influencing end-user response times into account. First of all server-side measurements neglect network transfer time to the end users. This easily adds half a second or more to your response times.

At the same time, server-side response times often only measure the initial document sent to the user. All images, JavaScript and CSS files that are required to render a page properly are not included in this calculation at all. Experts like Steve Souders even say that only 10 percent of the overall response time is influenced by the server side. Even if we consider this an extreme scenario, it is obvious that basing performance management solely on server-side metrics does not provide a solid basis for understanding end-user performance.

The situation gets even worse with JavaScript-heavy Web 2.0 applications where a great portion of the application logic is executed within the browser. In this case, server-side metrics cannot be taken as representative for end-user performance at all.

Not measuring what you want to know

A common approach to solve this problem is to use synthetic transaction monitoring. This approach often claims to be “close to the end-user”. Commercial providers offer a huge number of locations around the world from where you can test the performance of pre-defined transactions. While this provides better insight into what the perceived performance of end-users is, it is not the full truth.

The most important thing to understand is how these measurements are collected. There are two approaches to collect this data: via emulators or real browsers. From my very personal perspective, any approach that does not use real browsers should be avoided as real browsers are also what your users use. They are the only way to get accurate measurements.

The issue with using synthetic transactions for performance measurement is that it is not about real users. Your synthetic transactions might run pretty fast, but that guy with a slow internet connection who just wants to book a $5,000 holiday (ok, a rare case) still sees 10 second response times. Is it the fault of your application? No. Do you care? Yes, because this is your business. Additionally, synthetic transaction monitoring cannot monitor all of your transactions. You cannot really book a holiday every couple of minutes, so in the end only a portion of your transactions is covered by your monitoring.

This does not mean that there is no value in using synthetic transactions. They are great for being informed about availability or network problems that might affect your users, but they do not represent what your users actually see. As a consequence, they do not serve as a solid basis for performance improvements.

Measuring at the End-User Level

The only way to get real user performance metrics is to measure from within the users’ browser. There are two approaches to do this. You can use a tool like the free “Dynatrace Ajax” Edition, which uses a browser plug-in to collect performance data, or inject JavaScript code to get performance metrics. The W3C now also has a number of standardization activities for browser performance APIs. The Navigation Timing Specification is already supported by recent browsers, as is the Resource Timing Specification. Open-source implementations like Boomerang provide a convenient way to access performance data within the browser. Products like “Dynatrace UEM” go further by providing a highly scalable backend and full integration into your server-side systems.

The main idea is to inject custom JavaScript code which captures timing information like the beginning of a request, DOM ready and fully loaded. While these events are sufficient for “classic” web applications they are not enough for Web 2.0 applications which execute a lot of client-side code. In this case, the JavaScript code has to be instrumented as well.
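As a rough sketch of what such injected code can derive, the function below turns the timestamps of a Navigation Timing entry into the three events mentioned above. The field names `startTime`, `responseStart`, `domContentLoadedEventEnd` and `loadEventEnd` come from the browser's `PerformanceNavigationTiming` interface; the `NavigationTimingSample` and `PageTimings` types, the `summarize` function and the sample values are assumptions made for this illustration.

```typescript
// Subset of the fields of a PerformanceNavigationTiming entry.
// All values are milliseconds relative to the start of the navigation.
interface NavigationTimingSample {
  startTime: number;
  responseStart: number;
  domContentLoadedEventEnd: number;
  loadEventEnd: number;
}

interface PageTimings {
  timeToFirstByteMs: number; // request start until the first response byte
  domReadyMs: number;        // until DOMContentLoaded handlers have finished
  fullyLoadedMs: number;     // until the load event has finished
}

function summarize(entry: NavigationTimingSample): PageTimings {
  return {
    timeToFirstByteMs: entry.responseStart - entry.startTime,
    domReadyMs: entry.domContentLoadedEventEnd - entry.startTime,
    fullyLoadedMs: entry.loadEventEnd - entry.startTime,
  };
}

// Hypothetical values as a browser might report them for one page load.
const sample: NavigationTimingSample = {
  startTime: 0,
  responseStart: 480,
  domContentLoadedEventEnd: 1900,
  loadEventEnd: 3200,
};

console.log(summarize(sample));

// In a browser, the entry would be read after the load event has finished
// (loadEventEnd is 0 before that), for example:
//   const [nav] = performance.getEntriesByType("navigation");
//   if (nav) console.log(summarize(nav as PerformanceNavigationTiming));
```

Note that these timestamps end with the load event. Work that client-side code does afterwards is not covered, which is why the JavaScript itself has to be instrumented for applications that run a lot of logic in the browser.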

Is it enough to measure on the client-side?

The question now is whether it is enough to measure performance from the end-user perspective. If we know how our web application performs for each user we have enough information to see whether an application is slow or fast. If we then combine this data with information like geo location, browser, and connection speed we know for which users a problem exists. So from a pure monitoring perspective, this is enough.

In case of problems, however, we want to go beyond monitoring. Monitoring only tells us that we have a problem but does not help in finding the cause of the problem. Especially when we measure end-user performance our information is less rich compared to development-centric approaches. We could still use a development-focused tool like “Dynatrace Ajax” Edition for production troubleshooting. This however requires installing custom software on an end user’s machine. While this might be an option for SaaS environments this is not the case in a typical eCommerce scenario.

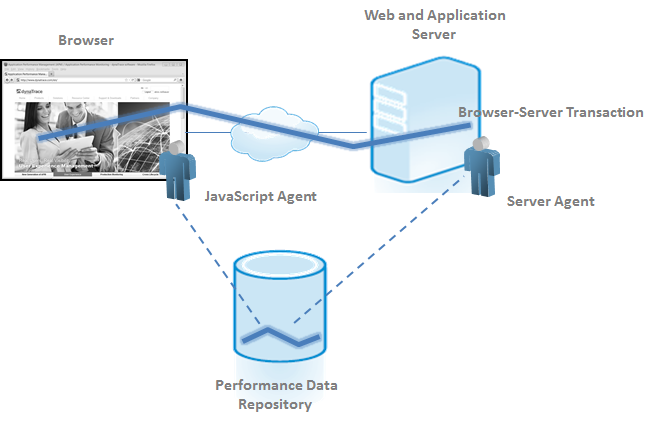

The only way to gain this level of insight for diagnostic purposes is to collect information from the browser as well as the server side to get a holistic view of application performance. As discussed, averaged or otherwise aggregated metrics do not provide the insight we need in this case. Instead of aggregated information, we need to be able to identify the individual requests of a user’s browser and relate them to the corresponding server-side requests.
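One way to picture this correlation is a join on a shared request identifier. The sketch below is purely illustrative: the record shapes, the `requestId` field and the `correlate` function are hypothetical and do not describe how any specific product implements it.

```typescript
interface BrowserRecord {
  requestId: string; // identifier shared between browser and server (assumed)
  user: string;
  endUserMs: number; // response time as measured in the browser
}

interface ServerRecord {
  requestId: string;
  serverMs: number;  // response time as measured on the server
}

interface CorrelatedRequest {
  requestId: string;
  user: string;
  endUserMs: number;
  serverMs: number;
  outsideServerMs: number; // network, rendering and client-side execution
}

// Relate each browser-side measurement to its server-side counterpart.
// Browser records without a matching server record are skipped.
function correlate(
  browser: BrowserRecord[],
  server: ServerRecord[]
): CorrelatedRequest[] {
  const serverById: Record<string, ServerRecord> = {};
  for (const s of server) serverById[s.requestId] = s;

  const result: CorrelatedRequest[] = [];
  for (const b of browser) {
    const s = serverById[b.requestId];
    if (s === undefined) continue;
    result.push({
      requestId: b.requestId,
      user: b.user,
      endUserMs: b.endUserMs,
      serverMs: s.serverMs,
      outsideServerMs: b.endUserMs - s.serverMs,
    });
  }
  return result;
}

// Hypothetical data: the server looks fine for both requests,
// but one user still waits more than three seconds.
const browserData: BrowserRecord[] = [
  { requestId: "r1", user: "alice", endUserMs: 3200 },
  { requestId: "r2", user: "bob", endUserMs: 900 },
  { requestId: "r3", user: "carol", endUserMs: 1500 },
];
const serverData: ServerRecord[] = [
  { requestId: "r1", serverMs: 500 },
  { requestId: "r2", serverMs: 450 },
];

const correlated = correlate(browserData, serverData);
console.log(correlated);
```

With per-request data like this, a slow page can be traced to either the server-side portion or the time spent outside the server, which aggregated metrics cannot show.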

The figure in this section shows an architecture based on (and abstracted from) Dynatrace UEM which provides this functionality. It shows the combination of browser and server-side data capturing on a transactional basis and a centralized performance repository for analysis.

Conclusion

There are many places and ways to measure response times. Depending on what we want to achieve, each of them provides more or less accurate data. For the analysis of server-side problems, measuring at the server side is enough. However, we have to be aware that this does not reflect the response times of our end users. It is a purely technical metric for optimizing the way we create content and service requests. The prerequisite for meaningful measurements is that we separate different transaction types properly.

Measurements from anything but the end-user’s perspective can only be used to optimize your technical infrastructure and only indirectly the performance of end users. Only performance measurements in the browser enable you to understand and optimize user-perceived performance.
