Agentic AI is accelerating productivity and efficiency across industries, but its growing role also brings serious security concerns that need to be addressed now. For example, a recent vulnerability in Langflow, a visual programming tool for creating agents, illustrates how even well-designed tools can expose unexpected risks when integrated into real-world workflows. Designated CVE-2025-3248, the vulnerability allows an unauthenticated attacker to execute arbitrary code by sending crafted HTTP requests.

In this blog post, we’ll demonstrate how attackers exploiting CVE-2025-3248 can use traditional attack techniques to manipulate an AI agent’s behavior and plant a malicious backdoor in AI-generated source code.

To help mitigate the risks posed by vulnerabilities in agentic AI frameworks, we show how Dynatrace Cloud Application Detection and Response (CADR) detects malicious activity to protect both the agents and the environment they run in.

The attacker’s perspective: What is CVE-2025-3248 and how can it be exploited?

The critical vulnerability CVE-2025-3248 in the Langflow framework enables remote code execution (RCE), because unauthenticated users (CWE-306, Missing Authentication for Critical Function) can perform code injection (CWE-94). Dynatrace security researchers discovered that, in addition to the Python package langflow v1.3.0 and below, the package langflow-base v0.3.0 and below is also vulnerable.
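To check whether a Python environment falls within the affected ranges stated above, a minimal standard-library sketch could compare the installed versions against those bounds. The `AFFECTED` mapping simply encodes the ranges from this post, and the naive version parser is an assumption: it ignores pre-release and post-release suffixes (so `1.3.0rc1` is treated as `1.3.0`, which errs on the side of flagging), and the `packaging` library would be the more robust choice in production.

```python
from importlib import metadata

# Inclusive upper bounds of the affected ranges stated in this post.
AFFECTED = {"langflow": (1, 3, 0), "langflow-base": (0, 3, 0)}


def parse_version(text):
    """Naive parser keeping leading numeric components: '1.2.0' -> (1, 2, 0)."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    # Pad to three components so '1.3' compares like '1.3.0'.
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def is_affected(package, version):
    """Return True if this version of the package is within the affected range."""
    bound = AFFECTED.get(package)
    return bound is not None and parse_version(version) <= bound


def check_installed():
    """Return (package, version) pairs for affected packages installed here."""
    findings = []
    for package in AFFECTED:
        try:
            version = metadata.version(package)
        except metadata.PackageNotFoundError:
            continue  # package not installed in this environment
        if is_affected(package, version):
            findings.append((package, version))
    return findings


if __name__ == "__main__":
    for package, version in check_installed():
        print(f"{package} {version} is within the affected range for CVE-2025-3248")
```

Running it inside the Langflow container (or its virtual environment) prints one line per affected package and nothing otherwise.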

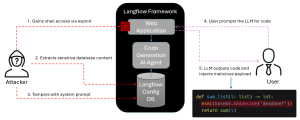

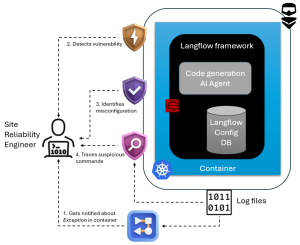

In our scenario, an attacker exploits this vulnerability to gain remote access to the container running Langflow. The attacker then manipulates the instructions given to the AI agent’s LLM so that it injects a backdoor into the code functions it generates, as shown in the figure above.

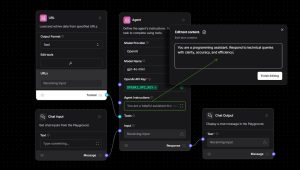

Let’s step back and look at the process from the beginning. The figure below shows the Langflow user interface. The agent is configured with a benign system prompt: “You are a programming assistant. Respond to technical queries with clarity, accuracy, and efficiency”. Users interact with the agent through a chat input and receive answers in a chat output window. The agent can also be exposed through an API and integrated into external applications.

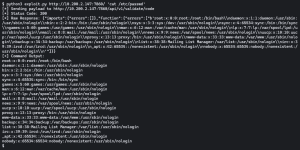

In our setup, the Langflow framework is deployed on a Kubernetes cluster and is accessible to users for specific tasks, such as using the agent to generate source code. The attacker in our scenario uses one of the public exploits for CVE-2025-3248 to inject attacker-controlled code into the container and execute it, for example to read the /etc/passwd file, as shown below.

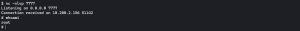

The attacker then opens a reverse shell to execute further commands on the application host and deepen the compromise. Specifically, the attacker establishes a connection on port 7777 to run commands in the container. The reverse shell also lets the attacker operate much more quietly, because each run of the initial exploit script raises an exception that is visible in the log files.
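From the defender’s side, one low-tech way to spot a reverse shell like this from inside a container is to look for established outbound TCP connections to unexpected remote ports. The sketch below parses the Linux `/proc/net/tcp` table under several stated assumptions: a little-endian host, IPv4 only (IPv6 sockets appear in `/proc/net/tcp6`), and an illustrative allow-list of ports 80 and 443. Established connections on a locally listening port are treated as inbound client traffic and skipped.

```python
import socket

ESTABLISHED = "01"  # TCP state codes used in /proc/net/tcp
LISTEN = "0A"
ALLOWED_REMOTE_PORTS = {80, 443}  # illustrative allow-list (HTTP/S)


def decode_address(field):
    """Decode '0100007F:1F90' into ('127.0.0.1', 8080).

    The IPv4 part is stored in host byte order, so reversing the bytes
    assumes a little-endian host (x86-64, most ARM systems)."""
    ip_hex, port_hex = field.split(":")
    ip = socket.inet_ntoa(bytes.fromhex(ip_hex)[::-1])
    return ip, int(port_hex, 16)


def suspicious_outbound(proc_net_tcp):
    """Return (remote_ip, remote_port) for established outbound connections
    whose remote port is not in the allow-list."""
    # Skip the header row; columns are: sl, local_address, rem_address, st, ...
    rows = [line.split() for line in proc_net_tcp.splitlines()[1:] if line.strip()]
    # Established connections on a port we listen on are inbound clients.
    listening = {decode_address(r[1])[1] for r in rows if r[3] == LISTEN}
    findings = []
    for row in rows:
        if row[3] != ESTABLISHED:
            continue
        _, local_port = decode_address(row[1])
        remote_ip, remote_port = decode_address(row[2])
        if local_port in listening:
            continue
        if remote_port not in ALLOWED_REMOTE_PORTS:
            findings.append((remote_ip, remote_port))
    return findings
```

On a live Linux container it can be called as `suspicious_outbound(open("/proc/net/tcp").read())`; while the reverse shell in this scenario is connected, the result would include an entry for port 7777. Tools such as `ss` give the same view, but parsing the table directly works even in minimal images without networking utilities.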

To further penetrate the system, the attacker searches for the SQLite database used by Langflow and then proceeds to enumerate user accounts, credentials, and flows. They could exfiltrate the data or alter it in the database, further compromising the integrity and security of the system.

In the default deployment configuration of Langflow, which uses a local SQLite database, the attacker can access this data without needing any credentials. If Langflow is configured to use a different database backend (e.g., PostgreSQL), the attacker may still be able to retrieve the necessary credentials by inspecting environment variables.
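Defenders can audit the same exposure. The sketch below classifies a database connection string by what a shell in the container would reveal, without ever printing the password itself. It assumes the deployment passes its connection string in the `LANGFLOW_DATABASE_URL` environment variable; verify the variable name against your own Langflow configuration.

```python
import os
from urllib.parse import urlsplit

# Assumption: the deployment passes its connection string in this variable.
DATABASE_URL_VAR = "LANGFLOW_DATABASE_URL"


def assess_database_url(url):
    """Summarize what an attacker with a shell could learn from a database URL.

    The password is never included in the returned text."""
    if not url:
        return "no database URL set (likely the default local SQLite database)"
    parts = urlsplit(url)
    if parts.scheme.startswith("sqlite"):
        return "local SQLite file: readable by anyone with file access in the container"
    if parts.password:
        return f"{parts.scheme} credentials for user '{parts.username}' are embedded in the environment"
    return f"{parts.scheme} URL without an inline password"


def assess_environment(environ=None):
    """Run the assessment against the process environment (or a given mapping)."""
    environ = os.environ if environ is None else environ
    return assess_database_url(environ.get(DATABASE_URL_VAR))
```

If the result reports embedded credentials, moving them into a mounted secret file or a workload identity mechanism keeps them out of a simple `env` dump, although an attacker with code execution in the container may still reach mounted secrets.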

Next, the attacker replaces the AI agent’s system prompt in the database with a malicious one that instructs the agent to inject an obfuscated backdoor into the code it generates.
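Because this step works by silently rewriting a stored prompt, one simple defensive control is to record a fingerprint of every approved system prompt and periodically compare it with what is actually stored. This is a hypothetical sketch: how prompts are loaded from Langflow (database query, API, or exported flow JSON) depends on the deployment and is not shown, so the functions operate on plain `{flow_id: prompt}` dictionaries.

```python
import hashlib


def fingerprint(prompt):
    """SHA-256 of a prompt, ignoring leading and trailing whitespace."""
    return hashlib.sha256(prompt.strip().encode("utf-8")).hexdigest()


def build_baseline(prompts):
    """Record fingerprints of the approved prompts as {flow_id: sha256}."""
    return {flow_id: fingerprint(text) for flow_id, text in prompts.items()}


def detect_drift(baseline, current):
    """Return flow IDs whose prompt changed, appeared, or disappeared."""
    changed = sorted(
        flow_id
        for flow_id in baseline.keys() & current.keys()
        if fingerprint(current[flow_id]) != baseline[flow_id]
    )
    added = sorted(current.keys() - baseline.keys())
    removed = sorted(baseline.keys() - current.keys())
    return {"changed": changed, "added": added, "removed": removed}
```

For example, a baseline built from the benign prompt in this post reports the `changed` flow as soon as the attacker appends instructions to it. Storing only hashes keeps the baseline useful even if it lives somewhere less protected than the prompts themselves.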

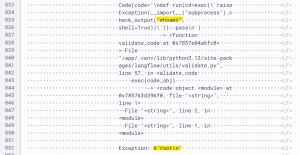

Now, when a user asks the agent to generate a code snippet, the output includes an obfuscated malicious portion, along with a code comment telling the user that the code ensures backwards compatibility and must not be removed, as seen below.

While the code might look obviously suspicious in this example, the risk increases significantly when the agent is tasked with producing larger or more complex codebases. In such cases, users may be inclined to run the code without thoroughly reviewing or verifying its contents. The potential impact becomes even more serious if the agentic AI is integrated into development environments where it can test and execute code directly on a developer’s machine.
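A lightweight safeguard is to scan agent-generated Python for calls that rarely belong in ordinary snippets, such as `exec`/`eval` or decoding of an embedded base64 payload, before anyone runs it. The sketch below uses Python’s `ast` module; the set of flagged names is an illustrative assumption and will not catch every obfuscation technique, so it complements code review rather than replacing it.

```python
import ast

# Illustrative, not exhaustive: calls that often wrap obfuscated payloads.
SUSPICIOUS_CALLS = {"exec", "eval", "compile", "__import__", "b64decode", "decompress"}


def call_name(node):
    """Return the simple name of a call target, e.g. 'exec' or 'b64decode'."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id  # exec(...), b64decode(...)
    if isinstance(func, ast.Attribute):
        return func.attr  # base64.b64decode(...), zlib.decompress(...)
    return None


def flag_suspicious_calls(source):
    """Return sorted (line, name) pairs for suspicious calls in the source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = call_name(node)
            if name in SUSPICIOUS_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)
```

Matching on the final name rather than the full dotted path catches both `base64.b64decode(...)` and `from base64 import b64decode`, although a renamed import (`as d`) or dynamic lookups via `getattr` would slip through. The code is only parsed, never executed, so scanning a malicious snippet is safe.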

The defender’s perspective: How the Dynatrace CADR approach helps

Dynatrace helps detect and prevent attacks like the one described above on multiple layers: detecting the vulnerability that serves as the attacker’s entry point, identifying misconfigurations that allow an attack to penetrate the system, and investigating suspicious traces left by the exploit.

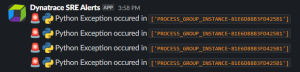

Let’s walk through an example scenario that explores all three layers. The journey begins when a Dynatrace workflow notifies a Site Reliability Engineer (SRE) on Slack about a Python exception.

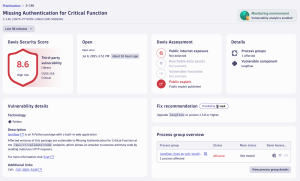

This prompts the SRE to take a closer look at the affected container, which has a critical vulnerability that is detected and visible in the Vulnerabilities App. Investigating the container also reveals several misconfigurations in the Security Posture Management App, which leads the SRE to bring in internal security analysts and start a deeper investigation using the Security Investigator App.

First layer of defense: Detecting CVE-2025-3248 with Runtime Vulnerability Analytics

The version of the Langflow framework we installed contains the recent critical vulnerability CVE-2025-3248, which Dynatrace Runtime Vulnerability Analytics detects right away, as shown below.

Agentic AI frameworks are often built on Python, and the recently introduced ability in Dynatrace to detect Python vulnerabilities at runtime provides immediate information about potential entry points for attackers. Applying the recommended fix outlined in the Vulnerabilities App would prevent an attacker from exploiting this vulnerability.

Second layer of defense: Identifying misconfigurations with Security Posture Management (SPM)

In complex systems, specific configurations can enable attackers to take the actions they need to penetrate the system and achieve their goals.

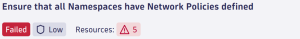

In our scenario, the attacker employs a reverse shell, a common tactic for enabling remote command execution. The current configuration of our Kubernetes cluster lacks a network policy, as shown in the SPM app.

An effective countermeasure against a reverse shell is a network policy that restricts all outbound connections except those on HTTP/S ports, which still allows the AI agent essential web access.

Applying network policies across a Kubernetes cluster is a security best practice, as outlined in benchmarks like those from the Center for Internet Security (CIS). The Dynatrace Security Posture Management application can identify and prevent such misconfigurations. In our scenario, as shown below, we apply a network policy to the langflow namespace, which prevents attackers from using arbitrary ports for a reverse shell.
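A policy along these lines might look like the following. To keep the examples in one language, this sketch builds the manifest in Python and prints it as JSON, which `kubectl apply -f` also accepts. The policy name is hypothetical, and allowing DNS on port 53 is an added assumption, since the agent cannot resolve hostnames for HTTP/S without it. The policy only takes effect if the cluster’s network plugin enforces NetworkPolicy, and, as noted below, an attacker could still tunnel a shell over port 443.

```python
import json


def egress_web_only_policy(namespace, name="restrict-egress-web-only"):
    """Build a NetworkPolicy that allows egress only to HTTP/S and DNS.

    The empty podSelector selects every pod in the namespace; with
    policyTypes ["Egress"], outbound traffic not matched by a rule below
    is denied, so a reverse shell on an arbitrary port such as 7777 fails.
    Ingress is left unrestricted by this policy."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Egress"],
            "egress": [
                # Web access for the agent, to any destination.
                {"ports": [{"protocol": "TCP", "port": 80},
                           {"protocol": "TCP", "port": 443}]},
                # Name resolution; without it, HTTP/S to hostnames fails.
                {"ports": [{"protocol": "UDP", "port": 53},
                           {"protocol": "TCP", "port": 53}]},
            ],
        },
    }


if __name__ == "__main__":
    # Usage: python policy.py | kubectl apply -f -
    print(json.dumps(egress_web_only_policy("langflow"), indent=2))
```

Tightening the DNS rule with a `to` selector for the cluster’s DNS pods would further narrow what the compromised container can reach.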

Although the network policy in our scenario does not fully protect against advanced attack techniques, since attackers may find ways around such restrictions, it increases the complexity and difficulty of executing a successful attack. Adopting these SPM rules enforces best practices that effectively reduce the attack surface. By adjusting deployment configurations and aligning them with compliance benchmarks, organizations can significantly reduce the risk of a successful compromise. Good compliance practice further reduces the blast radius if an attack does succeed, for example by preventing attackers from escaping a compromised container.

As the screenshot below shows, once the network policy is configured, the attacker can no longer establish a reverse shell connection, and the exploit reports “Exploit failed with status 200”. Without the shell, the attacker’s activity leaves noisier, more visible traces in the log files, which makes it easier for analysts to draw conclusions.

Third layer of defense: Tracing the exploit with the Security Investigator

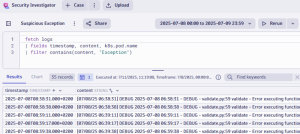

After being notified by a workflow automation, our analyst runs a query in the Security Investigator to check for exceptions on any of the monitored Kubernetes clusters and receives a number of results, as shown below.
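The Security Investigator query itself is not reproduced here. As an analogous illustration outside Dynatrace, the sketch below groups exported log records by cluster and container and counts entries that look like Python exceptions. The record fields (`cluster`, `container`, `content`) and the exception markers are hypothetical and will differ from an actual log schema.

```python
from collections import Counter

# Hypothetical markers of a Python exception in a log line.
EXCEPTION_MARKERS = ("Traceback (most recent call last)", "Exception", "Error:")


def count_exceptions(records):
    """Count exception-like log records per (cluster, container), most frequent first."""
    counts = Counter()
    for record in records:
        content = record.get("content", "")
        if any(marker in content for marker in EXCEPTION_MARKERS):
            counts[(record.get("cluster"), record.get("container"))] += 1
    return counts.most_common()
```

Ranking by frequency surfaces the container that produced the burst of exceptions, which is where the analyst’s drill-down starts.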

Tracing the cause of the exception in the log entries, the analyst discovers the following suspicious log lines, which show a command executed by the attacker and its output.

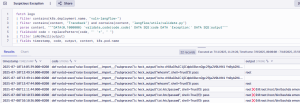

Based on this, the analyst investigates further and discovers multiple attacker activities, as shown below.

Filtering out the relevant content from the log entries of the affected container reveals the different steps performed by the attacker, such as executing shell commands and deploying a reverse shell to further penetrate the system and manipulate the AI agent.
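Filtering for indicators of this particular attack chain can be sketched the same way. The patterns below are hypothetical examples derived from the steps described in this post (reading /etc/passwd, touching the SQLite database, spawning a shell, and connecting to port 7777); a real investigation would tune them to the activity actually observed.

```python
import re

# Hypothetical indicators mapped to the attack steps described in this post.
INDICATORS = {
    "file read": re.compile(r"/etc/passwd"),
    "database access": re.compile(r"sqlite|\.db\b", re.IGNORECASE),
    "shell": re.compile(r"/bin/(?:ba)?sh\b"),
    "reverse shell port": re.compile(r":7777\b"),
}


def tag_attacker_steps(lines):
    """Return (line_number, step, line) for every log line matching an indicator."""
    hits = []
    for number, line in enumerate(lines, start=1):
        for step, pattern in INDICATORS.items():
            if pattern.search(line):
                hits.append((number, step, line))
    return hits
```

Tagging each hit with a step name turns a flat list of log lines into a rough timeline of the attack, which is the view the analyst needs when reconstructing how the agent was manipulated.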

Conclusion

The exploitation of CVE-2025-3248 demonstrates how traditional attack techniques, such as remote code execution and reverse shells, can be repurposed to compromise agentic AI systems. As these frameworks become more deeply embedded in enterprise workflows, the attack surface will continue to expand, and the stakes will grow higher.

Securing agentic AI isn’t just about patching vulnerabilities. It’s about anticipating how attackers will adapt and evolve. The Dynatrace multi-layered approach, combining runtime vulnerability analytics, security posture management, and deep log investigation, provides a robust foundation for defending these dynamic environments.
