Kubernetes is now the standard platform for running and managing containerized workloads in distributed environments. Kubernetes has fundamentally changed the way that organizations develop and run their applications at scale.

The rise of Kubernetes has also introduced new layers of complexity and new challenges for monitoring isolated, containerized, and highly dynamic application deployments. Many container runtimes are now in popular use, including Docker, CRI-O, and containerd, along with additional layers that run atop K8s, such as the Istio and Linkerd service meshes.

Dynatrace is the only full-stack monitoring solution that provides fully automated, zero-config, distributed tracing, metrics, and code-level visibility into these distributed containerized applications without changing your code, Docker images, or deployments.

Kubernetes cluster health and utilization monitoring

Now, Dynatrace has further extended its monitoring capabilities for Kubernetes. Kubernetes clusters are typically shared across teams. Cluster owners are responsible for providing enough resources and capacity to properly host and run workloads and support the teams who rely on them.

It’s critical that cluster owners understand the following about the clusters they manage:

- Cluster health and utilization of nodes

- Health status of individual nodes

- Requested usage of resources compared to actual usage

- How much additional workload can be deployed per node

We’re thrilled to announce the availability of dedicated, built-in Kubernetes/OpenShift cluster overview pages that provide you with extended visibility into Kubernetes cluster performance and health.

Monitoring Kubernetes clusters

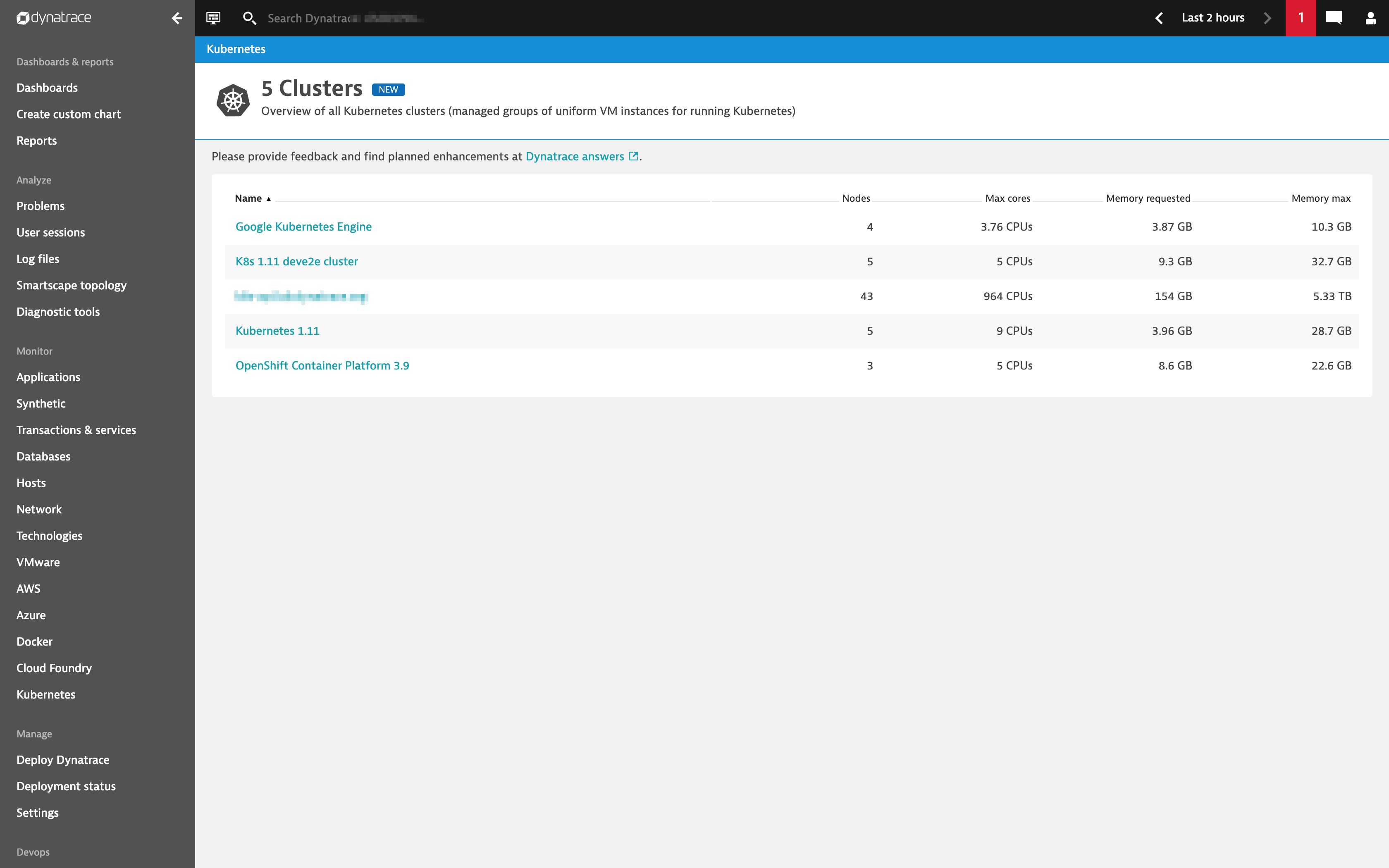

The dedicated Kubernetes/OpenShift overview page provides a new entry point for monitoring the performance of your clusters. Here you can learn the details of cluster sizing and utilization from a 10,000-foot perspective.

Utilization of cluster resources over time

As Kubernetes can run any containerized workload and allow for horizontal pod autoscaling, the actual utilization of cluster resources is likely to be volatile. This is why we’ve provided you with a “single pane of glass” for the most important utilization and performance metrics on the cluster level.

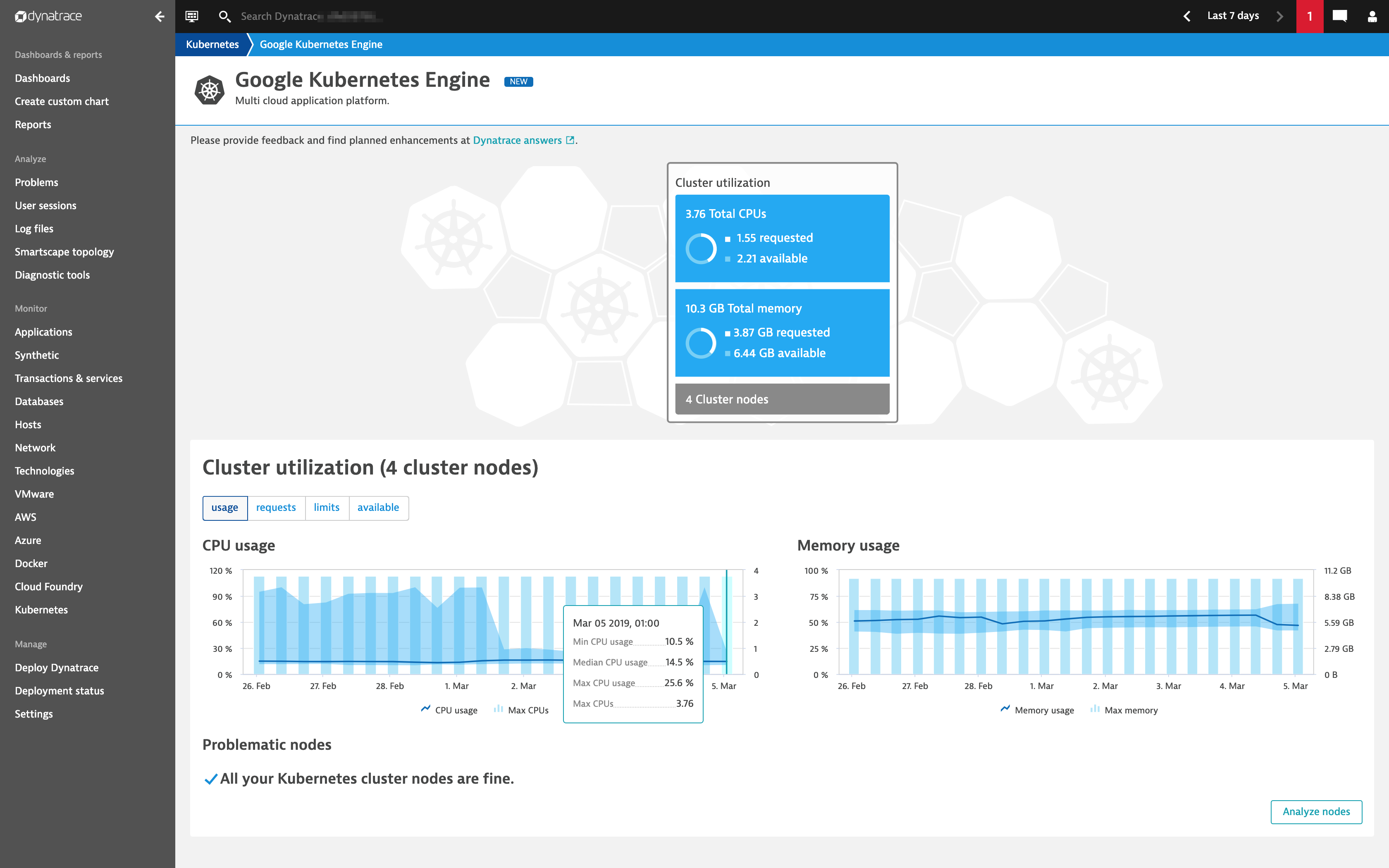

Cluster-level metrics include:

- Actual CPU/Memory usage of cluster nodes (Min, Max, Median)

- Total of CPU/Memory requests of containers running on cluster nodes (Min, Max, Median)

- Total of CPU/Memory limits of containers running on cluster nodes (Min, Max, Median). Limits may be overcommitted (i.e., over 100%).

- Available CPU/Memory resources for running additional pods/workloads on cluster nodes (Min, Max, Median)

- Max CPUs (size of cluster in terms of CPU)

- Max memory (size of cluster in terms of memory)
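To make the Min, Max, and Median figures more concrete, here is a minimal sketch of how per-node values could be rolled up into cluster-level metrics. It assumes each value is expressed as a percentage of the node's allocatable CPU; the node names, the numbers, and the helper functions are hypothetical and are not taken from Dynatrace.

```python
from statistics import median

# Hypothetical per-node CPU samples in cores (illustrative values only).
nodes = [
    {"name": "node-a", "allocatable": 2.0, "usage": 0.5, "requests": 1.0, "limits": 2.5},
    {"name": "node-b", "allocatable": 2.0, "usage": 0.25, "requests": 0.5, "limits": 1.0},
    {"name": "node-c", "allocatable": 4.0, "usage": 1.0, "requests": 1.0, "limits": 2.0},
]


def percent_of_allocatable(node, key):
    """Express one metric of a node as a percentage of its allocatable CPU."""
    return 100.0 * node[key] / node["allocatable"]


def summarize(nodes, key):
    """Aggregate a per-node percentage into min, max, and median across nodes."""
    values = [percent_of_allocatable(n, key) for n in nodes]
    return {"min": min(values), "max": max(values), "median": median(values)}


usage_summary = summarize(nodes, "usage")
limits_summary = summarize(nodes, "limits")

print("usage  %:", usage_summary)
print("limits %:", limits_summary)  # a max above 100 indicates overcommitted limits
```

In this sample data, node-a has limits totaling 2.5 cores on 2.0 allocatable cores, which is the overcommitted case mentioned in the list above.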

In the example image below, the cluster has a total of 3.76 CPUs, of which 1.55 CPUs are allocated through container CPU requests and 2.21 CPUs are still available for running further containers. The chart shows that the most utilized node has a CPU usage of 25.6% and the least utilized node has a CPU usage of 10.5%.

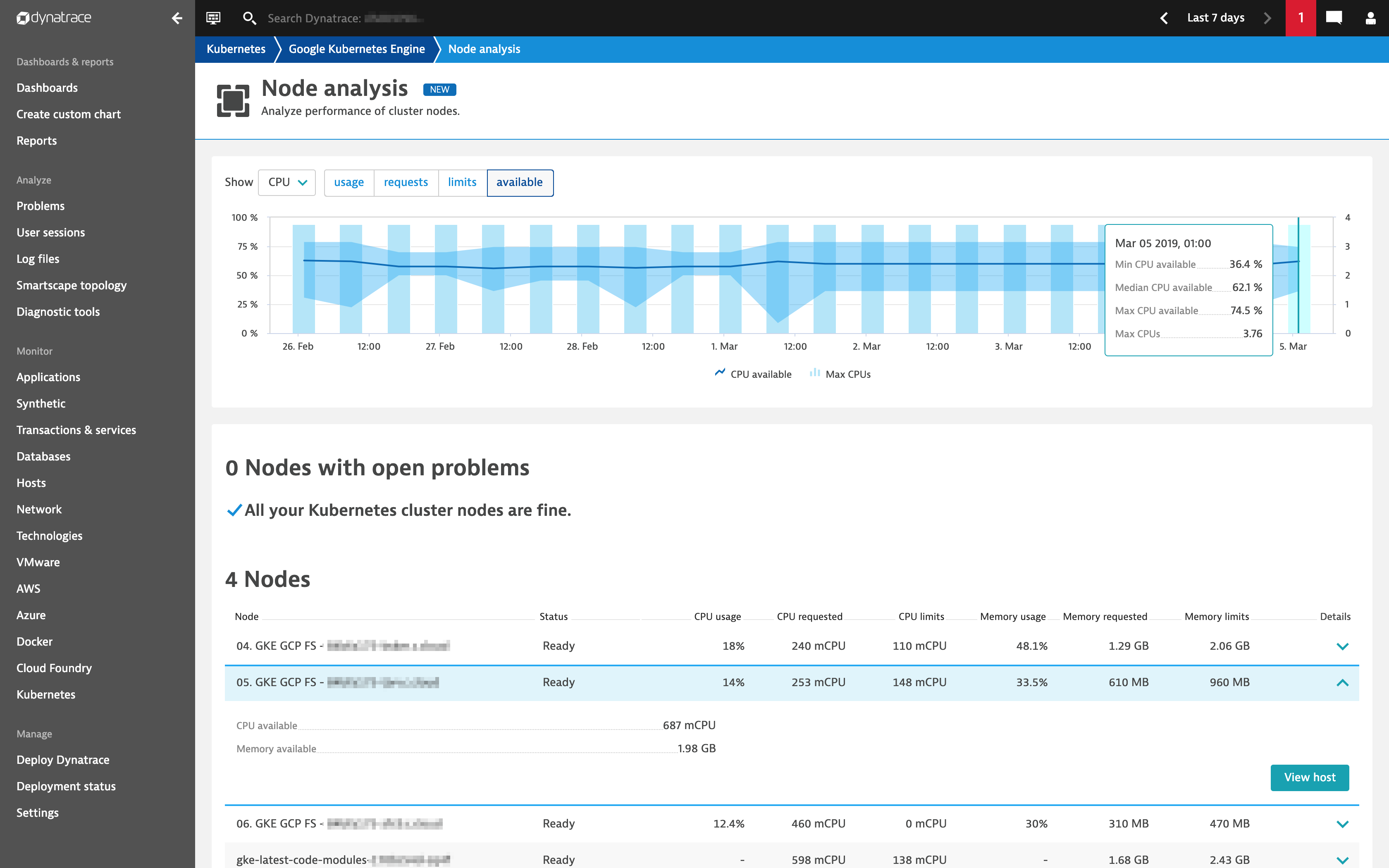
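The numbers in this example follow from a simple subtraction. The sketch below assumes that available CPU is the total CPU minus the sum of container CPU requests, which is consistent with the figures quoted above; the variable names are illustrative.

```python
# Figures quoted in the example above, in CPU cores.
total_cpus = 3.76
requested_cpus = 1.55

# Assumption: available capacity = total capacity - requested capacity.
available_cpus = round(total_cpus - requested_cpus, 2)

# Share of the cluster's CPU that is already reserved by requests.
requested_share = round(100 * requested_cpus / total_cpus, 1)

print(f"available: {available_cpus} CPUs")
print(f"requested: {requested_share}% of cluster CPU")
```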

Available resources on Kubernetes nodes

You can now get detailed insights into Kubernetes node metrics on a per-node level to understand how individual nodes are utilized. The Node analysis page even provides answers about how much workload can still be deployed on specific nodes. Just have a look at the CPU/Memory available metrics.

Note that this page will be further improved in upcoming releases with a flexible filtering mechanism based on Kubernetes node labels.
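As a rough illustration of how the CPU/Memory available metrics can answer the "how much more can I deploy" question, the following sketch estimates how many additional pods of a given size would fit on one node. The function name and the sample values are hypothetical, and the estimate deliberately ignores other scheduling constraints such as per-node pod limits, taints, and affinity rules.

```python
import math


def pods_that_fit(cpu_available, mem_available_mib, cpu_request, mem_request_mib):
    """Estimate how many more pods fit on a node, based on requests only."""
    if cpu_request <= 0 or mem_request_mib <= 0:
        raise ValueError("requests must be positive")
    by_cpu = math.floor(cpu_available / cpu_request)
    by_mem = math.floor(mem_available_mib / mem_request_mib)
    # The scarcer resource determines the result.
    return max(0, min(by_cpu, by_mem))


# Hypothetical node with 1.5 CPUs and 2048 MiB still available,
# and a pod that requests 0.25 CPUs and 512 MiB.
fit = pods_that_fit(cpu_available=1.5, mem_available_mib=2048,
                    cpu_request=0.25, mem_request_mib=512)
print(fit)
```

Here memory is the limiting resource: CPU alone would allow six more pods, but memory allows only four.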

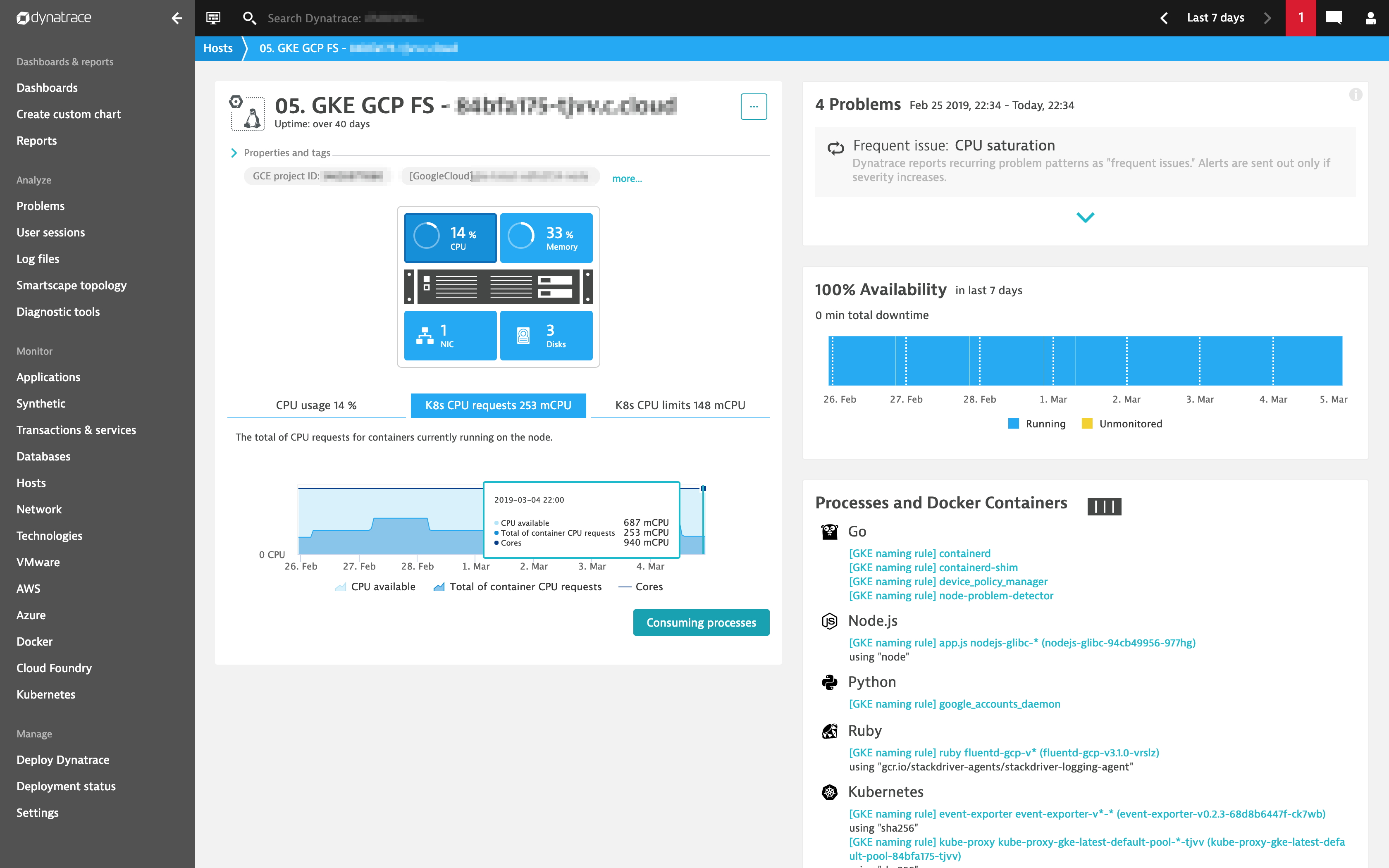

The View host button enables you to jump to individual host details pages where you’ll find code-level insights into your currently deployed containers, all relevant and cloud-specific host properties, and Kubernetes node labels.

Enable access to the new Kubernetes overview pages

With ActiveGate version 1.163, you’re now able to connect Dynatrace with your Kubernetes clusters and pull Kubernetes insights into Dynatrace via the Kubernetes API.

You can connect your Kubernetes/OpenShift environments by going to Settings > Cloud and virtualization > Kubernetes and clicking Connect new cluster. You’ll need to provide a Name for the cluster, a URL for the Kubernetes API, and a Bearer token. For details, see Connect your Kubernetes clusters to Dynatrace.
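For context on why a URL and a Bearer token are needed, the sketch below shows how a generic client could build an authenticated request to the Kubernetes API. It uses the standard `/api/v1/nodes` endpoint as an example; the host name and token are placeholders, and this is not a description of which endpoints ActiveGate actually calls.

```python
import urllib.request


def build_nodes_request(api_url, bearer_token):
    """Build (but do not send) a request that lists nodes via the Kubernetes API."""
    url = api_url.rstrip("/") + "/api/v1/nodes"
    return urllib.request.Request(
        url,
        headers={
            # The Bearer token authenticates the caller against the API server.
            "Authorization": f"Bearer {bearer_token}",
            "Accept": "application/json",
        },
    )


# Placeholder values; substitute your own API URL and token.
request = build_nodes_request("https://k8s.example.invalid:6443", "PLACEHOLDER_TOKEN")
print(request.full_url)

# Sending the request would look like this (not executed here):
# with urllib.request.urlopen(request) as response:
#     body = response.read()
```

If a request like this is rejected by your cluster, the token or the permissions of its service account are a likely place to look before configuring the connection in Dynatrace.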

What’s next

We’re already working on further enhancements of these new capabilities that we will release soon. These enhancements include:

- Flexible filtering mechanism of nodes on the Node analysis page

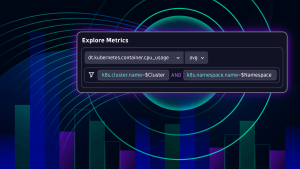

- Integration of the new Kubernetes cluster metrics with custom charting

- Native integration of Kubernetes node events with the Davis® AI causation engine

- Universal container-level metrics for resource contention analytics

There’s a lot more to come with regard to our Kubernetes support. We’d like to include you in the process of prioritizing upcoming features, so please check out the planned enhancements at Dynatrace answers and share your feedback with us.
