This article is the second part of our Azure services explained series. Part 1 explores Azure Service Fabric and some of its key benefits, and part 3 will take a look at Azure IoT Hub. In this post we take a closer look at Azure Functions.

As companies are trying to innovate and deliver faster, modern software architecture is evolving at the speed of light. We’ve quickly evolved from managing physical servers to virtual machines, and now we’re evolving from running on containers and microservices to running “serverless”.

What is “serverless”?

This new computing model is almost everywhere defined as a model which “allows you to build and run applications and services without thinking about servers.” If this definition makes you wonder why this is different from PaaS, you’ve got a point. But there is a difference.

With PaaS, you might write a Node app, check it into Git, deploy it to a Web Site/Application, and then you’ve got an endpoint. You might scale it up (get more CPU/Memory/Disk) or out (have 1, 2, n instances of the Web App), but it’s not seamless. It’s great, but you’re always aware of the servers.

With serverless systems like AWS Lambda, Azure Functions, or Google Cloud Functions, you really only have to upload your code and it’s running seconds later.

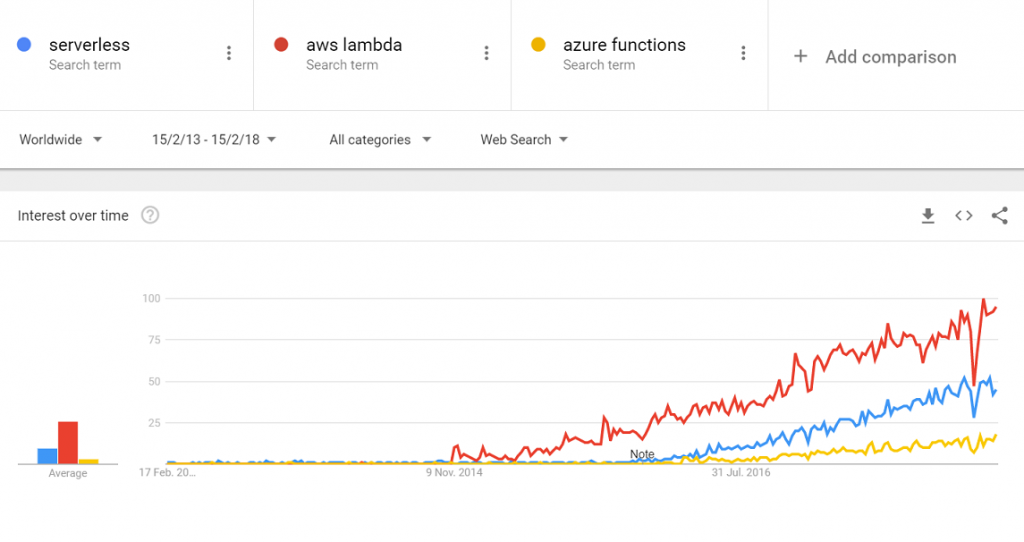

After microservices, serverless is the next step in the evolution of how we are building applications in the cloud. And because I always find it interesting to look at the origin of new things, let’s see when serverless, as well as the two main players, started to walk into the cloud computing hall of fame.

Clearly it was AWS who first made noise by launching AWS Lambda in November 2014. The term “serverless” started to trend later, in early 2016, almost simultaneously with the introduction of Azure Functions.

“I know it’s a steam engine… but what makes it move?”

It’s important to know that serverless is event-driven. This means that instead of building your application as a collection of microservices, you build it as a collection of functions, and these functions are triggered by events.

Events can come either from users of the system or from changes to state in the system. Events from users are quite straightforward: a user performs an action, and an event is fired. Events from system changes work much like a database trigger, in that changes to the persistence services fire events for the functions that are registered to them. Because stateless functions share nothing between invocations, the platform can run as many copies in parallel as the incoming events require, which is what lets this event-driven style of architecture scale out almost without limit.
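The idea of registering functions to events can be sketched in a few lines of plain Python. This is only an in-process illustration, not how Azure Functions or AWS Lambda are implemented: the `on` decorator, the `fire` helper, and the event names are all hypothetical and exist only for this example.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

# Hypothetical in-process stand-in for a serverless platform's event router.
Handler = Callable[[Dict[str, Any]], Any]
_registry: Dict[str, List[Handler]] = defaultdict(list)


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function to run whenever `event_type` fires."""
    def decorator(func: Handler) -> Handler:
        _registry[event_type].append(func)
        return func
    return decorator


def fire(event_type: str, payload: Dict[str, Any]) -> List[Any]:
    """Deliver an event to every registered function and collect the results."""
    return [handler(payload) for handler in _registry[event_type]]


@on("user.signed_up")  # event caused by a user action
def send_welcome(event: Dict[str, Any]) -> str:
    return f"welcome mail queued for {event['email']}"


@on("order.row_inserted")  # event caused by a change in stored state
def update_stats(event: Dict[str, Any]) -> str:
    return f"stats updated for order {event['order_id']}"


if __name__ == "__main__":
    print(fire("user.signed_up", {"email": "ada@example.com"}))
    print(fire("order.row_inserted", {"order_id": 42}))
```

Both functions are stateless: everything they need arrives in the event payload, so nothing prevents a platform from running many copies side by side.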

Why is this cool?

In serverless computing you no longer need to work out how much CPU a function needs in order to run. What matters is how long the function takes to run. You write your functions, publish them to the cloud, and pay only for the time they actually run.

Enter Azure Functions

Azure Functions is Microsoft’s answer to the serverless question. Written on top of the WebJobs SDK, Azure Functions is the logical successor to WebJobs.

As the serverless compute service of the Azure application platform, Azure Functions lets developers run code on demand, without having to explicitly provision or manage infrastructure. It provides an intuitive, browser-based user interface in which users can implement their code in a variety of programming languages, including C#, F#, and Java. This code can be triggered by events occurring in Azure, in third-party services, or in on-premises systems.

But there’s more to it: with Functions bindings, developers can interact with other data sources and services through their Function without worrying about how the data flows to and from it, which makes it easy to react to events. With this capability, developers don’t have to know too much about the underlying services they’re interacting with, so it’s simple to swap them out for different services later. A detailed list of the triggers and bindings supported by Azure Functions can be found in the Azure Functions documentation.
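To make that decoupling concrete, here is a minimal sketch in plain Python. It does not use the Azure Functions SDK. `OutputBinding`, `InMemoryQueue`, `InMemoryTable`, and `shout` are hypothetical names invented for this illustration, and the two in-memory classes merely stand in for real services.

```python
from typing import Dict, List, Protocol


class OutputBinding(Protocol):
    """Hypothetical interface: anything the host can hand results to."""

    def write(self, item: str) -> None: ...


class InMemoryQueue:
    """Stand-in for a queue service."""

    def __init__(self) -> None:
        self.items: List[str] = []

    def write(self, item: str) -> None:
        self.items.append(item)


class InMemoryTable:
    """Stand-in for a table or document store."""

    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}

    def write(self, item: str) -> None:
        self.rows[len(self.rows)] = item


def shout(message: str, out: OutputBinding) -> None:
    """The function body only knows that text comes in and text goes out."""
    out.write(message.upper())


if __name__ == "__main__":
    queue, table = InMemoryQueue(), InMemoryTable()
    shout("hello", queue)  # same function, queue-backed output
    shout("hello", table)  # same function, table-backed output
    print(queue.items, table.rows)
```

The function body never changes when the target service does. In this sketch the swap is a different argument; with real bindings it would be a change in configuration rather than in code.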

Because Azure Functions scales on demand, you pay only for the resources you consume. Apart from this consumption model, Azure also offers the App Service plan, where, as with any other App Service app, you pay for the run time of the instances you provision.
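The difference between the two billing models is easiest to see with a little arithmetic. The sketch below assumes that consumption billing depends on the number of executions and on memory-time (GB-seconds), and that a fixed plan is billed per instance-hour. All rates in it are made-up placeholders, not real Azure prices, and both function names are hypothetical.

```python
def consumption_cost(executions: int, avg_seconds: float, memory_gb: float,
                     price_per_gb_second: float,
                     price_per_million_executions: float) -> float:
    """Pay-per-use: cost grows with how often and how long functions run."""
    gb_seconds = executions * avg_seconds * memory_gb
    execution_fee = executions / 1_000_000 * price_per_million_executions
    return gb_seconds * price_per_gb_second + execution_fee


def fixed_plan_cost(instances: int, hours: float,
                    price_per_instance_hour: float) -> float:
    """Dedicated plan: cost depends on instance run time, not on traffic."""
    return instances * hours * price_per_instance_hour


if __name__ == "__main__":
    # Placeholder rates for illustration only (NOT real prices).
    pay_per_use = consumption_cost(
        executions=2_000_000, avg_seconds=0.5, memory_gb=0.25,
        price_per_gb_second=0.00002, price_per_million_executions=0.25)
    always_on = fixed_plan_cost(instances=1, hours=730,
                                price_per_instance_hour=0.10)
    print(f"consumption: {pay_per_use:.2f}  fixed plan: {always_on:.2f}")
```

Under these assumptions, a workload with few or short executions favors the consumption model, while a workload that keeps instances busy around the clock can make the fixed plan the cheaper option.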

Open source for more fun

Another cool thing in Azure Functions is that the runtime, templates, UI and underlying WebJobs SDK are all open source projects, so you can implement your own custom features.

Azure Functions vs. AWS Lambda

For those of you who are unsure which of the two main players in the serverless realm to use, bear in mind that there’s no clear winner here. There are a few differences between them, but what is a deal breaker for one application could be a desired feature for another. Just to mention a few:

- AWS Lambda is built on Linux, while Microsoft Azure Functions runs in a Windows environment (Linux support is in preview).

- AWS Lambda provides support for Node.js, Python, Java, C#, and Go, while Azure Functions provides support for the .NET languages C# and F# as well as JavaScript and Java.

- The two services differ in their architectures too. While AWS Lambda functions are built as standalone elements, Azure Functions are grouped together in an application. This means that AWS Lambda functions each specify their environment variables independently, whereas the Azure Functions in one application share a single set of environment variables.
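The architectural difference in the last point can be modeled with two small classes. This is a conceptual sketch only. `StandaloneFunction` and `FunctionApp` are hypothetical names that mirror the two styles described above and do not correspond to either vendor's SDK, and `DB_URL` is an invented setting.

```python
from typing import Callable, Dict

Env = Dict[str, str]
Func = Callable[[Env], str]


class StandaloneFunction:
    """Hypothetical model of the standalone style: each function owns its variables."""

    def __init__(self, func: Func, env: Env) -> None:
        self.func = func
        self.env = dict(env)

    def invoke(self) -> str:
        return self.func(self.env)


class FunctionApp:
    """Hypothetical model of the grouped style: all functions read one shared set."""

    def __init__(self, shared_env: Env) -> None:
        self.shared_env = dict(shared_env)
        self.functions: Dict[str, Func] = {}

    def register(self, name: str, func: Func) -> None:
        self.functions[name] = func

    def invoke(self, name: str) -> str:
        return self.functions[name](self.shared_env)


def read_orders(env: Env) -> str:
    return f"reading orders from {env['DB_URL']}"


def write_audit(env: Env) -> str:
    return f"writing audit log to {env['DB_URL']}"


if __name__ == "__main__":
    app = FunctionApp({"DB_URL": "db://shared"})
    app.register("read_orders", read_orders)
    app.register("write_audit", write_audit)
    app.shared_env["DB_URL"] = "db://moved"  # one change reaches both functions
    print(app.invoke("read_orders"), "|", app.invoke("write_audit"))
```

In the grouped style a single configuration change reaches every function in the application, which is convenient but also means functions cannot diverge. In the standalone style each function must be updated separately.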

What matters to you most?

At the end of the day, what really matters is the availability and consumption of other services within the cloud provider ecosystem. This is where AWS and Azure differ — their ability to pass data to backend services, perform calculations, transform data, store results, and quickly retrieve data. Depending on which of these services you’ll need to rely on the most, you should definitely check the specifics of AWS and Azure to see what fits you best.

Once your core runtime requirements are met, the differences between Azure Functions and AWS Lambda aren’t particularly important.

The pitfalls of going serverless

Even though the serverless offerings provide very similar functionality, technologies like Azure Functions pose new challenges around monitoring and troubleshooting.

Think of an Azure function that calls a slow API. This not only extends the run time of your function, which adds cost, it also affects the overall performance of your application. Or think of throttling: if requests are rejected because a throttling limit has been reached, you want to know about that too.
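As a rough illustration of the signals involved, the following sketch wraps function calls in a decorator that records run time and counts throttled requests. It is hand-rolled instrumentation in plain Python, not a Dynatrace or Azure feature. `monitored`, `Throttled`, the `metrics` dictionary, and the two sample functions are hypothetical.

```python
import time
from functools import wraps
from typing import Any, Callable, Dict


class Throttled(Exception):
    """Hypothetical error raised when a request is rejected by a rate limit."""


metrics: Dict[str, float] = {"calls": 0, "slow": 0, "throttled": 0, "total_seconds": 0.0}


def monitored(slow_threshold_seconds: float) -> Callable:
    """Record duration, slow calls, and throttled calls for the wrapped function."""
    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            metrics["calls"] += 1
            try:
                return func(*args, **kwargs)
            except Throttled:
                metrics["throttled"] += 1
                raise
            finally:
                elapsed = time.perf_counter() - start
                metrics["total_seconds"] += elapsed  # billed time grows with this
                if elapsed > slow_threshold_seconds:
                    metrics["slow"] += 1
        return wrapper
    return decorator


@monitored(slow_threshold_seconds=0.05)
def call_slow_api() -> str:
    time.sleep(0.1)  # stands in for a slow downstream API
    return "ok"


@monitored(slow_threshold_seconds=0.05)
def call_throttled_api() -> str:
    raise Throttled("rate limit reached")
```

Calling `call_slow_api()` increments the `slow` counter, and calling `call_throttled_api()` re-raises `Throttled` after incrementing `throttled`. A sketch like this only sees a single function, though, which is why it cannot replace visibility across the whole stack.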

As with any larger application, and even more so with microservices, you need end-to-end monitoring to get full visibility into all tiers of your stack.

The Dynatrace answer to the serverless question

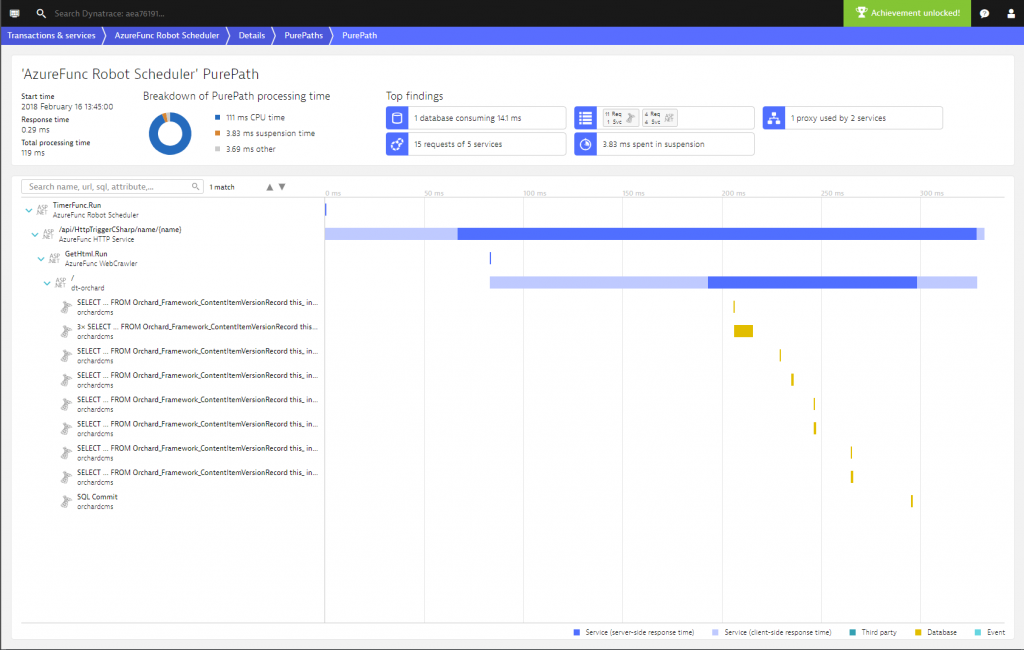

Here at Dynatrace we are working to close these gaps with automated, end-to-end monitoring for Azure Functions. Take this example:

Here you can see a transaction in which a timer-based function calls a second function via HTTP, which in turn downloads from a web app. The waterfall shows you what was called when, and how long the HTTP call took.

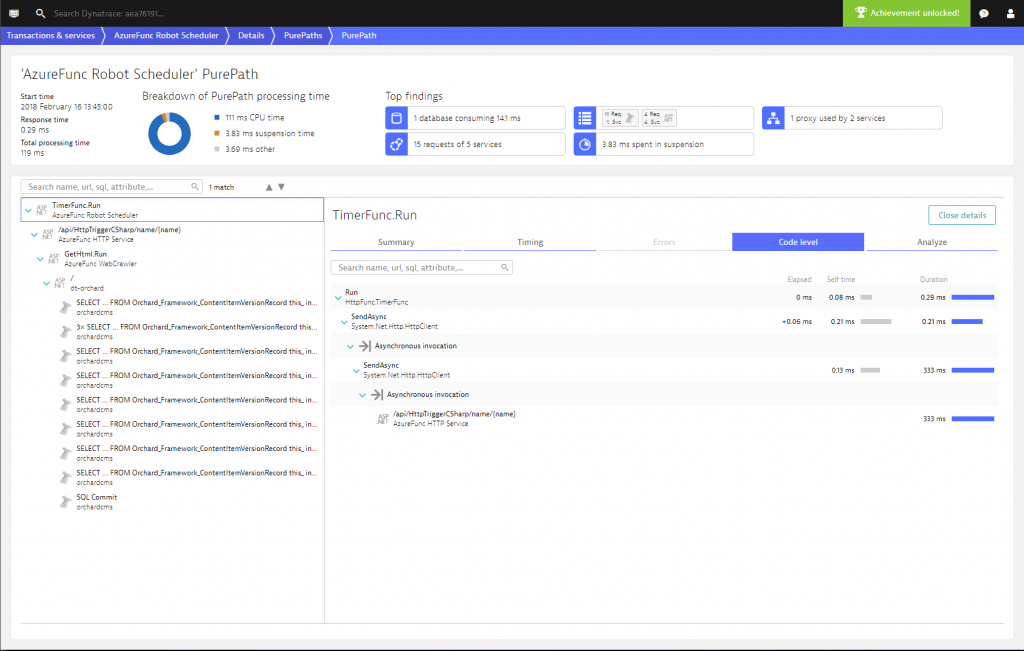

Want to go even deeper? Here you go: Dynatrace also gives you code-level insights into your Azure Functions.

Want to read more? Take a look at our tech preview of monitoring Azure Functions written in C# .NET.

I’d love to hear your thoughts on which serverless solution works best for your environment — drop a comment below!
