I have been a Dynatrace Guardian Consultant for the past three years, posted on long-term engagements that allow me to sink my teeth into interesting technical and business issues at the very core of the organization. In this instance, the question arose as to whether, and when, it is appropriate to optimize a WAN.

Most recently I’ve been on a dedicated assignment with a large European pharmaceutical company. They have offices and manufacturing plants around the globe, some in the most remote areas of the world from both a geographic and a technical connectivity standpoint. In this type of complex connected network, where links range from 1 Gbps MPLS fibre to radio-based 4 Mbps VPN links, how do you maintain a consistent, and desirable, end-user experience?

To invest or not invest in WAN optimization technology, that is the question

The client chose to mitigate the issues stemming from the high-latency, low-speed connections present in these scenarios by investing in WAN optimization technology. While this made complete sense from a conceptual standpoint, would the solution match performance expectations? In a world of never-ending demand for increased capacity, can an optimized 10 Mbps link provide the same experience as a 100 Mbps link?

From a business perspective, would adding WAN optimization to a link improve performance, or would it be better to simply increase the link speed? And how do you prove the ROI of the investment?

All these questions were being asked with the buck stopping at our team. So how would we (or you in a similar scenario) respond to these questions? The answer: Very carefully!

As with any good answer, we needed to reference multiple perspectives for an accurate and supportable recommendation. Luckily, during deployment of the WAN optimizers (Riverbed Steelheads) we also used a network application monitoring solution (Dynatrace NAM) on both the pre-optimized and post-optimized sides of the data center Steelhead. This was key to providing the multi-perspective insight required to answer the tough questions.

“But Matt, I’ve used Riverbed Steelheads before and they provide a wealth of statistics. What more do we need?” Well, here is a sampling of scenarios I devised that couldn’t have been solved without NAM and its decodes complementing the WAN optimization.

- We had two applications sharing a load balancer. Both used a similar Documentum back end but served very different business functions. Complicating matters, the only thing separating them was the URL. With Steelheads you cannot implement an optimization rule based on URL (at least not in the package we were using), so the rule had to be defined by port and IP. This forced an all-or-nothing approach that would cover either both applications or neither. The solution: use the HTTP decode in NAM (formerly DC RUM) to break out the URL and, more importantly, the transactions within each app, then overlay the WAN optimization stats to tie accelerated performance to the business.

- Riverbed Steelhead can do some amazing things for both TCP optimization and data reduction (Riverbed calls these transport streamlining and data streamlining, respectively). We took an aggressive approach and found that, for certain traffic, attempting to optimize it actually had an adverse effect. In speaking with the application team, we came to recognize a pattern: whenever an application used software-side compression, the Riverbed would also try to compress that same data. The result was a bloating effect, similar to what you would find if you tried zipping an already zipped file. Depending on the volume of operations, this could have a devastating effect on performance. Take typical database traffic, for example: millions of operations a day, each with a very small transaction size of 1 KB or so. Now bloat that 1 KB to 2 KB per transaction! That meant we were actually doubling the volume of traffic on the network to the database; yikes!
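The "zipping an already zipped file" effect is easy to reproduce on a small scale. The following is a minimal sketch, assuming plain zlib as a stand-in for both the application-side and the appliance-side compressor; it does not model Steelhead data streamlining, and the payload generator and function names are made up for illustration. It only shows that a second compression pass over already compressed data does not shrink it further and adds framing overhead on top.

```python
import random
import zlib
from typing import Tuple


def make_payload(size: int = 1024, seed: int = 0) -> bytes:
    """Build a text-like payload of exactly `size` bytes (hypothetical stand-in
    for a small database transaction)."""
    rng = random.Random(seed)
    rows = []
    total = 0
    while total < size:
        row = f"order_id={rng.randint(1, 10**6)};status=SHIPPED;qty={rng.randint(1, 99)}\n"
        rows.append(row)
        total += len(row)
    return "".join(rows).encode("ascii")[:size]


def compression_passes(payload: bytes) -> Tuple[int, int, int]:
    """Return (raw size, size after one pass, size after a second pass)."""
    once = zlib.compress(payload)   # application-side compression
    twice = zlib.compress(once)     # a second compressor applied to the same data
    return len(payload), len(once), len(twice)


if __name__ == "__main__":
    raw, once, twice = compression_passes(make_payload())
    print(f"raw={raw} B, compressed once={once} B, compressed twice={twice} B")
```

The first pass shrinks the text considerably; the second pass has nothing left to remove and comes out slightly larger than its input. The overhead per transaction is small in this toy setup, but multiplied across millions of small operations it is the kind of penalty that only shows up when you look at operation counts rather than bandwidth.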

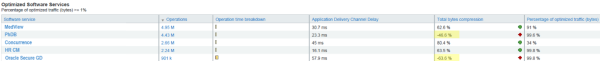

“But Matt, surely Riverbed dashboards allow you to see this?!” The answer is yes, but not without some know-how and digging within the interface. Because the dashboards in the Steelhead interface are designed with a bandwidth capacity mindset, applications with high volumes of operations but small bandwidth requirements often never make it into the Top X Talker dashboards. So the only option you have is to search for that IP and try to capture the issue while it is happening live on the Steelhead. On the other hand, you could do what we did and load the default WAN optimization dashboard in Dynatrace NAM, sort by operation count, and see it straight away.

The above screenshot points out one issue with a database and another with an application that was using Oracle Secure Global Desktop. With a simple exclusion rule on the Steelhead, all was healthy again.
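To illustrate why a bandwidth-ranked view can hide this kind of problem while an operation-ranked view surfaces it, here is a minimal sketch. The record layout, the application names, and every number in the sample are invented for illustration; they are not taken from the engagement, from the Steelhead interface, or from a NAM export.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AppStats:
    name: str
    operations: int   # operations observed in the interval
    lan_bytes: int    # bytes on the pre-optimized side
    wan_bytes: int    # bytes on the post-optimized side


def top_by_bytes(stats: List[AppStats], n: int) -> List[AppStats]:
    """Bandwidth-centric 'top talkers' view: rank by pre-optimization volume."""
    return sorted(stats, key=lambda s: s.lan_bytes, reverse=True)[:n]


def bloated_by_operations(stats: List[AppStats]) -> List[AppStats]:
    """Apps whose traffic grew under optimization, ranked by operation count."""
    bloated = [s for s in stats if s.wan_bytes > s.lan_bytes]
    return sorted(bloated, key=lambda s: s.operations, reverse=True)


# Hypothetical sample data, chosen only to show the effect.
SAMPLE = [
    AppStats("file-share", 20_000, 50 * 10**9, 10 * 10**9),
    AppStats("backup", 5_000, 30 * 10**9, 12 * 10**9),
    AppStats("documentum", 150_000, 8 * 10**9, 3 * 10**9),
    AppStats("database", 4_000_000, 4 * 10**9, 8 * 10**9),
    AppStats("remote-desktop", 900_000, 2 * 10**9, 26 * 10**8),
]

if __name__ == "__main__":
    print("Top 3 by bytes:", [s.name for s in top_by_bytes(SAMPLE, 3)])
    print("Bloated, by operations:", [s.name for s in bloated_by_operations(SAMPLE)])
```

In this made-up sample the two applications that got worse under optimization never appear in the top three by volume, yet they lead the list as soon as the view is ranked by operation count and filtered for traffic that grew.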

- Following on from the discovery about application-side compression, we wanted to test which compression worked better for one of our critical apps. We ran a load test in pre-production and exercised three scenarios:

  - Riverbed transport streamlining, Documentum compression

  - Riverbed transport streamlining and Riverbed data streamlining

  - No Riverbed streamlining, Documentum compression

Use multiple scenarios to support WAN optimization decision

The test consisted of several representative business-critical functions, and we again tracked the results using NAM. After each test, we examined the stats for each scenario and quickly mapped out a matrix of the results. The results clearly showed that Riverbed transport streamlining combined with either Riverbed data streamlining or Documentum compression performed better than no WAN optimization at all. Results were mixed, however, on whether Riverbed’s data streamlining was better than Documentum’s compression, so an executive decision was made to go with the first option: Riverbed transport streamlining and Documentum compression. This was chosen on the basis that not all sites had Riverbed Steelheads, and therefore more sites would benefit from the Documentum compression.

Those were just some of the scenarios I came across at that account. The possibilities are plentiful, even if you want to monitor something as simple as the ‘think-time’ of the optimization device itself. Remember, just because it is making things go fast doesn’t mean it isn’t prone to the same resource bottlenecks as any other networking device. To do this with NAM, use the ‘Application Delivery Channel Delay’ metric and observe the ‘think-time’ the WAN optimization controller is adding to user response time.

If you are deploying or have deployed WAN optimization capabilities and want great insight into its effects on end-user experience, application performance, and the WAN’s impact on these, be sure to check out Dynatrace NAM.
