A new study of 919 leaders shows how organizations are adopting agentic AI and where they’re facing challenges on the path to autonomous operations. As enterprises scale from pilots to production, they need strong guardrails and real‑time observability.

Agentic AI is accelerating into the enterprise faster than many leaders expected, bringing with it unprecedented complexity. Unlike traditional machine‑learning systems, agentic architectures combine goal‑directed reasoning, multi‑step autonomy, and real‑time adaptation across a wide variety of applications. This variability creates an exponentially growing number of interaction paths, along with the potential for unpredictable behaviors and downstream consequences that traditional monitoring can’t capture.

As organizations move from pilots toward autonomous operations, a clear trend is emerging: without guardrails, strategic human oversight, and a real‑time observability control plane, agentic systems face barriers to operating reliably at scale.

The Pulse of Agentic AI 2026 study—based on 919 global leaders responsible for agentic AI development and implementation—reveals how enterprises are adopting agentic AI, where they’re encountering barriers, and why observability is becoming foundational for building safe and reliable autonomous systems.

Agentic AI is rapidly expanding beyond ITOps

Although agentic AI is most established in IT operations, system monitoring, DevOps, cybersecurity, and software engineering, it’s expanding quickly into nearly every domain.

Key data points show:

- 72% use agentic AI in ITOps and DevOps, followed by software engineering (56%) and customer support (51%).

- Externally exposed use cases—product personalization, sales engagement, digital services—are the fastest‑growing over the next five years.

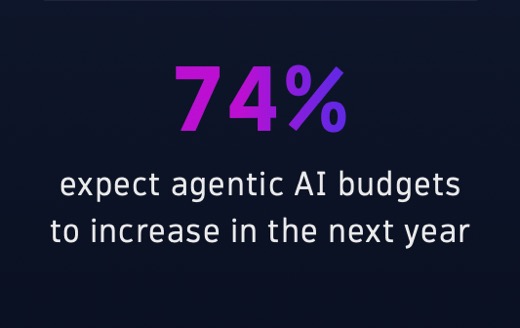

- 74% expect budget increases in the next 12 months, often by an additional $2–5M or more.

Agentic systems gain traction first in domains where quick response is imperative and where reliability and controlled automation are required. Observability and deterministic guardrails must therefore be foundational, not optional.

Even as customer‑facing use cases rise, organizations prioritize agentic AI in measurable, repeatable workflows with strong ROI, such as ITOps, data processing, reporting, and cybersecurity. Value and risk scale together, and the only way to manage both is through real‑time, end‑to‑end visibility into agent behavior.

Autonomous operations are growing—but hitting barriers

Organizations are no longer just experimenting. Portfolios are expanding quickly:

- 72% have 2–10 projects; 26% have 11 or more.

- 44% have agentic AI in production for select departments.

- 23% have enterprise‑wide integration in some areas.

Yet progress is uneven. The bottleneck is establishing trust in production‑level autonomy.

Top blockers include:

- Security, privacy, and compliance concerns (52%)

- Technical challenges in managing and monitoring agents at scale (51%)

- Difficulty defining when agents act autonomously vs. require human approval (45%)

- Limited real‑time visibility to trace and troubleshoot behavior (42%)

Organizations aren’t struggling with ideas—they’re struggling with control. Without deterministic guardrails, transparent model behavior, and real‑time signals showing what agents are doing and why, teams can’t safely operationalize autonomy.

Building trust requires incremental progression: human‑in‑the‑loop models, supervised autonomy, and phased functional expansion, all enabled by observability.
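The boundary between autonomous action and human approval can be illustrated with a small policy function. This is only a sketch under stated assumptions: the `AutonomyLevel` stages, the `risk` score, and both thresholds are hypothetical names and values chosen for illustration, not something the study prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyLevel(Enum):
    """Hypothetical stages of phased autonomy."""
    HUMAN_IN_THE_LOOP = 1  # every action needs approval
    SUPERVISED = 2         # only riskier actions need approval
    AUTONOMOUS = 3         # agent acts alone, below a hard limit


@dataclass(frozen=True)
class ProposedAction:
    agent: str
    name: str
    risk: float  # assumed risk score in [0.0, 1.0]


def decide(action: ProposedAction, level: AutonomyLevel,
           approval_threshold: float = 0.3,
           hard_limit: float = 0.8) -> str:
    """Return 'execute' or 'needs_approval' for a proposed action."""
    if not 0.0 <= action.risk <= 1.0:
        raise ValueError("risk must be in [0.0, 1.0]")
    # Deterministic guardrail: very risky actions always go to a human,
    # regardless of how much autonomy the agent has been granted.
    if action.risk >= hard_limit:
        return "needs_approval"
    if level is AutonomyLevel.HUMAN_IN_THE_LOOP:
        return "needs_approval"
    if level is AutonomyLevel.SUPERVISED and action.risk >= approval_threshold:
        return "needs_approval"
    return "execute"
```

Under these assumptions, widening autonomy becomes a matter of changing the level or thresholds per workflow, while the hard limit stays fixed and auditable.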

Trust and human oversight are intentional—and enduring

Despite enthusiasm for fully autonomous agents, human oversight remains central:

- 69% of agentic AI decisions are verified by a human.

- Top validation methods include data‑quality checks, human review, drift detection, and logs/traces.

- Only 13% rely exclusively on fully autonomous agents, but 64% combine supervised and autonomous models.

Organizations are building human-AI partnerships, not replacements. Over the long term, respondents expect a 60/40 human‑in‑the‑loop balance for business applications and 50/50 for IT and customer‑support functions.

Two insights stand out:

- Because agentic AI is probabilistic, enterprises depend on human judgment for high‑risk validation.

- Observability supplies the factual ground truth that makes this oversight effective.

As organizations scale, human involvement becomes more strategic—guiding goals and accountability while AI handles repeatable or time‑sensitive execution.

Reliability and resilience define success

To measure agentic AI success, organizations prioritize real‑time decision‑making, performance, efficiency, and reliability:

- Technical performance is the top metric (60%)

- Developer and operational efficiency follow

- Customer satisfaction and business outcomes come next

- Compliance and security are rising, especially in large enterprises

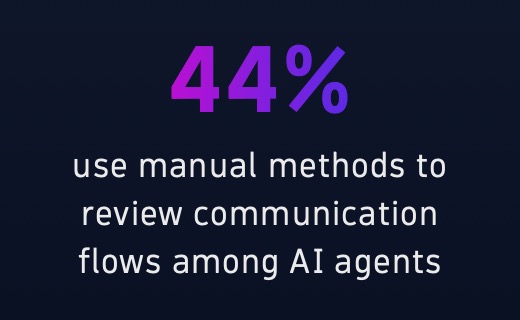

Still, 44% manually review inter‑agent communication flows—a clear scaling limitation.

Agentic systems are inherently interconnected. A performance regression or hallucination in one agent can cascade downstream into applications, user experiences, or security posture. As a result, resilience and rapid recovery—not just efficiency—must be built into agentic systems.

Doing so requires:

- Observability signals that detect anomalous or unexpected actions

- Real‑time tracing of inter‑agent communication

- Automated risk detection informed by factual telemetry

- Deterministic guardrails preventing stochastic failures from propagating

Reliability and security are no longer separate concerns—they’re inseparable in autonomous systems.
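To make the cascade risk concrete, the sketch below records which agent handed output to which and then asks which agents sit downstream of a failure. It is an illustration only: the `AgentTrace` class and the agent names are hypothetical, and a production system would build this graph from real trace data rather than manual `record` calls.

```python
from __future__ import annotations

from collections import defaultdict, deque


class AgentTrace:
    """Records sender -> receiver hand-offs for one workflow run."""

    def __init__(self) -> None:
        self._downstream: dict[str, set[str]] = defaultdict(set)

    def record(self, sender: str, receiver: str) -> None:
        """Note that `sender` passed output to `receiver`."""
        self._downstream[sender].add(receiver)

    def impacted_by(self, failed_agent: str) -> set[str]:
        """Breadth-first search for every agent reachable from the failure."""
        seen: set[str] = set()
        queue = deque([failed_agent])
        while queue:
            current = queue.popleft()
            for nxt in self._downstream.get(current, ()):
                # Skip agents already visited and the failed agent itself,
                # so cycles in the hand-off graph terminate.
                if nxt not in seen and nxt != failed_agent:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen
```

A guardrail could use the returned set to pause or quarantine only the affected agents instead of halting the whole workflow.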

Observability is a control plane for agentic AI

The study’s most strategic finding: observability is shifting from a supporting function to the control plane for agentic AI.

Usage is already broad:

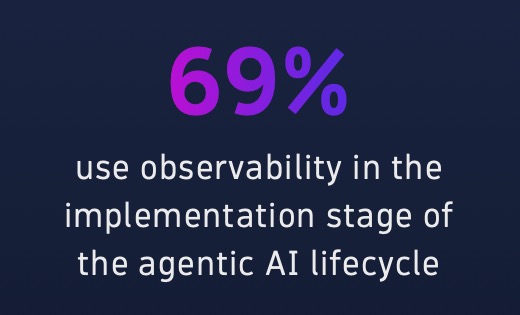

- 69% use observability during implementation

- 57% in operationalization

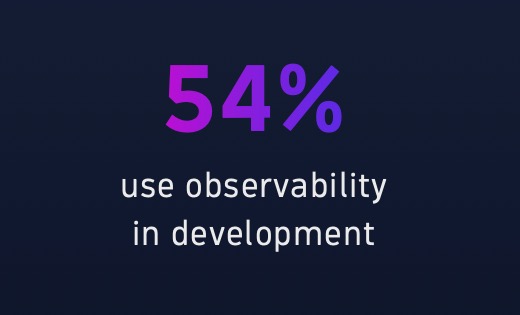

- 54% during development

But gaps remain in transparency, real‑time visibility, risk detection, and linking signals to business outcomes.

Because agentic behavior can’t be fully tested in advance, teams need real‑time observability to monitor performance in production and respond quickly to anomalies. Traditional monitoring tools can’t explain why an agent took an action, detect hallucinations in real time, or trace downstream impact.

A modern observability control plane must:

- Blend deterministic telemetry with probabilistic model insights

- Standardize semantic conventions and agent‑action signals

- Link behavior to business outcomes

- Detect and correct anomalies instantly

- Keep agents aligned to shared, real‑time facts

- Maintain clear human accountability and governance

This is the foundation organizations need to progress from supervised autonomy to reliable, production‑grade autonomous operations.
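Two of the requirements above, standardized agent‑action signals and anomaly detection, can be sketched in a few lines. The event fields and the z‑score check are assumptions made for illustration; they are not an established semantic convention or the study's method, and real systems would likely use richer signals than latency alone.

```python
from __future__ import annotations

import json
import statistics
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentActionEvent:
    """Hypothetical standardized record of one agent action."""
    agent: str
    action: str
    latency_ms: float
    business_outcome: str  # links the action to a business-level result


def to_json(event: AgentActionEvent) -> str:
    """Serialize with sorted keys so every agent emits the same shape."""
    return json.dumps(asdict(event), sort_keys=True)


def is_anomalous(history: list[float], value: float,
                 z_limit: float = 3.0) -> bool:
    """Flag a value more than `z_limit` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > z_limit
```

For example, with a latency history of `[100.0, 102.0, 98.0, 101.0, 99.0]`, a new reading of `250.0` would be flagged while `100.5` would not.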

The path to operationalizing agentic AI

Autonomous operations will redefine enterprise technology. But success requires treating autonomy as a maturity journey, not a leap:

- Start with preventive and recommendation‑driven workflows

- Build trust through human‑in‑the‑loop models

- Harden services, signals, and data paths

- Use observability to detect anomalies and validate actions

- Scale autonomy gradually—with transparency and governance

The message of the 2026 research is clear: the future is autonomous, but limited visibility is hindering reliability and control. Scaling agentic AI requires an observability‑based control plane that grounds probabilistic agent behavior in deterministic, real‑time facts.
