Metrics matter. We drive our business based on metrics, our compensation can be affected by metrics, and we like to think metrics make our lives easier. But metrics can also be misleading.

I live and breathe web and mobile performance and have done so for the last 20 years. In my services role, I’ve had the privilege of working with thousands of customers as they work to improve end user performance. As part of that work, the starting point for discussion is often: “How are you measuring end user performance?”

I’m commonly asked: what is the “right” performance metric? And I get where this is coming from. Our customers are measuring hundreds of applications, and within each application there might be hundreds, or even thousands, of key pages they want to measure. Having a single performance metric to rule them all may sound like the right approach, but in my experience, it isn’t always.

The simplest answer is always the best, right?

Basing a performance management program on a single metric is risky. What if it’s the wrong metric? What if it isn’t applicable to all my applications? Am I trying to force a metric on my developers or managers? And if that single metric isn’t the right one, then at least part of the work in collection, reporting, and analysis is wasted.

This is especially true for customers who seem to have chosen their performance metric based on what presents them in the best light, and in my experience, that happens often among the customers I interact with.

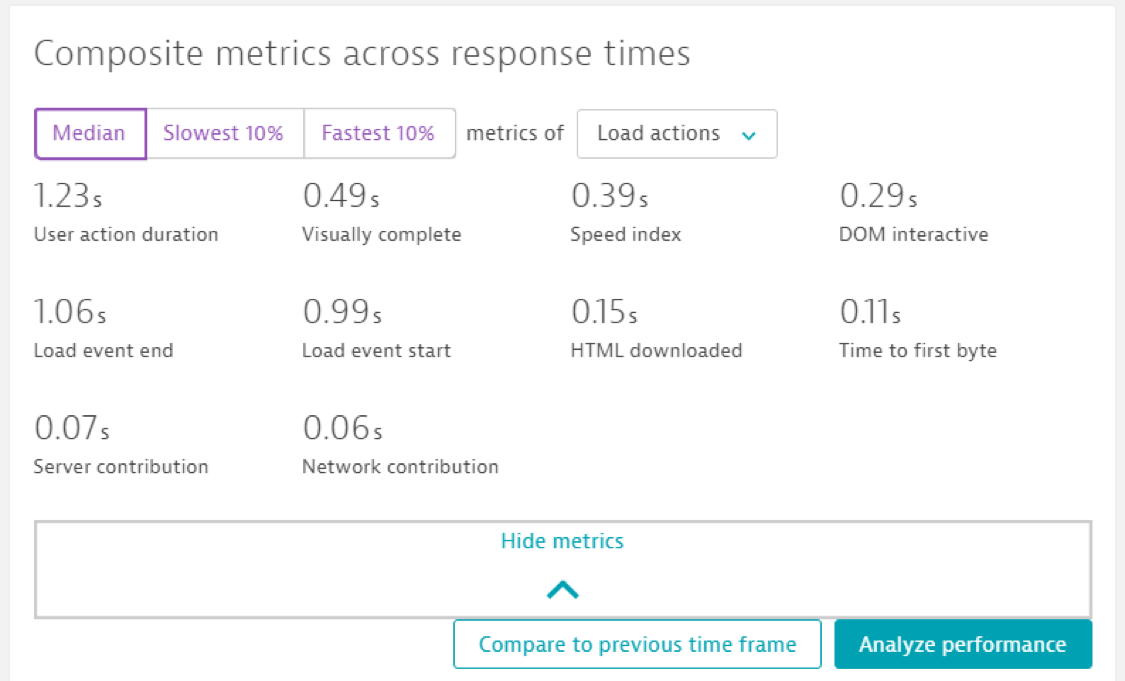

Customers with the “metrics exist to make me look good” mindset tend to use metrics like “first byte” or many of the DOM metrics. While these might work in some cases, for most modern applications they fire too early in the load cycle to accurately capture the customer experience.
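
To see how early these milestones sit, here is a minimal sketch, assuming a browser `PerformanceNavigationTiming` entry has been exported as a plain dictionary. The attribute names follow the W3C Navigation Timing Level 2 specification; the `milestones` helper and the sample values are hypothetical and purely illustrative.

```python
def milestones(entry: dict) -> dict:
    """Convert raw Navigation Timing attributes into offsets (ms) from navigation start.

    `entry` is assumed to mirror a PerformanceNavigationTiming object exported
    as a dict; for navigation entries, startTime is normally 0.
    """
    start = entry["startTime"]
    return {
        "first_byte": entry["responseStart"] - start,
        "dom_interactive": entry["domInteractive"] - start,
        "dom_content_loaded": entry["domContentLoadedEventEnd"] - start,
        "load_event_end": entry["loadEventEnd"] - start,
    }


# Hypothetical values for a page whose content keeps arriving after the HTML.
sample_entry = {
    "startTime": 0.0,
    "responseStart": 180.0,
    "domInteractive": 900.0,
    "domContentLoadedEventEnd": 1100.0,
    "loadEventEnd": 3400.0,
}

for name, offset_ms in milestones(sample_entry).items():
    print(f"{name:>20}: {offset_ms:7.1f} ms")
```

In this made-up example, first byte reports 180 ms while the load event does not finish until 3.4 s, so a program tracking only the earliest milestone would see almost none of the wait that follows.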

Let’s pause and take a step back. First, you must answer the question: what is the goal of an end user performance metric?

In my view, the goal should be to capture, as closely as possible, the user’s experience of performance when loading a page, without inflation from things that don’t matter to that experience, such as tags or lazy-loaded content. But how do we really measure this experience when it’s multifaceted, shaped by a combination of expectations, context, and prior experience, all working at a subconscious level to trigger “this is fast” or “this is slow”?

And this is exactly why no single performance metric will ever be perfect, and why the metrics available to us are constantly evolving.

We first had synthetic metrics like “end to end”, which worked well when sites were simple. Then the W3C timings began to be exposed (around the time Real User Monitoring became popular), and now we see more visual metrics such as Visual Complete and Speed Index.

So where does this leave us? Should we define metrics for every page or page type? Or do we just give up because we will never capture the nuance of human expectations? I don’t think so. We need to continue to manage our applications and to do that we need metrics.

What metrics have done the trick

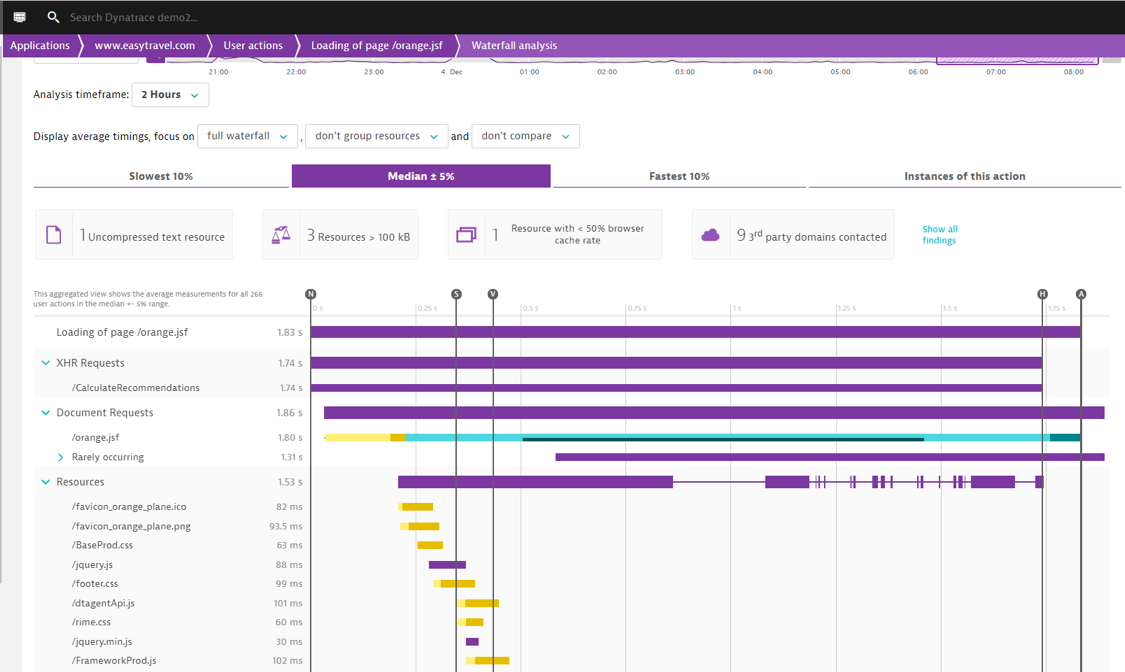

What I have seen work well, and what we promote to our customers, is a multi-metric approach. This means having primary and secondary metrics and continuing to evolve as the available metrics evolve. For this approach, we recommend a combination of Visual Complete, Speed Index, and LoadEventEnd. Together, these capture when the above-the-fold content is fully rendered (Visual Complete), how quickly useful content fills the screen along the way (Speed Index), and when page functionality is likely ready (LoadEventEnd).
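
Speed Index is commonly defined (for example, by WebPageTest) as the area above the visual-progress curve: the integral over time of the fraction of the above-the-fold area that is still incomplete, 1 − VC(t). Here is a minimal sketch that computes Speed Index and Visual Complete from a list of visual-completeness samples and reports them alongside LoadEventEnd. The `Frame` type, the function names, and the sample values are hypothetical; measuring completeness per frame (for example, from a video capture) is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Frame:
    time_ms: float       # time since navigation start
    completeness: float  # fraction of the above-the-fold area rendered, 0.0 to 1.0


def speed_index(frames: Sequence[Frame]) -> float:
    """Area above the visual-progress curve, treating progress as a step function.

    Assumes the final frame represents the finished page (completeness 1.0).
    """
    total = 0.0
    last_time, last_vc = 0.0, 0.0
    for frame in sorted(frames, key=lambda f: f.time_ms):
        # Until this frame arrived, the page was only `last_vc` complete.
        total += (1.0 - last_vc) * (frame.time_ms - last_time)
        last_time, last_vc = frame.time_ms, frame.completeness
    return total


def visually_complete(frames: Sequence[Frame]) -> Optional[float]:
    """First time the above-the-fold area is fully rendered, or None if never."""
    for frame in sorted(frames, key=lambda f: f.time_ms):
        if frame.completeness >= 1.0:
            return frame.time_ms
    return None


def page_metrics(frames: Sequence[Frame], load_event_end_ms: float) -> dict:
    """Bundle the three metrics so they are reported side by side."""
    return {
        "visual_complete_ms": visually_complete(frames),
        "speed_index": speed_index(frames),
        "load_event_end_ms": load_event_end_ms,
    }


# Hypothetical capture: half the viewport at 1 s, 90% at 2 s, done at 3 s.
frames = [Frame(1000, 0.5), Frame(2000, 0.9), Frame(3000, 1.0)]
print(page_metrics(frames, load_event_end_ms=3400))
```

Two pages can share the same Visual Complete time while differing sharply in Speed Index (one paints most of the viewport early, the other paints it all at the last moment), which is one reason the three metrics are read together rather than in isolation.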

At Dynatrace, our Business Insights team strives to help customers make sense of their metrics and the occasional metric overload. As we help customers move from a Gen2 to a Gen3 monitoring mindset, we like to step back and re-identify the right measurement and metric strategy. Our team’s deep knowledge of web and mobile performance and performance optimization helps our customers get to the root of the issue and optimize their end users’ experience in a way that matters to their business.

Are these three the perfect metrics? No. Will they work perfectly for every site and page? No. Will they be different in 12 to 24 months? More than likely. But they are a good starting point.

I see a future where performance metrics are merged with business metrics around engagement (time on site, bounce, interaction) and where we have a better understanding of what our customers care about on a page (Session Replay will be a key part of that at Dynatrace). Wherever this future takes us, it is clear to me that the most important part of a performance management program is not a single metric, but the ability to evolve and continually drive important decisions for our customers, no matter which metrics we use.

To learn more about Ben’s team and the Dynatrace Services organization, check out: /services-support/business-insights/
