In the following AWS re:Invent 2021 guide, we explore the benefits of AI-enabled observability on AWS and other cloud environments. These resources examine new approaches to AIOps that tame cloud complexity, improve application reliability and resiliency, and make app development smarter.

Digital transformation with AWS: Making it real with AIOps

When Amazon launched AWS Lambda in 2014, it ushered in a new era of serverless computing.

Serverless architecture enables organizations to deliver applications more efficiently without the overhead of on-premises infrastructure, which has revolutionized software development.

In fact, Gartner predicts that cloud-native platforms will serve as the foundation for more than 95% of new digital initiatives by 2025 — up from less than 40% in 2021. Gartner data also indicates that at least 81% of organizations have adopted a multicloud strategy.

Although the benefits of serverless computing are clear, gaining visibility into multicloud environments at scale is challenging. Amazon Web Services (AWS) and other cloud platforms provide visibility into their own systems, but they leave a gap concerning other clouds, technologies, and on-prem resources. These modern, cloud-native environments require an AI-driven approach to observability.

Dynatrace is making the value of AI real. Having recently achieved AWS Machine Learning Competency status in the new Applied Artificial Intelligence (Applied AI) category for its use of the AWS platform, Dynatrace has demonstrated success building AI-powered solutions on AWS. The Dynatrace partnership with AWS expands into EKS Anywhere and ECS Anywhere, using the Dynatrace Software Intelligence Platform to bridge the gap between customers’ AWS-hosted and on-premises Kubernetes and container environments.

A resource guide to Dynatrace and AWS for AWS re:Invent

In the following AWS re:Invent guide, we explore the benefits of observability through applied AI on AWS and other cloud environments. These resources examine new approaches to AIOps that tame cloud complexity, improve application reliability and resiliency, and make cloud-native app development smarter. As a result, organizations can deliver better user experiences and make better business decisions.

At AWS re:Invent 2021, the focus is on cloud modernization. As an AWS Advanced Technology Partner Independent Software Vendor (ISV), Dynatrace helps organizations manage the cloud journey and gain end-to-end visibility into the entire digital value chain. If you’re in person in Vegas, come visit Dynatrace at booth 100 to see the magic in action.

Observability with AWS and beyond

According to recent Dynatrace research, 99% of large organizations now use multicloud computing on AWS and other cloud platforms. As organizations move workloads and software development to multicloud environments to operate more efficiently and flexibly, traditional monitoring tools often fall short. These tools simply can’t provide the observability needed to keep pace with the growing complexity and dynamism of hybrid and multicloud architecture.

Adding to the complexity are containers (tools for cloud development), which can be ephemeral. Indeed, according to one survey, 87% of respondents report their main observability challenges lie in Kubernetes, microservices, and serverless frameworks.

To address these issues, organizations that want to digitally transform are adopting cloud observability technology as a best practice. In fact, IT analyst Gartner predicts that 30% of enterprises implementing distributed system architectures will have adopted observability techniques by 2024, up from less than 10% in 2020.

In this section, we explore how cloud observability tools differ from traditional monitoring: cloud-native observability platforms identify the root causes of anomalous events and provide automated incident response.

Check out some Dynatrace perspectives on modern cloud observability:

Common use-cases for AWS Lambda in the enterprise (and how to get observability for them)

What are the typical use cases for AWS Lambda? What are the challenges of operating Lambda functions? And how do you achieve observability?

What is observability? Not just logs, metrics and traces

What is observability? Gain a deeper understanding of how observability tames modern cloud complexity and enables you to transform faster.

Modern approaches to observability for multicloud environments

A modern observability solution transforms data from distributed environments into actionable business intelligence. Learn more here.

What is AWS Lambda?

What is AWS Lambda? Its approach to serverless computing has transformed DevOps. But how do you get the benefits without sacrificing observability?

Scale your enterprise cloud environment with enhanced AI-powered observability of all AWS services

Dynatrace expands its support for AWS observability to support all AWS services.

AWS made better through AIOps

In recent years, artificial intelligence for IT operations, or AIOps, has become increasingly popular as organizations strive to automate cloud operations, infrastructure monitoring, and development pipelines.

With AIOps, practitioners can apply automation to IT operations processes to get to the heart of problems in their infrastructure, applications and code.

As Gartner notes, AIOps includes event correlation, anomaly detection, and causality determination, which goes a long way toward helping organizations manage workloads. But to keep pace with the scale and complexity of modern environments running on AWS and other cloud platforms, organizations need the ability to instantly pinpoint root causes.

Deterministic AI, or causal AI, automatically reveals precise links between cause and effect in real time—and takes action on these root causes before they result in performance or user experience problems downstream. Unlike traditional machine-learning models that require extensive, time-consuming training, Dynatrace’s causal AI pinpoints normal and anomalous behavior in context in real time.

Bringing AIOps to AWS

The following resources demonstrate how Dynatrace’s causation-based AI is changing the game for AIOps to bring real-time root-cause analysis to your AWS environment and beyond.

What is AIOps? AI for ITOps–and beyond

Only deterministic AIOps technology enables fully automated cloud operations across the entire enterprise development lifecycle. Learn how deterministic, or causal, AI drives automated remediation.

From AIOps tools to an AIOps platform: what it takes to automate AI operations

AIOps tools can help you streamline operations. But teams need automatic and intelligent observability to realize true AIOps value at scale.

Dynatrace extends contextual analytics and AIOps for open observability

Dynatrace provides new AIOps capabilities for all data sources, including open-source observability data via OpenTelemetry and Prometheus.

AIOps done right

Enable autonomous operations, boost innovation, and offer new modes of customer engagement by automating everything.

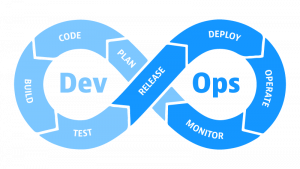

DevOps/DevSecOps with AWS

According to Deloitte, technology teams are now expected to deliver projects four times faster with the same budget. But most of that budget goes toward running the business—not software innovation.

That finding echoes our own research. According to a recent Dynatrace report, almost half (44%) of IT and cloud operations teams’ time goes toward manual, routine work.

Successful DevOps is as much about tactics as it is technology. Teams need to gain strategic insight into day-to-day tasks so they can automate DevOps pipelines and architect software for reliability and resiliency in modern cloud environments.

To achieve that strategic advantage, teams turn to AI and AIOps, the discipline of applying AI and advanced analytics to IT operations. Using these principles, organizations can take an AIOps approach to DevOps that leverages AI throughout the software development life cycle (SDLC). DevOps, together with complementary technologies and tactics, such as site reliability engineering (SRE), has the potential to transform the business.

Here are some of the ways Dynatrace helps teams integrate cloud-native development and operations using AI.

2021 DevOps Report

The need for speed has never been more urgent in today’s hyper-digital age. This places a burden on DevOps teams, who must continue to meet their service level agreements (SLAs) for the business, while driving shorter development cycles, producing better quality software, and innovating faster than ever.

Re-imagining your DevOps tools to build a next-generation delivery platform

Many organizations that have taken on DevOps methodologies still struggle with efficiency given tool fragmentation.

Unbreakable DevOps Pipeline: Shift-Left, Shift-Right & Self-Healing

This tutorial demonstrates the role of full-stack monitoring in modern delivery pipelines. Prevent poor-quality code changes from affecting end users.

9 key DevOps metrics for success

Now that you’ve deployed DevOps, how do you measure success? Key measures such as deployment frequency, lead time for changes, and change failure rate measure the dexterity, agility and quality control underlying your team.

DevOps eBook: A Beginners Guide to DevOps Basics

Every stage of the DevOps pipeline requires some amount of analysis to drive decisions, responses, and automation. From fundamentals to best practices, learn how to architect software for reliability and resiliency in modern cloud-native environments in this comprehensive ebook.
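To make the DevOps metrics named above more concrete, here is a minimal Python sketch of how deployment frequency, lead time for changes, and change failure rate might be computed from a list of deployment records. The `Deployment` record shape, the function names, and the sample data are all hypothetical and chosen for illustration only; this is not a Dynatrace or AWS API, and real pipelines would pull these values from their own delivery tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when the change reached production
    failed: bool            # True if it led to an incident or rollback


def deployment_frequency(deployments, window_days):
    """Average number of deployments per day over the observation window."""
    return len(deployments) / window_days


def median_lead_time(deployments):
    """Median time from commit to production, as a timedelta."""
    return median(d.deployed_at - d.committed_at for d in deployments)


def change_failure_rate(deployments):
    """Fraction of deployments flagged as failed."""
    return sum(d.failed for d in deployments) / len(deployments)


# Made-up sample: four deployments in one week, one of which failed.
start = datetime(2021, 11, 29, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2), failed=False),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=4), failed=False),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=6), failed=True),
    Deployment(start + timedelta(days=5), start + timedelta(days=5, hours=8), failed=False),
]

if __name__ == "__main__":
    print("Deployments per day:", deployment_frequency(sample, window_days=7))
    print("Median lead time:", median_lead_time(sample))
    print("Change failure rate:", change_failure_rate(sample))
```

The median is used for lead time here because a single slow change can skew an average; teams may reasonably prefer a mean or a percentile instead.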

Cloud migration and digital transformation

Organizations across every industry are undertaking cloud migration to accelerate digital transformation, mitigate operational costs and security risks, and drive business value.

As AWS and other cloud platforms reshape how organizations deliver value to their customers, organizations need help planning, executing, and optimizing their migrations.

Here are some practical ways Dynatrace uses AI and automation to help organizations migrate existing workloads to the cloud.

What is cloud migration?

Like any move, cloud migration requires a lot of planning and preparation, but it also has the potential to transform the scope, scale, and efficiency of how you deliver value to your customers.

Healthcare giant accelerates application modernization and cloud migration with Dynatrace

Cloud migration often requires companies to modernize their cloud infrastructure as well. One health care company migrated to the cloud, consolidated apps and struggled with visibility as a result, which prompted them to adopt Dynatrace for cloud observability.

Wiley accelerates digital transformation to enable seamless access to research and education content globally with Dynatrace

Digital transformation creates complexity. Wiley, a leader in research and education for more than 200 years, turned to observability to simplify their management of infrastructure and to enable the product innovation at the heart of its cloud infrastructure.

5 Steps to Accelerate AWS Cloud Migration

If you are transferring business services to the cloud, here are 5 steps to accelerate AWS cloud migration.

The Department of Veterans Affairs’ journey to modernization

The race to fully adopt cloud applications is on. Learn how the U.S. Department of Veterans Affairs (VA) is taking one of the most aggressive approaches to cloud transformation in their journey to modernization.

Using Dynatrace to master the 5 pillars of the AWS Well-Architected Framework

To guide organizations as they plan, migrate, transform, and operate their workloads on AWS, Amazon developed the Well-Architected Framework. Learn more about Using Dynatrace to master the 5 pillars of the AWS Well-Architected Framework.

To find out more about Dynatrace at AWS re:Invent 2021, see AWS re:Invent 2021 shines a light on cloud-native observability.
