Enterprise organizations face mounting pressure to become more customer-centric, agile, scalable and responsive. Compounding this is the fact that IT environments, faced with digital transformation, are changing rapidly and growing more complex by the day. As a consequence, digital performance is a top priority for most, which means the performance monitoring space has become congested and noisy.

My daily work puts me in touch with cloud service providers, managed service providers, government bodies and enterprise organizations – all of whom are entrenched in the ‘digital transformation’ journey. Today, I’m keen to share some useful insights I’ve gleaned from more than 20 years working in this space.

Specifically, this post presents:

- The three most common approaches to unification and why they fall short in the current digital landscape

- The four key elements of true unified monitoring

Three most common unification approaches

- Unification via acquisition

Stalwart IT Operations Management vendors like IBM, CA, BMC and HP (once known as the BIG 4 in ITOM) have made multiple acquisitions and major investments in an attempt to give businesses a ‘single pane of glass’. But throw back the cover on these solutions and you’ll see numerous tools pinned together, each with its own configuration and its own data repository. These tools are only loosely integrated with each other and struggle to deliver cohesive ‘unified monitoring’.

- A unified platform underpinned by fragmented tools

The second approach typically looks like this: New, aggressive APM players start by offering monitoring tools for a specific use case (e.g. Java/.NET, Ruby or Network). They then extend their solution, or address gaps in their offering, through acquisitions or internal development.

It’s a similar approach to the big vendors, except that these guys boast a common platform that unifies the disparate data sets, tools and reporting underpinning it. The problem is, unified monitoring is an afterthought. So, each time they extend their offering, the solution requires re-design and re-architecture. Such a fragmented approach will always struggle to monitor complex environments holistically.

- Pure analytics and algorithms

This third approach is commonly known as IT Operations Analytics (ITOA). It relies on third-party data sources and integrations, ‘fit for purpose’ algorithms and skilled people who know exactly how to stitch all of these together. The problem is that the IT space is changing so quickly, and is so complex, that you can’t rely on manual processes. Not to mention that IT resources are usually very stretched.

Additionally, success is highly dependent on rich data sources that provide context; after all, no matter how great the algorithms, the principle of ‘garbage in, garbage out’ applies.

What should unified monitoring look like in the digital world today?

If we are to truly unify monitoring, we need a complete and directly connected set of in-context data, which gives us a clear view of cause and impact. This is only achievable if we address four fundamental elements:

Unified monitoring element 1: A solution built “by design”

Unified monitoring today must be built from the ground up. Throwing a monitoring layer over the top, or adding fragmentary components as an afterthought, will never properly address gaps and monitoring shortcomings.

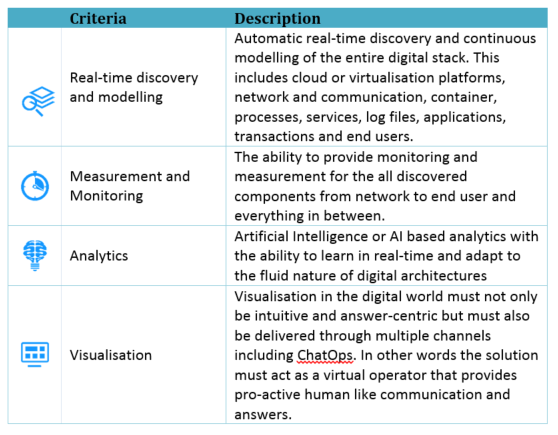

Unified monitoring element 2: All core criteria of the solution need to work in unity, as one

The table below shows the four foundational monitoring elements. Each one needs the others; otherwise, the overall monitoring function will fail.

Unified monitoring element 3: Forget correlation; cause and impact analysis is crucial

Using causation analysis is the only way forward for monitoring now. When you consider the complexity, modularity, fluidity, and scale of digital architectures with millions of dependencies and even more metrics, correlation alone simply doesn’t cut it. At best it can narrow down the scope and at worst it can lead to incorrect conclusions.

A causal model, with artificial intelligence at its heart, reduces our reliance on humans having to perform eyeball analytics on insurmountable amounts of data. This type of cause and impact analysis is only possible when all core elements of monitoring are tied together in a single, coherent system.
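To make the difference between correlation and cause-and-impact analysis a little more concrete, here is a minimal sketch in Python. It is purely illustrative and is not how any particular product works: the topology, the component names and the helper functions `root_causes` and `impacted_by` are all hypothetical. It assumes the monitoring system already knows which component depends on which, then walks those dependencies to separate the likely cause from the components that are merely affected.

```python
from collections import defaultdict

# Hypothetical topology: each component maps to the components it depends on.
DEPENDS_ON = {
    "web-frontend": ["checkout-service", "search-service"],
    "checkout-service": ["payments-db"],
    "search-service": ["search-index"],
    "payments-db": [],
    "search-index": [],
}


def root_causes(depends_on, unhealthy):
    """Return unhealthy components that have no unhealthy dependency.

    An unhealthy component whose dependencies are all healthy is the
    most likely origin of the problem in this simplified model.
    """
    return sorted(
        c for c in unhealthy
        if not any(d in unhealthy for d in depends_on.get(c, []))
    )


def impacted_by(depends_on, component):
    """Return everything that depends, directly or transitively, on `component`."""
    # Invert the graph: dependency -> components that rely on it.
    dependents = defaultdict(list)
    for comp, deps in depends_on.items():
        for dep in deps:
            dependents[dep].append(comp)

    seen, stack = set(), [component]
    while stack:
        for parent in dependents[stack.pop()]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return sorted(seen)


# Three components raise alerts at the same time.
unhealthy = {"web-frontend", "checkout-service", "payments-db"}

causes = root_causes(DEPENDS_ON, unhealthy)
impact = {c: impacted_by(DEPENDS_ON, c) for c in causes}

print("Likely root cause(s):", causes)
print("Impact:", impact)
```

Correlation alone would tell you only that three alerts fired together. With the dependency model, the same three alerts collapse into one probable cause plus a list of affected components, which is the kind of answer the sketch is meant to illustrate. A real causal model would of course also need timing, transaction and metric data, not just topology.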

Unified monitoring element 4: Clear answers with rich context

Organizations today need intuitive visualization and clear context. IT departments are getting busier, with increasing workloads and information to digest and analyze. Every extra chart or alert without context and impact means more time and effort required by IT. And every extra click to correlate different events to a probable common root cause adds more friction in the workplace.

The shifting goalposts of IT monitoring make it one of the most difficult undertakings for enterprise organizations, but it’s a task that’s marked with urgency and is critical to the success of ‘digital transformation’ initiatives.

In my next post, I’ll look at the deeper history surrounding the failed attempts at ‘unified monitoring’. I believe it will provide further context for why performance monitoring needs to address all four criteria I’ve outlined today.

If what I’ve presented above resonates, I highly recommend that you consider trialing Dynatrace, the all-in-one, full-stack availability and performance monitoring solution powered by artificial intelligence.
